Creating a beautiful, functional website is only the first step.

The real challenge begins when it’s time to optimize for conversions, engagement, and business outcomes. Teams pour countless resources into attracting visitors, but what happens next? How do you ensure every click counts?

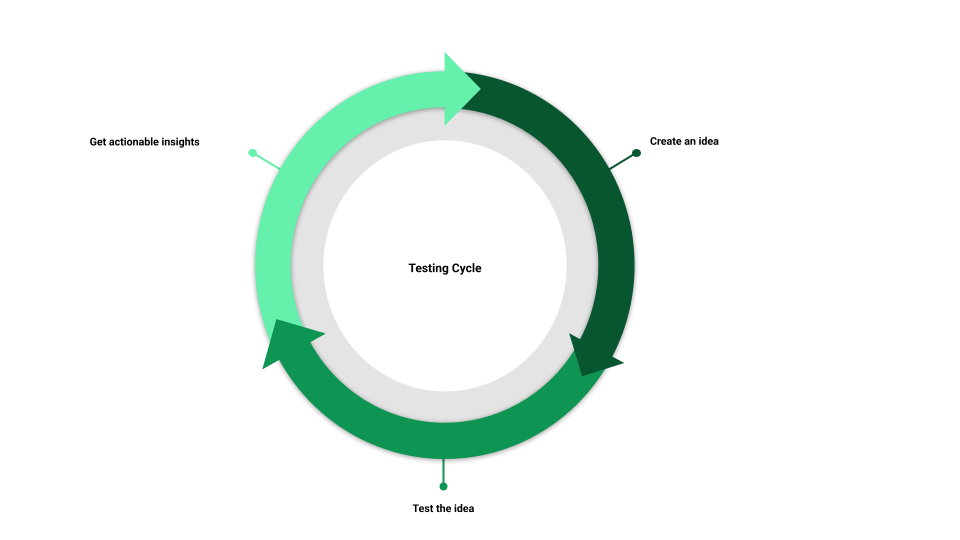

At Webflow, we set out to answer that question with Webflow Optimize – an AI-powered tool designed to help customers test, analyze, and improve their websites. Our approach follows a structured cycle: idea generation → testing → actionable insights → iteration.

Webflow Optimize streamlines how marketers create, test, and analyze their website content, saving them valuable time. Under the hood, it combines proprietary neural network models with generative AI.

This post covers the engineering choices, trade-offs, and solutions behind Webflow Optimize's optimization engine, and shows how AI is used to help marketers.

AI suggestions: Accelerating idea creation

Generating high-impact website improvements, such as headlines, layouts, and calls to action (CTAs), is often slowed by creative blocks and time constraints. To address this, we built generative AI tools like Suggest Copy and the AI Landing Page Generator.

Suggest Copy helps marketers craft compelling headlines, CTAs, and other copy variations. The AI Landing Page Generator goes a step further, proposing both text and precise placement suggestions tailored to new landing pages.

As with many applications built on large language models (LLMs), a key challenge is ensuring that outputs are not only inspiring, but also highly relevant to the user’s context. For the AI Landing Page Generator, this also meant ensuring that the proposed placements could integrate seamlessly into the structure of an existing page.

To tackle these challenges, we built a webpage “crawler” to collect metadata about the target webpage, including detailed context around the areas being tested. We also retrieve live testing information from our internal system to give the model a complete view of the environment. Because relevance to the context is essential, we created interactive playgrounds where users could test suggestions and provide feedback. That feedback was distilled into our prompts.
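Here is a minimal sketch of the crawling idea, assuming the crawler works on raw HTML and the area under test is identified by an `id` attribute. `PageContextCollector`, `build_prompt_context`, and the output keys are hypothetical names for illustration; the post does not describe which metadata Webflow's crawler actually collects.

```python
from html.parser import HTMLParser

# Hypothetical sketch: not Webflow's crawler.
# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "br", "col", "embed", "hr", "img", "input", "link", "meta", "source", "wbr"}
HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class PageContextCollector(HTMLParser):
    """Collects page-level metadata plus the text inside one target element."""

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.title = ""
        self.description = ""
        self.headings = []
        self.target_text = []
        self._in_title = False
        self._heading_parts = None  # a list while inside a heading, otherwise None
        self._target_depth = 0      # number of open elements inside the target

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attrs.get("name") == "description":
            self.description = attrs.get("content") or ""
        elif tag in HEADING_TAGS:
            self._heading_parts = []
        if tag in VOID_TAGS:
            return
        if self._target_depth > 0:
            self._target_depth += 1
        elif attrs.get("id") == self.target_id:
            self._target_depth = 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in HEADING_TAGS and self._heading_parts is not None:
            self.headings.append(" ".join(self._heading_parts))
            self._heading_parts = None
        if tag not in VOID_TAGS and self._target_depth > 0:
            self._target_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        if self._in_title:
            self.title += text
        if self._heading_parts is not None:
            self._heading_parts.append(text)
        if self._target_depth > 0:
            self.target_text.append(text)


def build_prompt_context(html, target_id, live_tests):
    """Combine crawled page metadata with live test info into one context dict for the LLM."""
    collector = PageContextCollector(target_id)
    collector.feed(html)
    collector.close()
    return {
        "page_title": collector.title,
        "page_description": collector.description,
        "page_headings": collector.headings,
        "target_element_text": " ".join(collector.target_text),
        "live_tests": live_tests,
    }
```

The resulting dictionary can be serialized into the prompt so the model sees both the page as a whole and the exact area it is rewriting.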

To validate the output of the AI Landing Page Generator, we run placement validators that check whether each AI response is valid for the target page. In some cases, a final touch is still needed: correcting common errors in AI-generated code with specialized programs. This final validation and repair step, part of our “last mile” approach, greatly boosted the accuracy of the output.
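One way to picture a placement validator and a “last mile” repair step is the sketch below. It assumes each AI suggestion arrives as a dictionary with a `target_id`, a `position`, and an `html` snippet, and that two common code errors are a stray markdown fence around the HTML and unclosed tags. `validate_placement`, `repair_snippet`, and the suggestion format are hypothetical; the post does not say which checks or repairs Webflow actually runs.

```python
from html.parser import HTMLParser

# Hypothetical sketch: suggestion format and repair rules are assumptions.
VOID_TAGS = {"area", "br", "col", "embed", "hr", "img", "input", "link", "meta", "source", "wbr"}
FENCE = "`" * 3  # built this way to avoid a literal markdown fence inside this example


class TagBalanceChecker(HTMLParser):
    """Tracks open tags so unbalanced HTML can be detected or repaired."""

    def __init__(self):
        super().__init__()
        self.stack = []
        self.errors = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_startendtag(self, tag, attrs):
        pass  # self-closing tags never need a matching close

    def handle_endtag(self, tag):
        if self.stack and self.stack[-1] == tag:
            self.stack.pop()
        else:
            self.errors.append(f"unexpected </{tag}>")


def repair_snippet(snippet):
    """Fix two common LLM output errors: a markdown fence and unclosed tags."""
    text = snippet.strip()
    if text.startswith(FENCE):
        lines = text.splitlines()[1:]  # drop the opening fence (it may carry a language tag)
        if lines and lines[-1].strip() == FENCE:
            lines = lines[:-1]
        text = "\n".join(lines).strip()
    checker = TagBalanceChecker()
    checker.feed(text)
    checker.close()
    for tag in reversed(checker.stack):  # close innermost tags first
        text += f"</{tag}>"
    return text


def validate_placement(suggestion, page_element_ids):
    """Return a list of problems; an empty list means the suggestion is usable."""
    problems = []
    if suggestion.get("target_id") not in page_element_ids:
        problems.append(f"unknown target element: {suggestion.get('target_id')!r}")
    if suggestion.get("position") not in {"before", "after", "replace"}:
        problems.append(f"unsupported position: {suggestion.get('position')!r}")
    checker = TagBalanceChecker()
    checker.feed(suggestion.get("html", ""))
    checker.close()
    if checker.errors or checker.stack:
        problems.append("unbalanced HTML")
    return problems
```

In this arrangement, a suggestion is repaired first and validated second, so only errors the repair step cannot fix cause a suggestion to be rejected.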

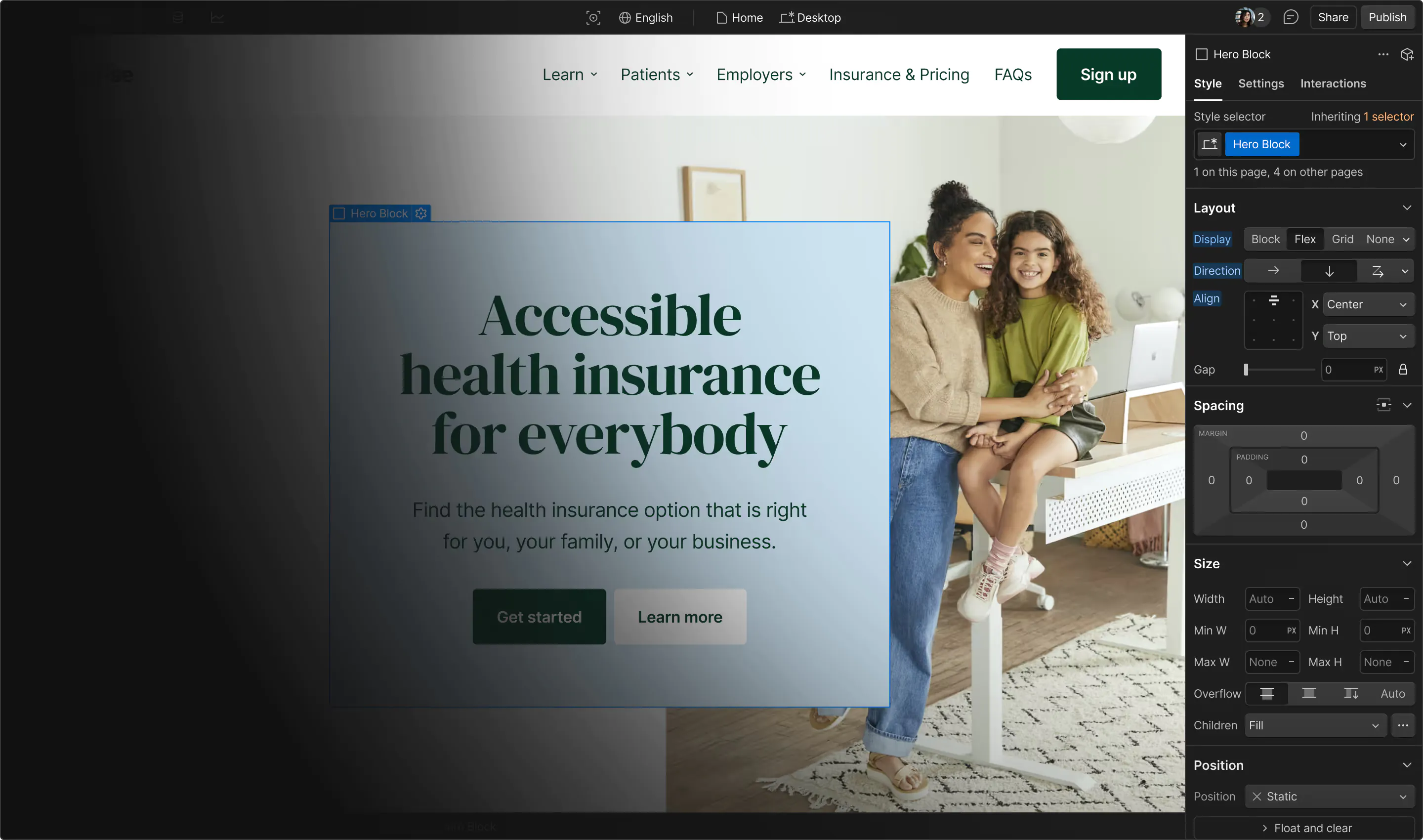

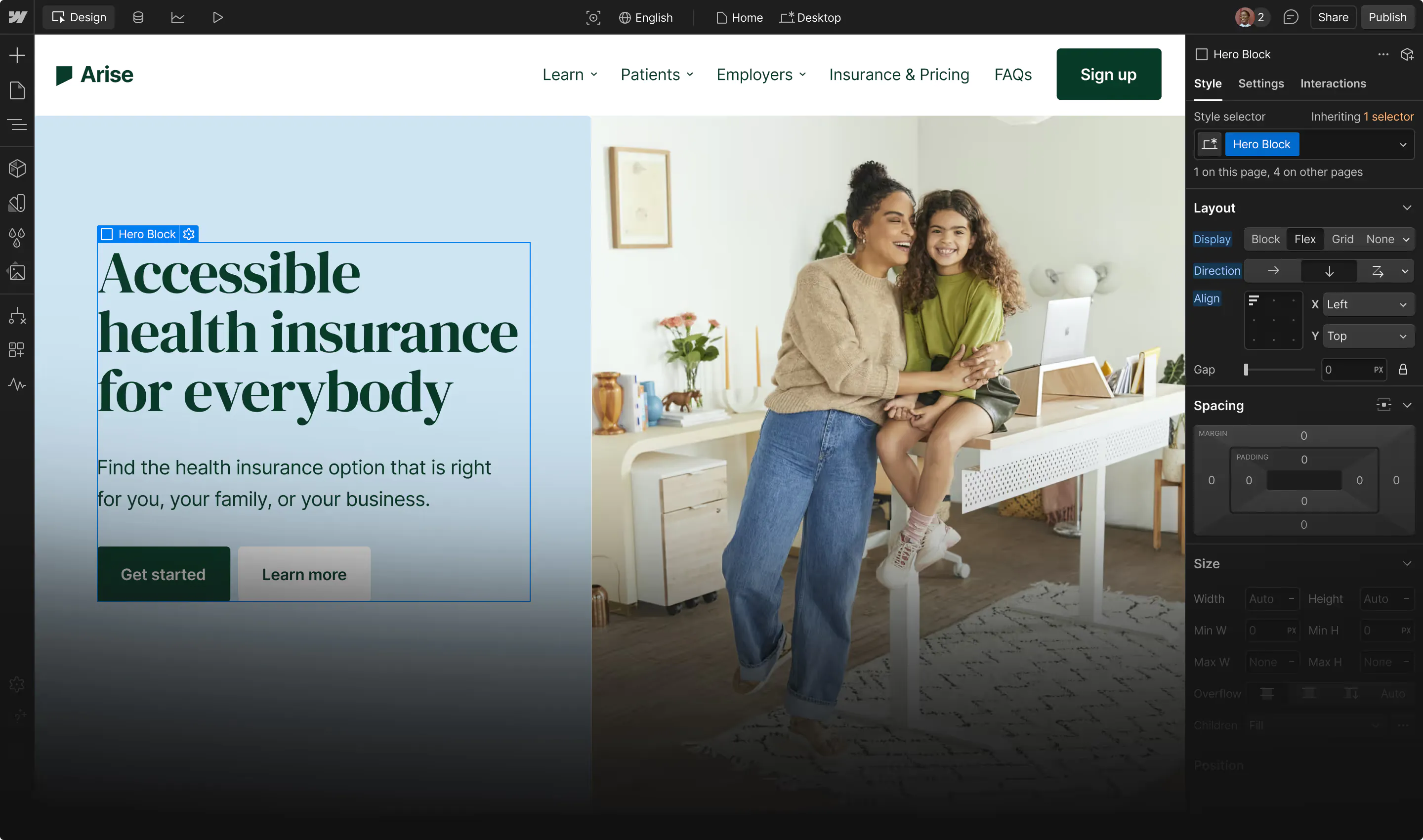

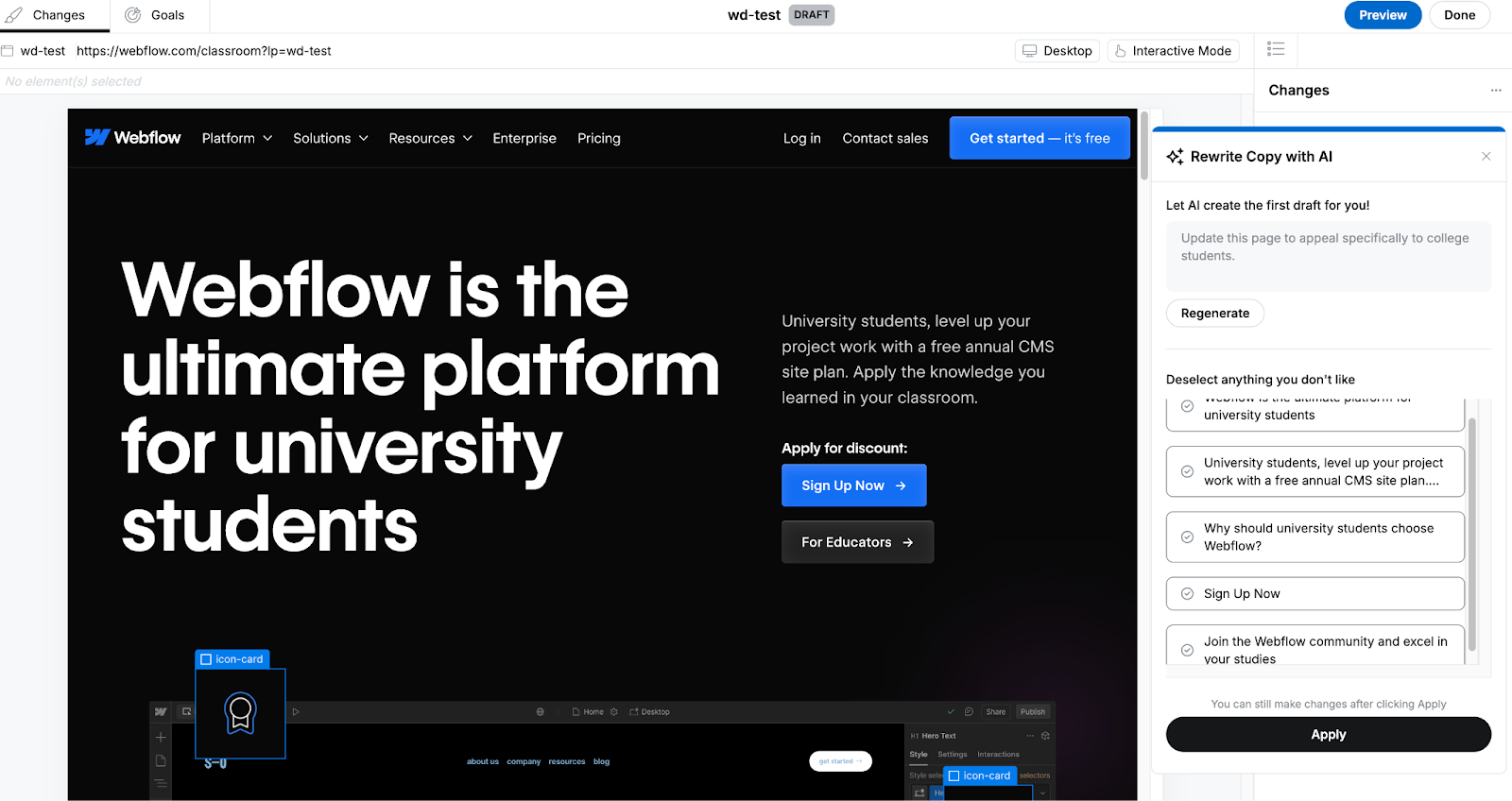

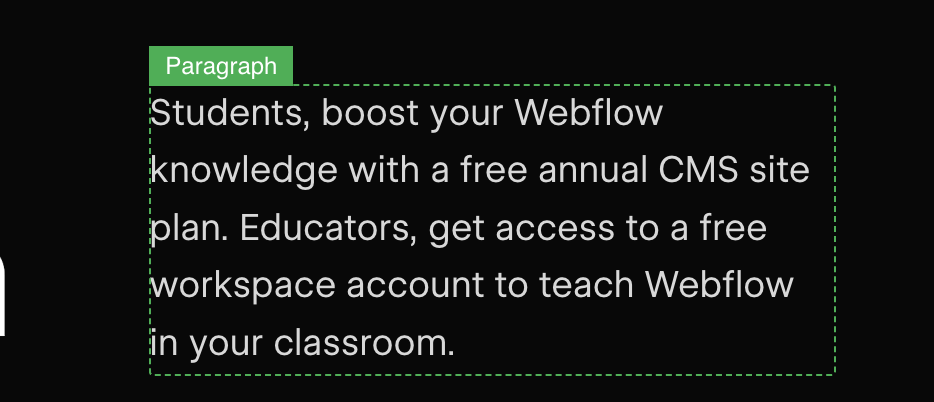

The screenshots above present an example of our solution.

We prompted the AI Landing Page Generator to create an updated webpage with the instruction: “Update this page to appeal specifically to college students.” In response, it suggested five changes in a list format.

In two of the screenshots above, the updated text explicitly refers to “university students” rather than the more generic “students.” The AI’s output was contextually relevant, aligning well with both the surrounding page content and the original request.

The AI also suggested separate updates to two headings, showing that each heading is uniquely identified.

AI-optimized tests: Engineering for dynamic traffic allocation

Traditional A/B testing splits traffic randomly across variations. This works, but it can be inefficient: poor-performing variations keep receiving traffic that could have gone to better ones. Moreover, the method assumes a static environment, but in reality a winner identified over one period might not remain the best option later.

Webflow Optimize takes a smarter approach. Using Bayesian neural networks, we dynamically allocate more traffic to better-performing variations as the test progresses. Initially, traffic is distributed evenly across all variations. But as data accumulates, the model begins to predict the probability of each variation being the best. Based on these estimations, more traffic is steered towards the stronger variations – while still continuing to explore others to avoid overconfidence. When performance trends shift, traffic allocation shifts accordingly, ensuring that emerging winners receive greater exposure.
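The allocation loop can be illustrated with a much simpler Bayesian model than the one described here. This sketch swaps the Bayesian neural network for independent Beta-Bernoulli posteriors per variation (no contextual features, no handling of drift), estimates each variation's probability of being best by Monte Carlo sampling, and splits traffic in proportion to those probabilities while reserving a minimum share so every variation keeps being explored. `prob_best`, `allocate_traffic`, and the 5% floor are illustrative assumptions.

```python
import random

# Simplified stand-in: Beta-Bernoulli posteriors instead of a Bayesian neural network.


def prob_best(stats, draws=20_000, seed=0):
    """Monte Carlo estimate of P(each variation has the highest conversion rate).

    stats maps variation name -> (conversions, visitors); each rate gets a
    Beta(1 + conversions, 1 + visitors - conversions) posterior.
    """
    rng = random.Random(seed)
    wins = dict.fromkeys(stats, 0)
    for _ in range(draws):
        sampled = {name: rng.betavariate(1 + conv, 1 + visits - conv)
                   for name, (conv, visits) in stats.items()}
        wins[max(sampled, key=sampled.get)] += 1
    return {name: count / draws for name, count in wins.items()}


def allocate_traffic(stats, min_share=0.05, **kwargs):
    """Split traffic in proportion to P(best), reserving a floor for exploration."""
    k = len(stats)
    if min_share * k > 1:
        raise ValueError("min_share is too large for this many variations")
    p_best = prob_best(stats, **kwargs)
    spare = 1 - min_share * k  # traffic left after every variation gets its floor
    return {name: min_share + spare * p for name, p in p_best.items()}
```

With no data, every posterior is Beta(1, 1), so the split starts close to even. Once one variation clearly leads (say 60 conversions versus 20 out of 1,000 visitors each), nearly all of the non-reserved traffic moves to it, while the floor keeps the trailing variation in play. Discounting older observations would be one way to let allocations follow shifting trends; this sketch does not do that.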

Characteristics of web traffic

What sets our approach apart from traditional multi-armed bandit algorithms is that it optimizes dynamically and continuously. Why does that matter so much? Because a website is never static. In practice, we see this play out in many ways. Content frequently changes to support new product launches, time-limited promotions, or event-based campaigns. The sources of incoming traffic shift regularly as ad campaigns evolve. Even traffic volume follows weekly patterns, with clear differences between weekdays and weekends.

To account for these domain-specific dynamics, we designed our training processes with several technical considerations in mind. First, we apply weighted sampling to ensure that traffic patterns are balanced over a longer time horizon. Second, we incorporate contextual features such as ad campaigns, traffic source patterns, and device types into our models, which helps us tease apart confounding factors that might otherwise skew variation performance.
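A minimal sketch of both ideas, under assumptions the post does not spell out: here, weighted sampling means giving every observed weekday the same total weight, so a traffic spike on one day doesn't dominate training, and contextual features are simply one-hot encoded. The function names and the feature vocabulary are hypothetical.

```python
import random
from collections import Counter
from datetime import date
from typing import Dict, List, Sequence

# Hypothetical weighting scheme and feature encoding, for illustration only.


def weekday_balance_weights(visit_dates: Sequence[date]) -> List[float]:
    """Per-visit weights so every observed weekday carries the same total weight."""
    counts = Counter(d.weekday() for d in visit_dates)
    total, n_days = len(visit_dates), len(counts)
    return [total / (n_days * counts[d.weekday()]) for d in visit_dates]


def balanced_sample(visits, visit_dates: Sequence[date], k: int, seed: int = 0):
    """Draw k training examples with replacement, using the weekday-balancing weights."""
    weights = weekday_balance_weights(visit_dates)
    return random.Random(seed).choices(visits, weights=weights, k=k)


def encode_context(visit: Dict[str, str], vocab: Dict[str, List[str]]) -> List[float]:
    """One-hot encode contextual features such as traffic source and device type."""
    vector: List[float] = []
    for feature, values in vocab.items():
        vector.extend(1.0 if visit.get(feature) == value else 0.0 for value in values)
    return vector
```

Feeding the encoded context into the model alongside the variation lets it attribute, for example, a conversion lift to a mobile-heavy ad campaign rather than to the variation itself.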

Why Bayesian neural networks?

Bayesian neural networks (or neural networks trained through Bayesian methods) are a well-known framework with extensive literature and broad applications [reference 1, reference 2]. They offer two key advantages that make them especially suitable for our setting: quantifying uncertainty and preventing overfitting.

Quantifying uncertainty helps the system balance exploration (trying all variations) with exploitation (focusing on strong candidates). Preventing overfitting is particularly useful in domains where data is scarce. While web traffic may seem large, the number of samples for any specific combination of feature values is often quite limited.
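A Beta-Bernoulli conversion-rate model is the simplest place to see both advantages at work; a Bayesian neural network applies the same idea through priors on its weights. In the hypothetical numbers below, a sparse feature combination (2 conversions out of 3 visits) and a well-populated one (200 out of 3,000) share a prior centered on a 5% conversion rate. This is an analogy, not the model Webflow uses.

```python
import math


def beta_posterior(conversions, visits, prior_a=1.0, prior_b=1.0):
    """Posterior mean and standard deviation of a conversion rate under a Beta prior."""
    a = prior_a + conversions
    b = prior_b + (visits - conversions)
    mean = a / (a + b)
    std = math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))
    return mean, std


# Hypothetical prior Beta(2, 38): mean 2 / 40 = 5%.
sparse_mean, sparse_std = beta_posterior(2, 3, prior_a=2, prior_b=38)
dense_mean, dense_std = beta_posterior(200, 3000, prior_a=2, prior_b=38)
```

The sparse combination's estimate stays near the prior (about 9% rather than the raw 67%), which is the regularizing effect, and its posterior standard deviation is roughly ten times larger, which is the uncertainty an allocation policy can use to decide how much to keep exploring.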

Taken together, these benefits make Bayesian neural networks particularly well-suited to the volatile nature of web traffic, especially when models must account for many interacting and impactful features.

Training

Whenever you train a neural network model with a large number of samples, efficiency is a major concern. Here are some tactics that we use in our training pipeline to boost efficiency:

To accelerate training, we use Apache Spark on AWS EMR (Elastic MapReduce) for efficient and scalable feature preparation. AWS EMR dynamically scales compute resources, enabling us to process large datasets quickly and cost-effectively. Once the features are prepared, we leverage AWS Batch for TensorFlow model training, allowing us to run up to 10,000 training jobs in parallel for individual customers.
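For the per-customer fan-out, a sketch of building one AWS Batch job request per customer could look like this. The request keys (`jobName`, `jobQueue`, `jobDefinition`, `containerOverrides`) follow the AWS Batch `SubmitJob` API; the queue name, job definition, `FEATURES_URI` environment variable, and the one-job-per-customer layout are assumptions for illustration. The submission call is left as a comment so the sketch runs without AWS credentials.

```python
import re

# Hypothetical job layout: names and environment variables are illustrative.


def build_training_jobs(customer_ids, job_queue, job_definition, features_prefix):
    """Build one AWS Batch submit_job request per customer."""
    requests = []
    for cid in customer_ids:
        # Job names allow letters, digits, hyphens, and underscores (up to 128 chars).
        safe_id = re.sub(r"[^A-Za-z0-9_-]", "-", str(cid))
        requests.append({
            "jobName": f"train-{safe_id}"[:128],
            "jobQueue": job_queue,
            "jobDefinition": job_definition,
            "containerOverrides": {
                "environment": [
                    {"name": "CUSTOMER_ID", "value": str(cid)},
                    {"name": "FEATURES_URI", "value": f"{features_prefix}/{cid}/"},
                ]
            },
        })
    return requests


# To submit (requires boto3 and AWS credentials):
#   import boto3
#   batch = boto3.client("batch")
#   for request in build_training_jobs(ids, "training-queue", "bnn-train:1", "s3://bucket/features"):
#       batch.submit_job(**request)
```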

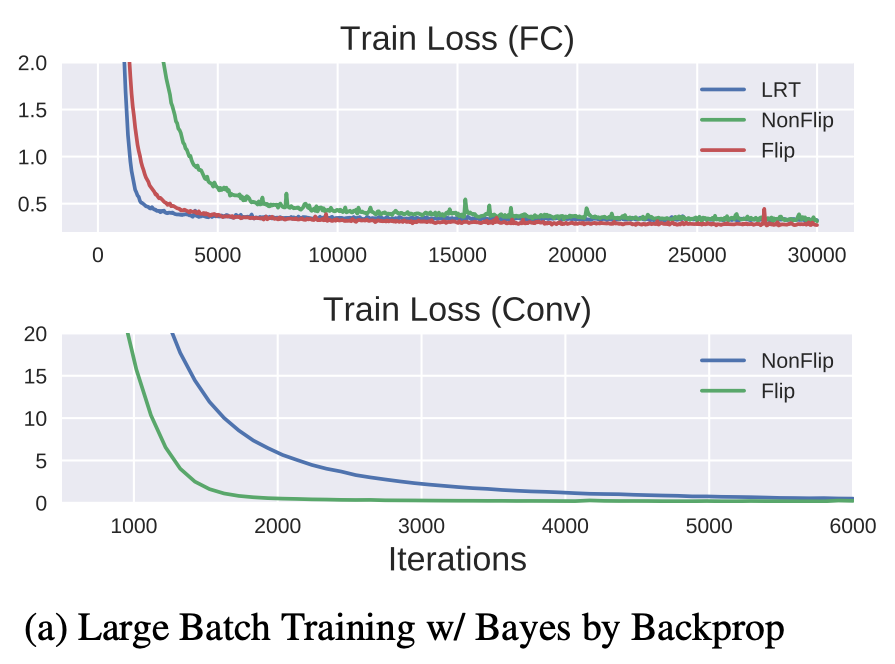

In training a Bayesian neural network, we need to sample the weights from their estimated distributions. Drawing an independent weight sample for every training example would be prohibitively expensive. The Flipout estimator [reference 3] makes this efficient: it samples one weight perturbation per mini-batch and multiplies it by cheap, per-example random sign flips, so that each example effectively sees its own pseudo-independent weight sample. That’s what we used in training.
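The identity behind Flipout fits in a few lines of plain Python. This is an illustrative sketch, not Webflow's code or TensorFlow Probability's implementation (TFP provides Flipout layers such as `tfp.layers.DenseFlipout`; the post does not say whether those are used). For a dense layer with mean weights `W_mean` and one shared perturbation `delta_W` per mini-batch, each example gets random sign vectors `s` (inputs) and `r` (outputs), and its output is `y = x·W_mean + ((x * s)·delta_W) * r`. That equals multiplying `x` by its own weight matrix `W_mean + delta_W * (s rᵀ)`, without building that matrix.

```python
import random

# Illustrative Flipout forward pass for a dense layer (no bias, pure Python).


def matvec(x, w):
    """Row vector x (length n_in) times matrix w (n_in x n_out)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def flipout_example(x, w_mean, delta_w, s, r):
    """y = x W_mean + ((x * s) delta_W) * r, with * elementwise, for one example."""
    mean_part = matvec(x, w_mean)
    noise_part = matvec([xi * si for xi, si in zip(x, s)], delta_w)
    return [m + n * rj for m, n, rj in zip(mean_part, noise_part, r)]


def flipout_dense(xs, w_mean, w_sigma, rng=None):
    """Forward a mini-batch with Gaussian weight noise, decorrelated by Flipout."""
    rng = rng or random.Random()
    n_in, n_out = len(w_mean), len(w_mean[0])
    # One shared perturbation for the whole mini-batch.
    delta_w = [[w_sigma[i][j] * rng.gauss(0.0, 1.0) for j in range(n_out)] for i in range(n_in)]
    ys = []
    for x in xs:
        # Cheap per-example random signs make the shared perturbation look independent.
        s = [rng.choice((-1.0, 1.0)) for _ in range(n_in)]
        r = [rng.choice((-1.0, 1.0)) for _ in range(n_out)]
        ys.append(flipout_example(x, w_mean, delta_w, s, r))
    return ys
```

The trick relies on the perturbation distribution being symmetric around zero, which holds for the Gaussian noise used here.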

When we examined memory usage during training, we found that it grew steadily as training batches progressed, which indicated a memory leak. The root cause was a bug in TensorFlow’s tf.py_function that does not affect tf.numpy_function [reference 4], so we refactored our code to use tf.numpy_function where needed.
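The fix itself amounts to swapping `tf.py_function` for `tf.numpy_function`, which take the same `(func, inp, Tout)` arguments, although the wrapped function then receives NumPy arrays instead of eager tensors. The detection step is easy to automate. This hypothetical helper, not Webflow's tooling, fits a least-squares slope to memory readings taken after each batch (collected, for example, from `psutil.Process().memory_info().rss`) and flags steady growth once a warm-up period has passed; the thresholds are illustrative.

```python
# Hypothetical leak check: thresholds and warm-up length are illustrative.


def memory_slope(samples):
    """Least-squares slope of memory usage (e.g. MB) per training step."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    cov = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(samples))
    var = sum((i - mean_x) ** 2 for i in range(n))
    return cov / var


def looks_like_leak(samples, warmup=5, max_growth_per_step=0.5):
    """Flag steady growth after warm-up, when caches and allocators have settled."""
    steady = samples[warmup:]
    return len(steady) >= 2 and memory_slope(steady) > max_growth_per_step
```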

In general, training a neural network involves a great deal of detailed tuning – not only for the model’s performance, but also for training efficiency. There’s no shortcut; you must test extensively.

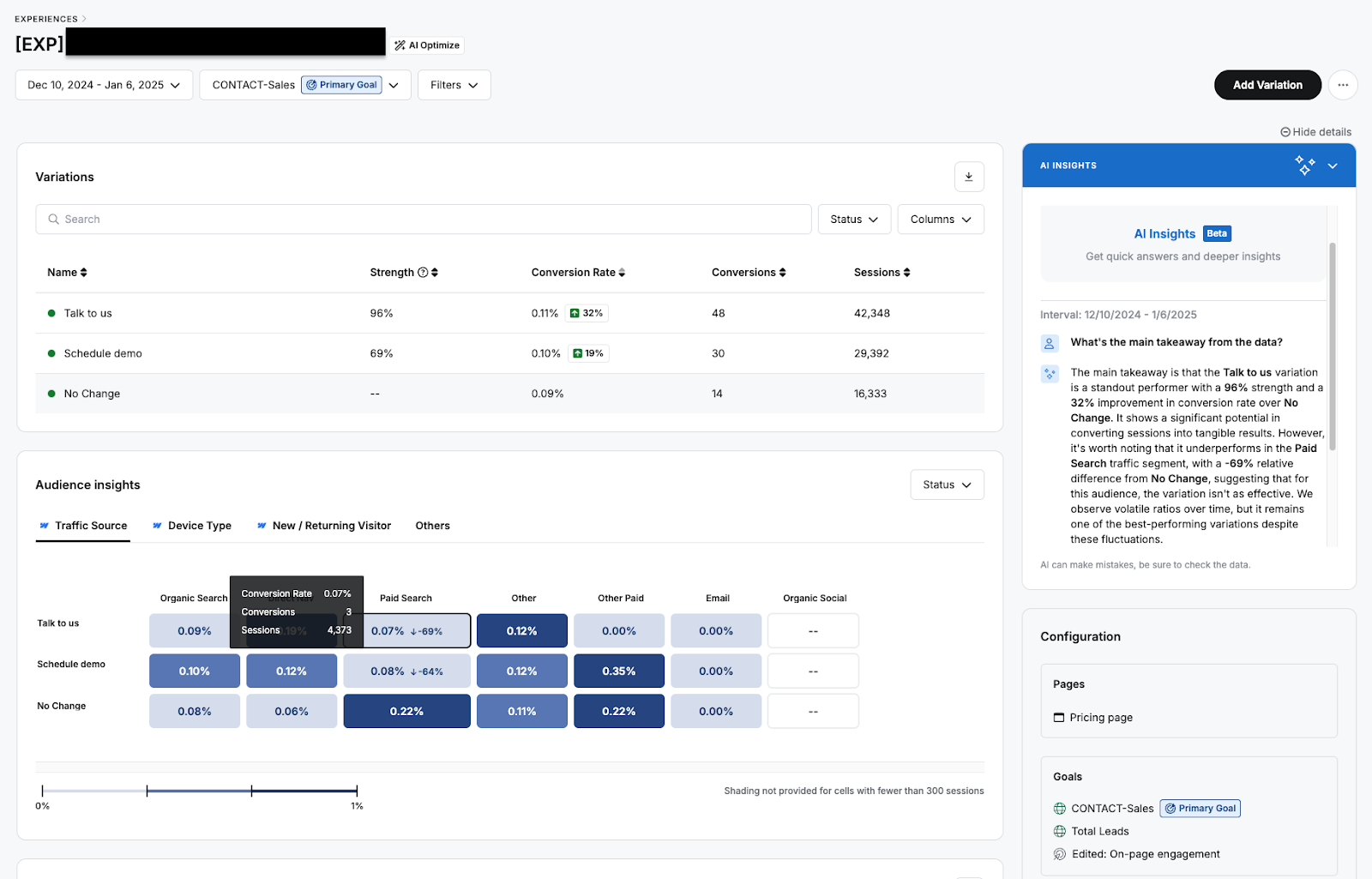

AI insights: Turning data into insights

Running tests is one thing; interpreting the results is another. Experiment results often include complex metrics and a great deal of information across many dimensions, making it difficult to identify clear, actionable insights.

To simplify this, we built the AI Insights feature, which uses LLMs to analyze test outcomes. This LLM-powered tool highlights key patterns, answers questions, and summarizes the experiment results, saving teams time and effort.

Using LLMs

From an engineering perspective, this feature posed challenges similar to those in AI suggestions, though with different details. These included:

- Context management: LLMs need a structured understanding of the experiment data to provide accurate answers.

- Performance optimization: Reading and synthesizing large datasets is challenging.

- Professional jargon: The insights should be in language that marketers understand.

To address these issues, we adopted several engineering strategies. AI Insights is built on assistant and thread APIs and follows a chat format, with the conversation history maintained in threads. Since the UI alone doesn’t carry all the necessary background information, we equip the assistant with product “manuals” by placing them in its system context, enabling more grounded and relevant responses.
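A minimal sketch of that structure, assuming a chat-style message list with `system`, `user`, and `assistant` roles. With a hosted assistant and thread API, the provider stores the history server-side; this local `InsightsThread` class is a hypothetical stand-in that only shows how the manuals and the history fit together.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical preamble and class: not a real provider API.
SYSTEM_PREAMBLE = "You analyze website experiment results for marketers."


@dataclass
class InsightsThread:
    """Local stand-in for a provider-managed conversation thread."""
    manuals: List[str]
    history: List[Dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.history.append({"role": role, "content": content})

    def build_messages(self) -> List[Dict[str, str]]:
        # The product "manuals" ride along in the system context on every turn,
        # because the UI alone does not carry this background information.
        system = "\n\n".join([SYSTEM_PREAMBLE, *self.manuals])
        return [{"role": "system", "content": system}, *self.history]
```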

Instead of trying to load and summarize all data at once – which is infeasible at scale – we take a multi-stage approach. When a session begins, the assistant preloads information shown in the default dashboard view. To enrich the initial response, we also preload several frequently referenced stats, even if they are hidden in the default view.
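The first stage could look like this sketch, which starts from the default dashboard stats and adds frequently referenced hidden stats until a size budget is reached, leaving everything else to later tool calls. Measuring the budget in JSON characters is a rough stand-in for token counting, and all names are hypothetical.

```python
import json

# Hypothetical preload step; the character budget approximates a token budget.


def initial_context(dashboard_stats, all_stats, frequently_referenced, max_chars=4000):
    """Stage one of a multi-stage load: default view plus popular hidden stats."""
    context = dict(dashboard_stats)
    for name in frequently_referenced:
        if name not in all_stats or name in context:
            continue
        candidate = {**context, name: all_stats[name]}
        if len(json.dumps(candidate)) > max_chars:
            break  # leave the rest to on-demand tool calls
        context = candidate
    return context
```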

As the conversation continues, the assistant can dynamically fetch additional statistics through function tool calls. These stats are the same ones surfaced through our server APIs, but we expose a simplified version specifically for the LLM. This design helps reduce token usage and makes the data easier for the model to interpret, given its limited visibility into our internal systems.
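A sketch of one such tool, assuming an OpenAI-style function-calling schema in which the model sends its arguments as a JSON string. The tool name, segment values, and raw field names are hypothetical; `simplify_stats` shows the kind of reduction described above: dropping internal fields, renaming keys, and rounding values so the model reads fewer, clearer tokens.

```python
import json

# Hypothetical tool definition and field names, for illustration only.
GET_SEGMENT_STATS_TOOL = {
    "type": "function",
    "function": {
        "name": "get_segment_stats",
        "description": "Per-variation results for one audience segment of the current test.",
        "parameters": {
            "type": "object",
            "properties": {
                "segment": {"type": "string", "enum": ["device", "traffic_source", "country"]},
            },
            "required": ["segment"],
        },
    },
}


def simplify_stats(raw):
    """Keep only the fields the model needs, with readable names and rounded values."""
    visitors = raw["visitor_count"]
    return {
        "variation": raw["variation_name"],
        "visitors": visitors,
        "conversion_rate_pct": round(100 * raw["conversions"] / max(visitors, 1), 2),
        "prob_best_pct": round(100 * raw["p_best"], 1),
    }


def handle_tool_call(name, arguments_json, fetch_raw_stats):
    """Dispatch a model tool call to server-side stats and return compact JSON."""
    if name != GET_SEGMENT_STATS_TOOL["function"]["name"]:
        return json.dumps({"error": f"unknown tool: {name}"})
    args = json.loads(arguments_json)
    rows = fetch_raw_stats(args["segment"])
    return json.dumps([simplify_stats(row) for row in rows])
```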

To round out the experience, we carefully prompt the assistant to communicate in language that resonates with marketers, making technical insights easier to understand and act on.

Evaluations

Robust evaluation is critical for any LLM application, and we’ve built a multi-layered system to assess quality and guide improvements.

We begin with tool-level accuracy, where it’s straightforward to determine whether the assistant triggered the correct functions. On top of that, we use a custom “LLM judge” – an assistant prompted to act like a savvy marketer – to provide automated quality assessments of generated responses.
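Tool-level checks can be plain comparisons over logged tool calls, and the judge is just another prompt. In this sketch, the eval-case format, the 1-to-5 scale, and the rubric wording are illustrative assumptions, not Webflow's actual judge.

```python
# Hypothetical eval harness and judge prompt.


def tool_call_accuracy(cases):
    """Share of eval cases where the assistant called exactly the expected tools (order ignored)."""
    if not cases:
        return 0.0
    hits = sum(sorted(c["expected"]) == sorted(c["actual"]) for c in cases)
    return hits / len(cases)


JUDGE_TEMPLATE = """You are an experienced growth marketer reviewing an analytics assistant.
Question: {question}
Answer: {answer}
Rate the answer from 1 to 5 for accuracy, clarity for marketers, and actionability.
Reply with JSON: {{"score": <1-5>, "reason": "<one sentence>"}}"""


def judge_prompt(question, answer):
    """Fill the judge template; doubled braces above render as literal JSON braces."""
    return JUDGE_TEMPLATE.format(question=question, answer=answer)
```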

Finally, we incorporate human feedback. Internal reviewers from across the company regularly evaluate outputs, providing scores and comments that help calibrate both the assistant and the automated judge. Post-launch, we continue improving through real-world signals: customer feedback helps us evolve both the product and the evaluation criteria, including how the LLM judge itself is tuned.
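One way to use human scores to calibrate the automated judge is to track how closely the two agree on the same responses. This sketch, with hypothetical names and tolerance, reports the Pearson correlation and the share of items where the judge lands within one point of the human reviewer.

```python
import math

# Hypothetical calibration check between LLM-judge and human scores.


def pearson(xs, ys):
    """Pearson correlation of two equal-length score lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("scores have zero variance")
    return cov / (sx * sy)


def judge_human_agreement(judge_scores, human_scores, tolerance=1):
    """Correlation and share of items where judge and human differ by at most `tolerance`."""
    within = sum(abs(j - h) <= tolerance for j, h in zip(judge_scores, human_scores))
    return {
        "pearson": pearson(judge_scores, human_scores),
        "within_tolerance": within / len(human_scores),
    }
```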

UI/UX

We also want to emphasize the critical importance of UI/UX design. Effective UX techniques are pivotal in addressing common challenges associated with using LLMs, such as lengthy response times for data analysis, inconsistent analysis quality, and limited user engagement. The use of conversational dialogs – rather than static summary reports – combined with suggested initial and follow-up questions, is particularly impactful.

This approach not only mitigates the challenges mentioned above but also delivers a significantly more interactive user experience. It feels like consulting an expert who guides the user to iteratively refine their questions for deeper, more precise insights.

Engineering for speed, scalability, and accuracy

At the heart of Webflow Optimize is a deliberate focus on handling massive data loads in real time without compromising the quality of insights. We achieve this by using generative AI to inspire new test ideas, Bayesian neural networks to dynamically adjust traffic distribution, and LLMs to transform raw metrics into clear recommendations. Together, these technologies empower marketers to optimize their websites quickly and effectively, all while supporting tens of thousands of tests across diverse traffic patterns.

Looking ahead

Webflow Optimize is just getting started. As AI technology evolves, our goal is to continue building tools that empower teams to optimize websites effortlessly, making every visitor interaction count. Behind every AI-powered tool lies a series of engineering challenges: balancing speed with scalability, inspiration with accuracy, and creativity with structure. In Webflow Optimize, we’ve brought together proprietary models and LLMs to deliver a robust solution for website optimization. We will continue training our own models and leveraging state-of-the-art multimodal models alongside agentic frameworks.

References

We’re hiring!

We’re looking for product and engineering talent to join us on our mission to bring development superpowers to everyone.