"Performance is a feature" is a common refrain on the engineering team at Webflow.

So when Dashboard loading times started to climb a few months ago (we saw P95s as high as 16.24s), we started brainstorming ways to improve this slow experience for our users. We agreed on an ambitious goal: to reduce initial load times by at least 20%.

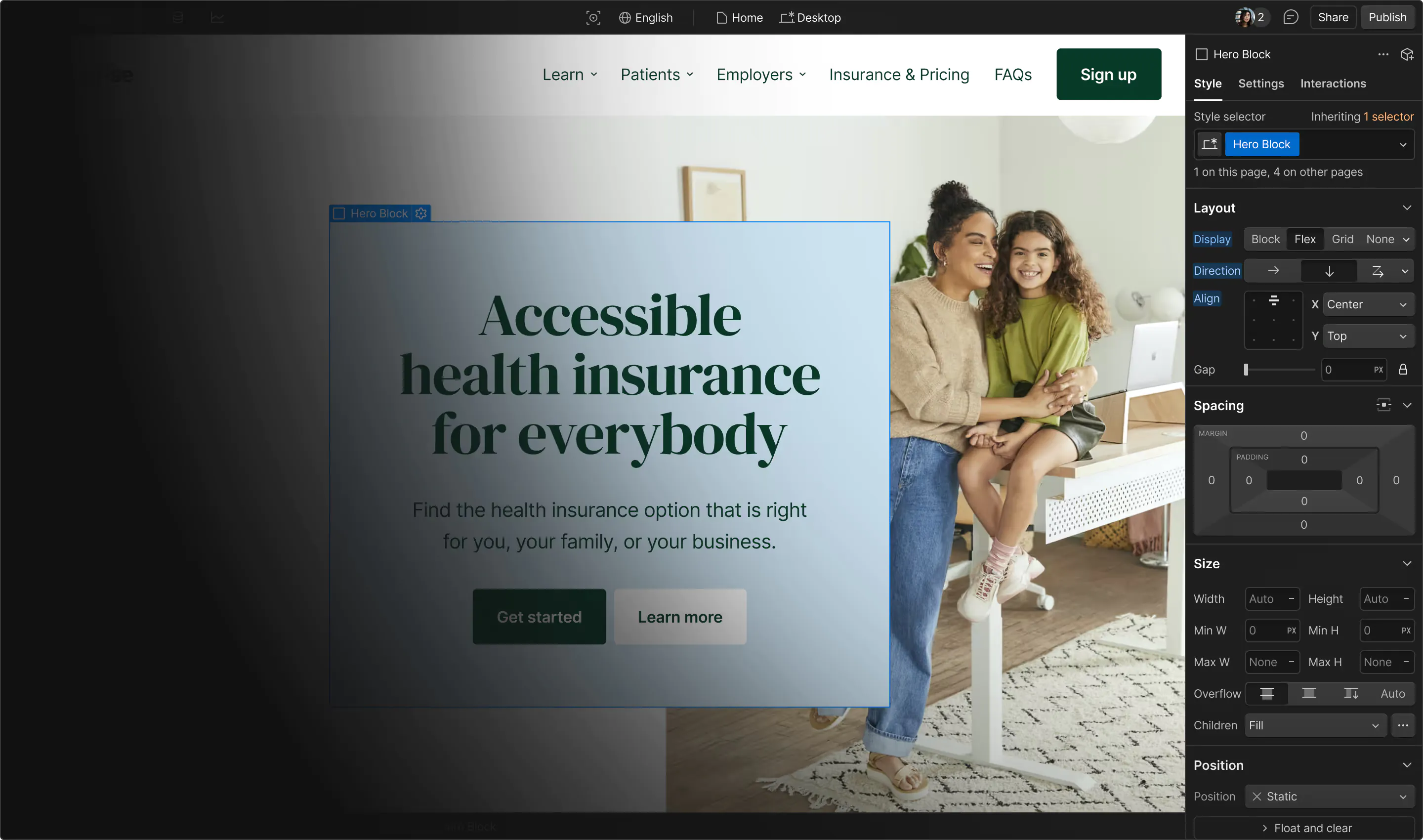

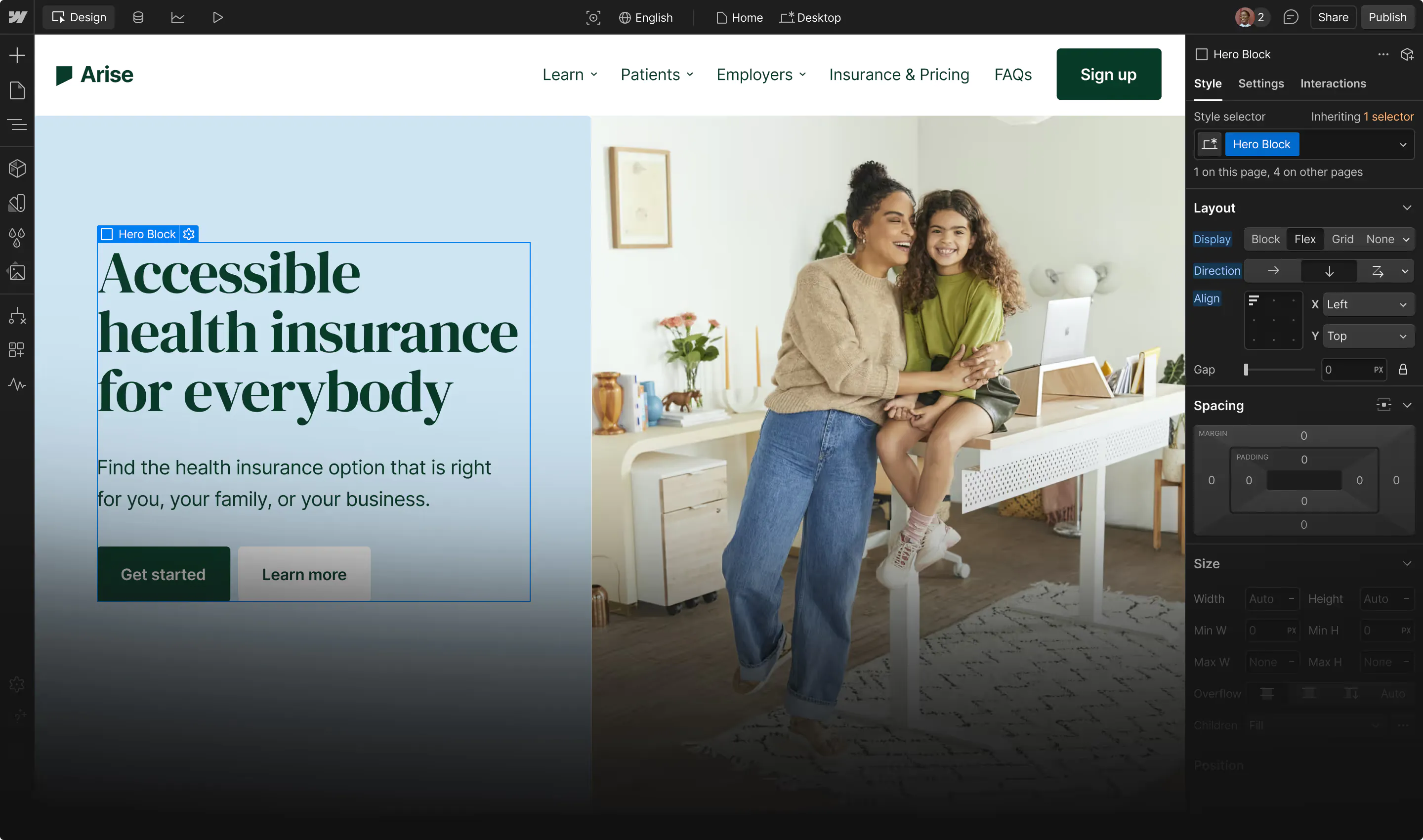

After some investigation, we decided that the highest-leverage change would come from moving off our client-based SPA architecture (based on React Router) and onto a server-side rendered solution (we chose NextJS for its large feature set and frequent updates). We reasoned that a server-rendered application would give us more fully rendered HTML on our initial request and decrease the number of client-side API requests. It would also package critical data with the initial rendering of the Dashboard, enabling the user to interact with the page sooner.

But large migrations are difficult. We wanted to make sure that any migration met the following requirements:

1. We had to be able to migrate page routes incrementally

2. We wanted (the majority of) Dashboard developers to be able to write code without having to know the details of this migration

Requirement 1 meant we had to direct traffic between the two versions of the Dashboard on a route-by-route basis, and requirement 2 meant we had to refactor framework-specific APIs into higher-order components that could be removed once we had migrated to NextJS.

Migrating routes individually

One of Webflow’s recent infrastructure changes enabled us to work at a higher level of abstraction without much development effort: turning Webflow’s monolith-type architecture into a series of smaller applications within the context of the webflow.com codebase. With this setup, we were able to run the NextJS instance as its own application, while sharing existing components from the current app and using the nginx reverse-proxy directive to direct traffic to the client- or server-side application on a route-by-route basis. An early nginx template looked like this:
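As a rough model of that routing decision (written in TypeScript rather than nginx configuration, with route prefixes and upstream names that are purely hypothetical and not Webflow's actual values), the rule amounts to a prefix check in front of two applications:

```typescript
// Hypothetical upstream names; stand-ins for the two running applications.
type Upstream = "next-dashboard" | "legacy-dashboard";

// Hypothetical list of route prefixes that have already been migrated.
const MIGRATED_PREFIXES: readonly string[] = [
  "/dashboard/sites",
  "/dashboard/account",
];

// Send a request to the server-rendered app only if its path falls under a
// migrated prefix; everything else keeps going to the existing client app.
function pickUpstream(
  pathname: string,
  migrated: readonly string[] = MIGRATED_PREFIXES,
): Upstream {
  const isMigrated = migrated.some(
    (prefix) => pathname === prefix || pathname.startsWith(prefix + "/"),
  );
  return isMigrated ? "next-dashboard" : "legacy-dashboard";
}
```

In this model, adding a route to the migration is a one-line change to the prefix list, and removing it sends that route's traffic back to the existing application without touching application code.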

As the migration progressed, we extended this rule to cover more routes. If a route started showing elevated error rates, we could easily revert the configuration change to divert traffic back to the React application while we troubleshot the root cause.

Keeping route changes independent of the application code maintained clarity and required one fewer fork in the application code itself. Engineers unfamiliar with the re-architecture project could, for the most part, continue working on the existing application unimpeded. In general, the more forks people encounter in code, the more potential outcomes they have to hold in their memory. By reducing mental load, we made our engineers more productive and less error-prone.

Migrating shared components

We had two primary concerns for the components shared between the server-side rendered application and the existing client application: ensuring components did not try to access browser-based APIs when being rendered on the server, and managing the different methods that NextJS and React Router use for accessing browser-based objects. To minimize complexity, we introduced a server-side detection function, a new React Context that allowed any React component to determine what framework it was running in, and a series of custom hooks that utilize this new React Context.

Server-side detection function

First, we added a utility function that keeps us from creating errors in the application when we need to execute code that depends on the server or client environment:
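A minimal sketch of such a helper, assuming the usual approach of checking whether a global `window` exists. The optional `scope` parameter is an addition for this sketch so the check can be exercised outside a browser; only the name `isServerSide` comes from the post.

```typescript
// Returns true when no `window` global is present, which in practice means
// the code is running on the server rather than in a browser.
// `scope` defaults to the real global object; passing a stand-in object is
// a testing convenience assumed for this sketch.
function isServerSide(scope: object = globalThis): boolean {
  return !("window" in scope);
}
```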

For example, we have a different set of data retrieval URLs for server-side and client-side requests, and we use the isServerSide function to tell us which set of URLs to use:
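A sketch of what that selection might look like. The base URLs and the `apiUrl` helper are hypothetical placeholders, and `isServerSide` is re-declared here (under the same `window`-check assumption) so the block stands alone.

```typescript
// Hypothetical base URLs; the real values are not given in the post.
const API_BASE_URLS = {
  server: "http://internal-api.example.local",
  client: "/api",
} as const;

// Assumed implementation: no `window` global means we are on the server.
function isServerSide(scope: object = globalThis): boolean {
  return !("window" in scope);
}

// Build a request URL from the set that matches the current environment.
// `serverSide` can be passed explicitly, which keeps the helper easy to test.
function apiUrl(path: string, serverSide: boolean = isServerSide()): string {
  const base = serverSide ? API_BASE_URLS.server : API_BASE_URLS.client;
  return `${base}${path}`;
}
```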

There are also cases where we want to use the window object, and the same check keeps the NextJS app from trying to do so on the server and erroring out:
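One way to express that guard, as a sketch: wrap the browser-only read in a callback and return a fallback on the server. The `onClient` helper is hypothetical, and `isServerSide` is re-declared under the same `window`-check assumption.

```typescript
// Assumed implementation: no `window` global means we are on the server.
function isServerSide(scope: object = globalThis): boolean {
  return !("window" in scope);
}

// Run `read` only when a browser environment is available; otherwise return
// `fallback`. Because `read` is never invoked on the server, any `window`
// access inside it cannot throw during server rendering.
function onClient<T>(read: () => T, fallback: T, scope: object = globalThis): T {
  return isServerSide(scope) ? fallback : read();
}
```

A component could then call something like `onClient(() => window.innerWidth, 0)` and get `0` while rendering on the server.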

Framework context and custom hooks

A thornier issue is distinguishing between the NextJS and existing application when retrieving pathname and query parameters on the client. Both React Router and NextJS have hooks for this type of data, and those hooks break the page when called within the wrong app context. We took advantage of React.createContext and made custom hooks which read the context and execute the appropriate React Router functionality or NextJS functionality:
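The dispatch pattern can be sketched as follows. To keep the example runnable without React installed, the context read and the two framework hooks are passed in as parameters; in a real app they would plausibly be `useContext` on a context created with `React.createContext`, a wrapper around Next's `useRouter`, and a wrapper around React Router's `useLocation`. All names here are hypothetical.

```typescript
// The two frameworks a shared component might be rendered under.
type Framework = "next" | "react-router";

// Injected dependencies (hypothetical names). In a real app:
//   useFramework           -> reads the framework from a React Context
//   useNextPathname        -> wraps the NextJS router hook
//   useReactRouterPathname -> wraps the React Router location hook
interface RouterHooks {
  useFramework: () => Framework;
  useNextPathname: () => string;
  useReactRouterPathname: () => string;
}

// Build a framework-agnostic pathname hook. Only the hook belonging to the
// active framework is ever called, so the other one never runs in the wrong
// app. The framework does not change during an app's lifetime, so the same
// branch is taken on every render and hook call order stays stable.
function createUsePathname(hooks: RouterHooks): () => string {
  return function usePathname(): string {
    return hooks.useFramework() === "next"
      ? hooks.useNextPathname()
      : hooks.useReactRouterPathname();
  };
}
```

Shared components then call the single generated hook and never import either router directly, which is what lets the same component render in both applications.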

Performance results and next steps

Across the three major routes we’ve had in production so far, we’ve seen significant improvement on average, including a 19% decrease in P95 times for initial load, close to our initial goal of a 20% reduction.

Big swings in the size of the improvement (e.g. one page showing an almost 50% decrease in P95 and another showing a 15% decrease) indicate that there is more work to be done tuning individual pages, but we now have increased control over how data and components are loaded in the server / client lifecycle.

We are continuing our effort by moving more pages and their data to loading on the server, and identifying more components that can be rendered on the server instead of the client. Users can expect the migrated pages to respond more quickly on the initial page load and to feel smoother as their browser handles less of the responsibility for the initial render.

We’re hiring!

We’re looking for product and engineering talent to join us on our mission to bring development superpowers to everyone.