Large language models make it easy to build AI features. They have captured our shared imagination for what we can do with AI, in part because publicly available APIs from OpenAI, Anthropic, and others make it so easy to build applications on top of them.

While the publicly available APIs used to access LLMs present a fairly run-of-the-mill workflow for developers, the simplicity of calling an API and handling its response hides the true complexity of the problem. When building features on top of large language models, you’ll mostly be working at the edges of the model’s capabilities. Doing this effectively requires skills that most software engineers haven’t had to develop yet, including skills from related but fundamentally different disciplines such as data science.

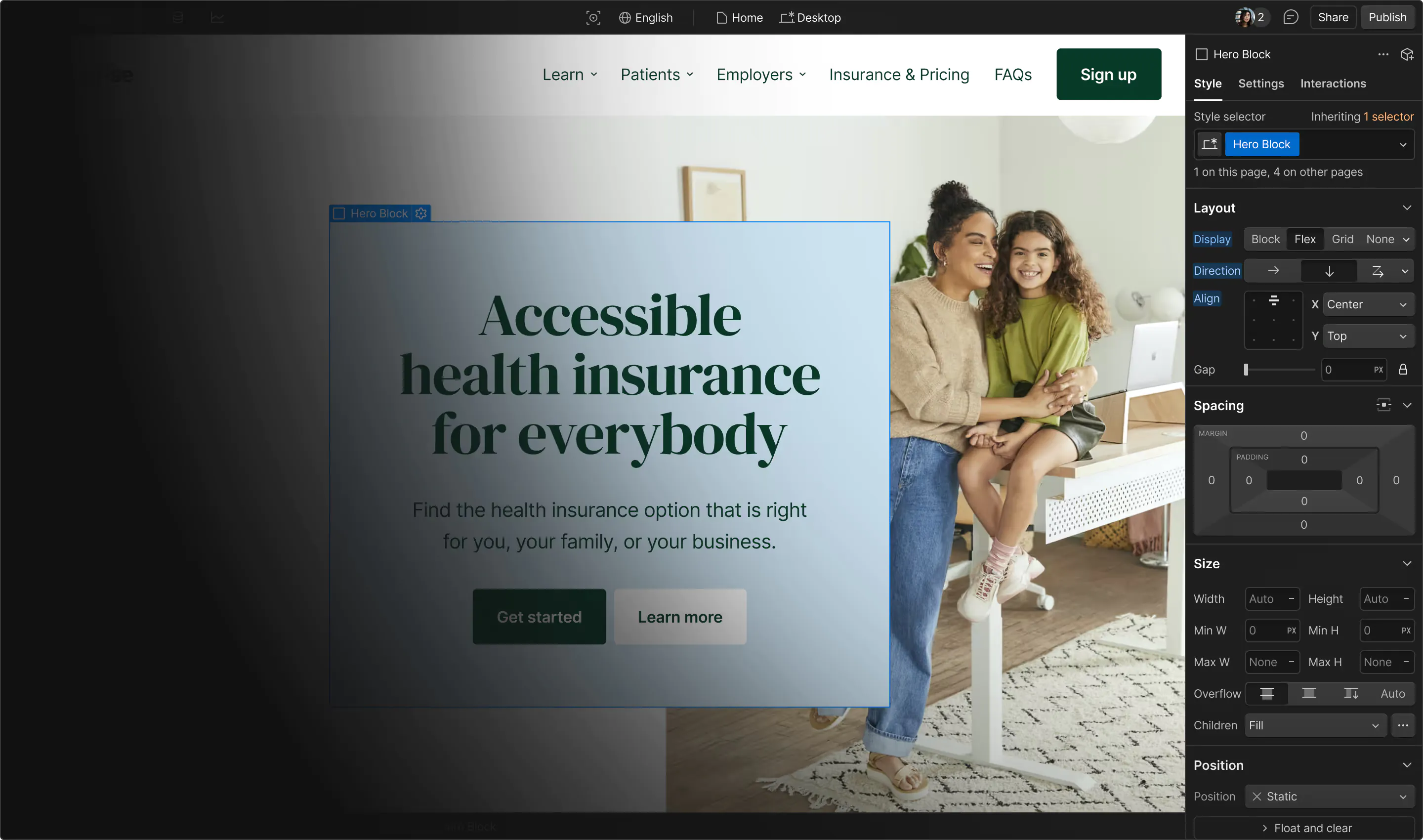

Webflow’s teams working on AI products have run into this gap while building Webflow AI. Our LLM pipelines are complex and have many points where they can fail. For example, some features chain multiple model calls together, and many require strictly defined output formats so that the response can trigger logic in our system that accomplishes work on behalf of the user. Understanding how the system performs, and why it fails to produce a high-quality result, is a significant challenge for our team.

The challenge stems from the fact that the model’s response is probabilistic, not deterministic. As a consequence, we still need our typical unit and integration tests to verify that our system correctly handles a predefined set of responses, but we also need a different category of tests, one that programmers aren’t used to maintaining or integrating into their workflows. This is where model evaluations come in. In this post I’ll cover what model evaluations are and how we use them at Webflow.

Understanding evaluations

Model evaluations are tests that run a large number of predefined inputs through your system and include a mechanism for grading the quality of each output. We use many inputs because we aren’t trying to prove that the system works correctly; instead, we measure either the probability that a response passes or the average quality of the responses. In short, we move from a binary measure (the test passed or failed) to a probabilistic one. This mode of testing is conceptually different from the tools developers typically use to maintain systems, such as unit, integration, and end-to-end tests. Model evaluations come from data science, where practitioners evaluate how well a model performs across a wide range of tasks and use that information to decide how to change the model.
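As a rough illustration of that shift, here is a minimal Python sketch of an evaluation harness. It assumes the system under test and the grader can each be wrapped as a plain function (`run_eval`, `toy_system`, and `toy_grade` are hypothetical names, not Webflow code) and reports both measures described above: the pass rate and the mean score.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Sequence


@dataclass
class EvalResult:
    pass_rate: float   # fraction of outputs whose score met the threshold
    mean_score: float  # average quality score across all outputs


def run_eval(
    inputs: Sequence[str],
    system: Callable[[str], str],        # hypothetical: the LLM pipeline under test
    grade: Callable[[str, str], float],  # hypothetical: scores (input, output) in [0, 1]
    pass_threshold: float = 1.0,
) -> EvalResult:
    """Run every predefined input through the system and aggregate the grades."""
    if not inputs:
        raise ValueError("an evaluation needs at least one input")
    scores = [grade(prompt, system(prompt)) for prompt in inputs]
    passed = sum(1 for s in scores if s >= pass_threshold)
    return EvalResult(pass_rate=passed / len(scores), mean_score=mean(scores))


# Toy stand-ins so the sketch runs offline: the "system" only handles long prompts.
def toy_system(prompt: str) -> str:
    return prompt.upper() if len(prompt) > 6 else prompt


def toy_grade(prompt: str, output: str) -> float:
    return 1.0 if output.isupper() else 0.0


inputs = ["hero section", "pricing table", "footer", "navbar"]
print(run_eval(inputs, toy_system, toy_grade))  # EvalResult(pass_rate=0.5, mean_score=0.5)
```

With a real model, `system` is nondeterministic, so rerunning the same inputs can shift these numbers; a large input set keeps that noise small relative to the changes you care about.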

To get a holistic view of how our system performs, we want to measure it along a few different axes. For example, because language models are built to interpret human language, we often need to apply subjective judgment when evaluating how well the model performs. We may also want to grade output on a scale rather than against a simple binary pass/fail criterion. Let’s go over a few types of evaluations:

Subjective vs. objective evaluations

In a subjective evaluation, we use human judgment to determine how well the response suits the input. Subjective evaluations often use a multi-point rating system. For example, our AI teams want to understand, semi-subjectively, how good a generated component looks. I’ll go into more detail on how we accomplish this later, but note that we use a 3-point scale to capture this judgment: “Succeeds”, “Partially Succeeds”, and “Fails”.
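Reporting the distribution across all three grades, rather than collapsing everything to pass/fail, keeps the “Partially Succeeds” signal visible. The sketch below also assumes numeric weights of 1, 0.5, and 0 for the three labels so a single mean score can be tracked over time; those weights are an illustrative choice, not something the scale itself prescribes.

```python
from collections import Counter
from enum import Enum


class Grade(Enum):
    # Assumed numeric weights for the 3-point scale; the mapping is illustrative.
    SUCCEEDS = 1.0
    PARTIALLY_SUCCEEDS = 0.5
    FAILS = 0.0


def summarize(grades: list[Grade]) -> dict[str, float]:
    """Return the share of outputs at each grade plus the mean numeric score."""
    if not grades:
        raise ValueError("no grades to summarize")
    counts = Counter(grades)
    total = len(grades)
    summary = {grade.name: counts[grade] / total for grade in Grade}
    summary["mean_score"] = sum(grade.value for grade in grades) / total
    return summary


grades = [Grade.SUCCEEDS, Grade.SUCCEEDS, Grade.PARTIALLY_SUCCEEDS, Grade.FAILS]
print(summarize(grades))
# {'SUCCEEDS': 0.5, 'PARTIALLY_SUCCEEDS': 0.25, 'FAILS': 0.25, 'mean_score': 0.625}
```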

Objective evaluations (also called heuristic evaluations) check whether a specific true/false condition is met. For example, if you require responses to be returned in a specific format, you can write code that checks that every generated output is correctly formatted.
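As a sketch of such a heuristic check, the code below assumes the required format is a JSON object with a known set of keys (the keys `type` and `children` are hypothetical placeholders) and reports the fraction of outputs that pass.

```python
import json


def is_valid_format(output: str, required_keys: set[str]) -> bool:
    """Heuristic check: the output parses as a JSON object with every required key."""
    try:
        parsed = json.loads(output)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and required_keys.issubset(parsed)


def format_pass_rate(outputs: list[str], required_keys: set[str]) -> float:
    """Fraction of generated outputs that pass the format check."""
    if not outputs:
        raise ValueError("no outputs to check")
    return sum(is_valid_format(o, required_keys) for o in outputs) / len(outputs)


outputs = [
    '{"type": "section", "children": []}',  # valid
    '{"type": "section"}',                  # missing "children"
    'Sure! Here is your component: {...}',  # not JSON at all
]
print(format_pass_rate(outputs, {"type", "children"}))  # 0.3333333333333333
```

Because the check is plain code, it can run on every output of every evaluation at essentially no cost.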

Creating subjective evaluations: human evaluations

The quickest way to get started with model evaluations is to generate a set of test inputs and have a human manually inspect and grade the outputs against your predefined evaluation criteria. While this requires minimal setup and yields high-quality results from the start, it’s extremely time-consuming and can be expensive in developer hours. To reduce the amount of manual work, we looked for ways to automate the evaluation process.
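A lightweight way to run a human evaluation is to export each input/output pair to a spreadsheet, let a reviewer fill in a grade, and read the grades back. The sketch below uses Python’s standard `csv` module; the column layout and the helper names `write_grading_sheet` and `read_grades` are our own assumptions, and the labels reuse the 3-point scale described earlier.

```python
import csv
import io

ALLOWED_GRADES = ("Succeeds", "Partially Succeeds", "Fails")


def write_grading_sheet(pairs: list[tuple[str, str]], criteria: str) -> str:
    """Render (input, output) pairs as CSV with an empty 'grade' column for a reviewer."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["criteria", "input", "output", "grade"])
    for prompt, output in pairs:
        writer.writerow([criteria, prompt, output, ""])
    return buf.getvalue()


def read_grades(sheet: str) -> list[str]:
    """Parse a completed sheet, rejecting blank grades or labels outside the scale."""
    grades = []
    for row in csv.DictReader(io.StringIO(sheet)):
        if row["grade"] not in ALLOWED_GRADES:
            raise ValueError(f"invalid grade {row['grade']!r} for input {row['input']!r}")
        grades.append(row["grade"])
    return grades
```

When writing the sheet to disk, open the file with `newline=""`, as the `csv` documentation recommends, so spreadsheet tools read the rows correctly.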

Automating subjective evaluations: grading AI with AI

Objective evaluations are easy to automate, because you can usually write code that performs the check. Subjective evaluations are trickier. For these, we turn to language models again, using them to judge how well a response performs against a set of criteria. Fortunately, large language models are surprisingly good at grading whether another model’s output has accomplished a given task. Their grades don’t always match human judgment one-to-one, but in aggregate they are often good at spotting larger changes across a wide set of prompts. For example, automated evaluations have not been very reliable at diagnosing what specifically causes low quality scores in individual outputs, but they are typically good at determining whether a change to the system yields a net improvement across the board. This is often because the grading model looks at each output in isolation, without the context of how all the other outputs look.
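The sketch below shows the general shape of an LLM-as-judge grader. `call_model` is a hypothetical stand-in for whichever provider client you use (it takes a prompt string and returns the reply text), and the prompt wording and one-word verdict format are assumptions chosen to make the reply easy to parse, not Webflow’s actual grading prompt.

```python
from typing import Callable

# Hypothetical grading prompt; real prompts usually spell out the criteria in more detail.
JUDGE_PROMPT = """You are grading the output of another AI system.

Task given to the system:
{task}

System output:
{output}

Grading criteria:
{criteria}

Explain your reasoning briefly, then put exactly one word on the final line:
SUCCEEDS, PARTIAL, or FAILS."""

VERDICT_SCORES = {"SUCCEEDS": 1.0, "PARTIAL": 0.5, "FAILS": 0.0}


def judge(task: str, output: str, criteria: str, call_model: Callable[[str], str]) -> float:
    """Ask a grading model for a verdict and map it onto the 3-point scale."""
    prompt = JUDGE_PROMPT.format(task=task, output=output, criteria=criteria)
    reply = call_model(prompt)
    lines = reply.strip().splitlines()
    # Only the final line is parsed; tolerate stray whitespace and a trailing period.
    verdict = lines[-1].strip().rstrip(".").upper() if lines else ""
    if verdict not in VERDICT_SCORES:
        raise ValueError(f"unparseable judge reply: {reply!r}")
    return VERDICT_SCORES[verdict]


# A fake model so the example runs offline.
def fake_model(prompt: str) -> str:
    return "Spacing is consistent and the copy fits.\nSUCCEEDS"


print(judge("Build a pricing section", "<section>...</section>", "Looks polished", fake_model))
```

Since the judge sees one output at a time, its scores are most trustworthy when averaged across the whole input set rather than read individually.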

To gain confidence in your automated scores, you can run AI and human evaluations side by side and measure how closely the automated grades match the human ones. In practice, you’ll usually run automated evaluations frequently and periodically conduct a human evaluation to verify that the automated scores haven’t drifted far from the human-graded ground truth. At Webflow, for example, our best practice has been to conduct manual scoring weekly and rely on automated scores for day-to-day validation of developers’ changes. We also rely on automated scores to detect unexpected quality regressions.
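One simple way to quantify how well automated grades track human grades is the exact-match agreement rate on the same set of outputs, plus a tally of which disagreements occur. The sketch below assumes both graders use the same 3-point labels; the function name `agreement` is illustrative.

```python
from collections import Counter


def agreement(human: list[str], automated: list[str]) -> tuple[float, Counter]:
    """Return exact-match agreement and a count of (human, automated) disagreements."""
    if not human or len(human) != len(automated):
        raise ValueError("need two non-empty, equal-length lists of grades")
    pairs = list(zip(human, automated))
    matches = sum(h == a for h, a in pairs)
    disagreements = Counter((h, a) for h, a in pairs if h != a)
    return matches / len(pairs), disagreements


human = ["Succeeds", "Fails", "Partially Succeeds", "Succeeds"]
automated = ["Succeeds", "Partially Succeeds", "Partially Succeeds", "Succeeds"]
rate, diffs = agreement(human, automated)
print(rate)   # 0.75
print(diffs)  # Counter({('Fails', 'Partially Succeeds'): 1})
```

Exact agreement is the simplest measure; when one grade dominates the data, a chance-corrected statistic such as Cohen’s kappa is a common alternative. The disagreement tally also shows whether the automated grader is systematically more lenient or harsher than humans.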

Evaluations in practice

On our AI teams, we’ve begun using evaluations extensively, both within our development lifecycle and as a means of alignment across stakeholders. When we first started developing Webflow AI projects, we knew the quality of our features was poor but lacked a shared language for discussing quality. Because our team was used to identifying software bugs, we tried using a Jira-based workflow to address language model quality problems. However, that workflow didn’t tell developers which problems occurred most frequently or how much a given fix reduced how often a problem occurred.
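Unlike a ticket per bug, an evaluation run can show how often each kind of failure occurs and how much a fix moved that number. The sketch below assumes each graded output has been tagged with zero or more failure categories (the tag names `broken_layout` and `invalid_json` are hypothetical) and compares per-category rates between two runs.

```python
from collections import Counter


def failure_rates(tags_per_output: list[list[str]]) -> dict[str, float]:
    """Fraction of outputs showing each failure category, most frequent first."""
    if not tags_per_output:
        raise ValueError("no outputs to analyze")
    total = len(tags_per_output)
    # set() so a category counts at most once per output
    counts = Counter(tag for tags in tags_per_output for tag in set(tags))
    return {tag: n / total for tag, n in counts.most_common()}


def compare_runs(before: list[list[str]], after: list[list[str]]) -> dict[str, float]:
    """Change in each category's rate between two runs; negative means fewer failures."""
    old, new = failure_rates(before), failure_rates(after)
    return {tag: new.get(tag, 0.0) - old.get(tag, 0.0) for tag in sorted(old.keys() | new.keys())}


before = [["broken_layout"], ["broken_layout", "invalid_json"], [], ["invalid_json"]]
after = [[], ["invalid_json"], [], []]
print(compare_runs(before, after))  # {'broken_layout': -0.5, 'invalid_json': -0.25}
```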

We’re hiring!

We’re looking for product and engineering talent to join us on our mission to bring development superpowers to everyone.
