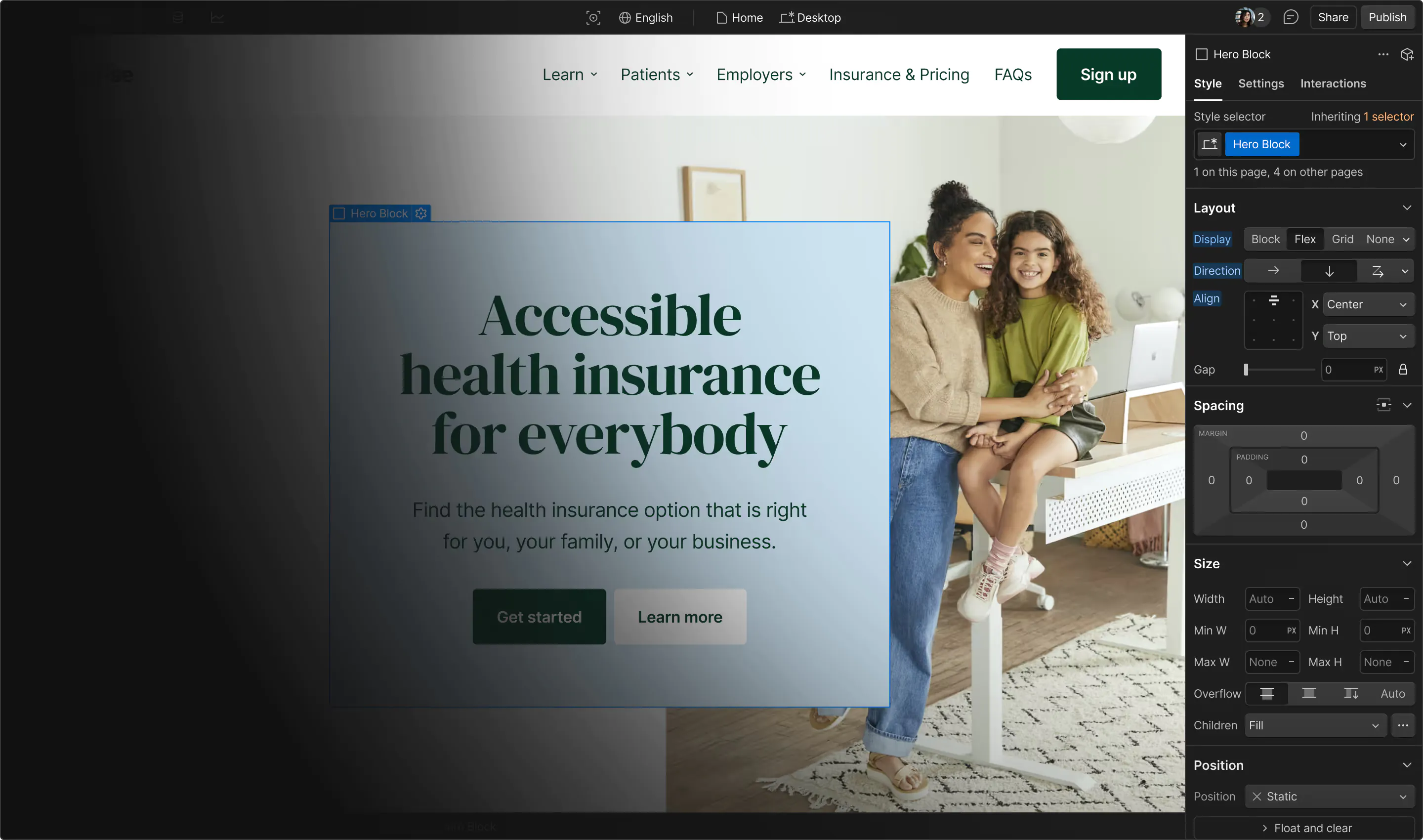

On February 28, 2017, the internet “had a sick day” as Amazon’s core storage service — AWS S3 — had its largest outage.

If you tried to use the internet that day, you might remember it, as the outage took down some of the largest websites on the internet, including Reddit, Netflix, Slack, and countless others.

Before that fateful day, Webflow Hosting had a lifetime uptime of over 99.99% and hadn’t suffered a single significant outage since launch.

Unfortunately, because S3 is such a central component of Webflow Hosting, this outage also affected many websites hosted with Webflow (which we detailed in our postmortem). And that meant a lot of unfortunate events happened: businesses lost leads and sales, design pitches went sour, and designers lost valuable time.

We never want that to happen to any of you. Which is why we’re happy to say:

We learned a lot from this outage, and we’re taking comprehensive steps to make Webflow Hosting even more reliable, so it can scale for the years to come. We’ve got all the details on that for you below, but first:

Why S3? Or, how Webflow handles site hosting

You’ve always been able to publish your Webflow projects to a live domain, thanks to Webflow’s hosting stack. When we built the platform, we knew we’d want something that would scale with our growth. That meant looking for something in the cloud.

We chose S3 from the start because of its speed, reliability, and scale. In effect, Webflow could use S3 as a near-infinite file system to host all site assets, including HTML, JavaScript, CSS, images, and videos. It’s great at not only storing the files, but also at retrieving them very quickly. S3 has also proven very reliable, experiencing just a few hours of downtime in the past 8 years.

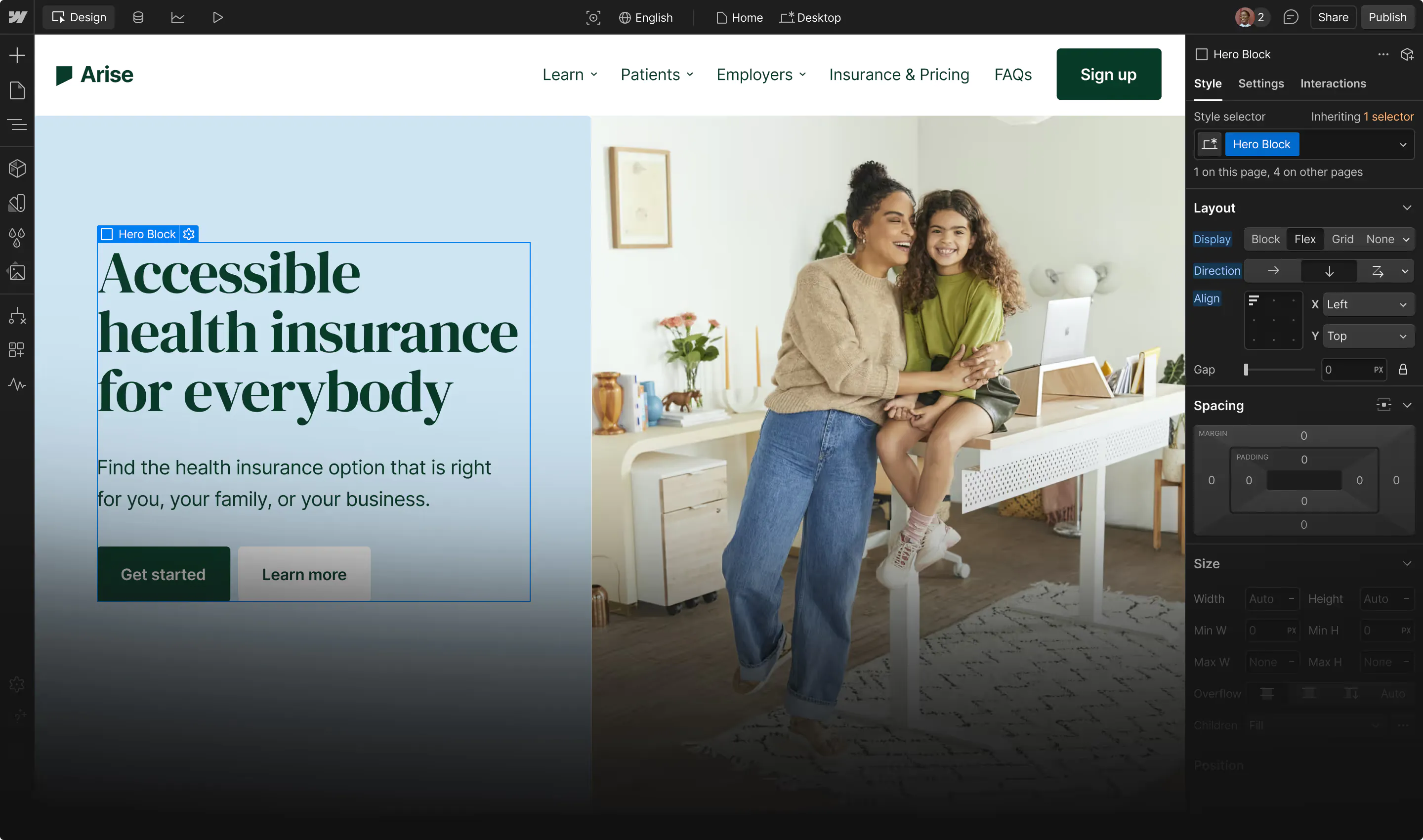

So how do we use S3? In essence, S3 works as a backend file system for all Webflow-hosted sites. Raw site assets are stored in S3 when you publish a Webflow site, then distributed through our Fastly CDN so all your visitors — no matter where they are — get to enjoy speedy page loads.

But when S3 goes down, that distribution chain breaks down. Or at least, it used to.

Here are the steps we’re taking to improve our hosting:

Note: The rest of this gets fairly technical. If you dig that sort of thing, you’ll probably enjoy it. If you don’t, the TL;DR is that we’re taking a bunch of steps to improve our hosting service’s reliability and keep you from being affected by future S3 outages.


Step 1: Improving S3’s reliability

To safeguard against future S3 outages, we’re implementing multi-region redundancy and active-passive failover. Basically, that means we’ll be storing even more copies of your site’s content in our server network, so if one area fails, we can keep serving your site without a hitch, even when an entire AWS region degrades or goes down entirely.

To help us get to multi-region S3, we’ve taken the following steps:

- We’ve copied billions of files over to another Amazon datacenter located in Ohio. (The main one is in Virginia.)

- We then set up a replication process to transfer new file uploads to the new (Ohio) datacenter as soon as they’re done uploading to the main (Virginia) one.

- We’ve deployed another cluster of web servers to use in case the main web server cluster is unavailable. (More on this later.)
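The backfill-then-replicate approach in the list above can be pictured with a small in-memory model. This is only an illustrative sketch: the `Bucket` and `ReplicatedStore` classes, the file names, and the queue-based copying are hypothetical stand-ins rather than AWS APIs, and the sketch says nothing about how the real replication process is implemented. The region codes are the standard AWS names for Virginia and Ohio.

```python
from collections import deque


class Bucket:
    """Hypothetical in-memory stand-in for a storage bucket in one region."""

    def __init__(self, region):
        self.region = region
        self.objects = {}

    def put(self, key, data):
        self.objects[key] = data


class ReplicatedStore:
    """Writes go to the primary; copies to the replica are queued and applied later."""

    def __init__(self, primary, replica):
        self.primary = primary
        self.replica = replica
        self.pending = deque()  # keys uploaded to the primary but not yet copied

    def backfill(self):
        """One-time copy of everything already stored in the primary."""
        for key, data in self.primary.objects.items():
            self.replica.put(key, data)

    def upload(self, key, data):
        """Store in the primary first, then schedule replication."""
        self.primary.put(key, data)
        self.pending.append(key)

    def replicate_pending(self):
        """Copy queued uploads to the replica and return how many were copied."""
        copied = 0
        while self.pending:
            key = self.pending.popleft()
            self.replica.put(key, self.primary.objects[key])
            copied += 1
        return copied


virginia = Bucket("us-east-1")  # main datacenter
ohio = Bucket("us-east-2")      # new datacenter
virginia.put("site/index.html", b"<html>old</html>")

store = ReplicatedStore(virginia, ohio)
store.backfill()                                    # step 1: copy existing files
store.upload("site/app.js", b"console.log('hi')")   # step 2: new upload lands in Virginia
copied = store.replicate_pending()                  # ...and is then copied to Ohio
```

After the last line, both buckets hold the same two files, which is the property a failover to the second region depends on.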

Also, by configuring a DNS Failover Routing Policy, we’ll be able to automatically route traffic over to the new datacenter if our primary datacenter ever goes down. This will improve our redundancy considerably, since we’ll now be able to route traffic to a completely new datacenter, and in the future, several others in different regions around the world.
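As a rough picture of what active-passive failover means, the sketch below chooses between two endpoints based on health status. It is a simplified model under stated assumptions, not the DNS provider's actual logic: the `Endpoint` class, the `pick_endpoint` function, and the choice to return the primary when both are down are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str
    healthy: bool


def pick_endpoint(primary, secondary):
    """Active-passive selection: prefer the primary, use the secondary only on failure."""
    if primary.healthy:
        return primary
    if secondary.healthy:
        return secondary
    # Both are down: this sketch simply returns the primary (an assumption).
    return primary


primary = Endpoint("virginia", healthy=True)
secondary = Endpoint("ohio", healthy=True)
normal = pick_endpoint(primary, secondary).name

primary.healthy = False  # simulate the primary datacenter failing its health check
during_outage = pick_endpoint(primary, secondary).name
```

In normal operation the secondary receives no traffic at all, which is what distinguishes active-passive from an active-active setup where both regions serve requests.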

Step 2: Streamlining our devops

To date, our engineering team has been focused on building solutions that can scale automatically.

Given we’re in the unpredictable world of web hosting, where any number of our sites can experience a spike in traffic, our infrastructure has to be elastic to scale with the demand.

However, during the scramble caused by the latest S3 outage, we discovered that there were several improvements that we could make to our devops.

- First, we’re adding Terraform, an infrastructure-as-code tool that will further automate the deployment of our hosting stack. During the outage, we realized that standing up servers still involved a couple of error-prone manual steps. Even though we use Chef to build, install, and configure many virtual instances on AWS EC2, provisioning them still required a few manual keystrokes.

- Second, we’re using Docker and Rancher to change the way we build and launch virtual machines. In the past four years, the complexity of Webflow hosting has increased drastically. We offer the most robust hosting service, as well as a variety of powerful features that enable thousands of businesses to run their online presence on Webflow. As that complexity grew, our tooling stopped covering certain use cases.

So in addition to focusing on S3 reliability, we’ve also started to transition our infrastructure to Docker, Terraform, and Rancher. Have no idea what these are? Read on.

Docker: A shipping container for our code

Docker is a way to package software into a standardized container that can be easily moved across different platforms and environments.

You can think of Docker as a shipping container. Shipping containers all look the same from the outside, and any ship, harbour, or crane knows how to operate one. Same goes for Docker.

With it, we can now package our code into a Docker container, and know that it will run on any computing environment that also supports Docker. This allows us to deploy our code easily and predictably to new servers at a scale that wasn’t previously possible.

Rancher: A crane to organize containers

Now that we have all these different containers running different parts of our code, we need a way to orchestrate how they work with each other. For that, we rely on Rancher. Rancher is the crane that organizes all the containers, so the right ones can be stacked in the right order and position. It also allows us to monitor the health of each container, and to make sure we have enough computing resources to provide each service.
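To make the orchestration idea a little more concrete, here is a minimal sketch of the kind of check an orchestrator performs: compare how many containers each service should have with how many are actually healthy. This is not Rancher's API or algorithm. The `reconcile` function, the service names, and the container IDs are hypothetical and exist only for illustration.

```python
def reconcile(desired, containers):
    """Compare desired replica counts with observed container health.

    desired: {service: replica_count}
    containers: list of (service, container_id, healthy) tuples
    Returns (to_start, to_replace): how many extra healthy containers each
    service needs, and which unhealthy container ids should be replaced.
    """
    healthy_counts = {service: 0 for service in desired}
    to_replace = []
    for service, container_id, healthy in containers:
        if service not in desired:
            continue  # unknown service: out of scope for this sketch
        if healthy:
            healthy_counts[service] += 1
        else:
            to_replace.append(container_id)

    to_start = {
        service: count - healthy_counts[service]
        for service, count in desired.items()
        if count > healthy_counts[service]
    }
    return to_start, to_replace


# Hypothetical services and containers, for illustration only.
desired = {"web": 3, "renderer": 2}
observed = [
    ("web", "web-1", True),
    ("web", "web-2", False),
    ("renderer", "renderer-1", True),
    ("renderer", "renderer-2", True),
]
to_start, to_replace = reconcile(desired, observed)
```

Here the `web` service has only one healthy container out of the three it should have, so the result asks for two more and flags `web-2` for replacement, while `renderer` needs nothing.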

Terraform: Charting the course

Terraform enables us to quickly provision and maintain our production environments.

This is essentially our container ship’s chart and instruction manual. It allows us to stand up completely new datacenters with our container fleet in different parts of the world in a fraction of the time. Since Terraform has a language that allows us to describe our infrastructure with code, it can talk to several different cloud providers to quickly and easily configure our infrastructure so it’s much more predictable and scalable. It also has the side benefit of making sure that engineers review all of our infrastructure changes, which means fewer developer errors, and better change-management procedures.
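The idea of describing infrastructure with code can be sketched as a comparison between a declared, desired state and what currently exists. The example below is a conceptual illustration only: it is written in Python rather than Terraform's own configuration language, it does not reproduce Terraform's actual planning logic, and the `plan` function and all resource names and instance counts are hypothetical.

```python
def plan(desired, current):
    """Diff desired infrastructure against what exists and list the changes needed."""
    actions = []
    for name in sorted(desired.keys() | current.keys()):
        if name not in current:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("destroy", name))
        elif desired[name] != current[name]:
            actions.append(("update", name))
    return actions


# Desired state, as it might be declared in a reviewed configuration file.
desired = {
    "web_cluster_virginia": {"region": "us-east-1", "instances": 6},
    "web_cluster_ohio": {"region": "us-east-2", "instances": 6},
}
# What is actually running right now.
current = {
    "web_cluster_virginia": {"region": "us-east-1", "instances": 4},
    "legacy_worker": {"region": "us-east-1", "instances": 1},
}
changes = plan(desired, current)
```

Because the desired state lives in a file, the list of changes can be reviewed by another engineer before anything is applied, which is the change-management benefit described above.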

Looking ahead

Over the next year, Webflow will be investing close to a million dollars to make sure our managed hosting infrastructure is state of the art. Recent advancements in cloud technology are rapidly changing the web hosting world, and we want to make sure that every one of you (and your clients) can capitalize on their benefits.

We hope this article sheds some light on the investments we’re making in our hosting technology, so that Webflow Hosting remains the fastest, most reliable option for your business.