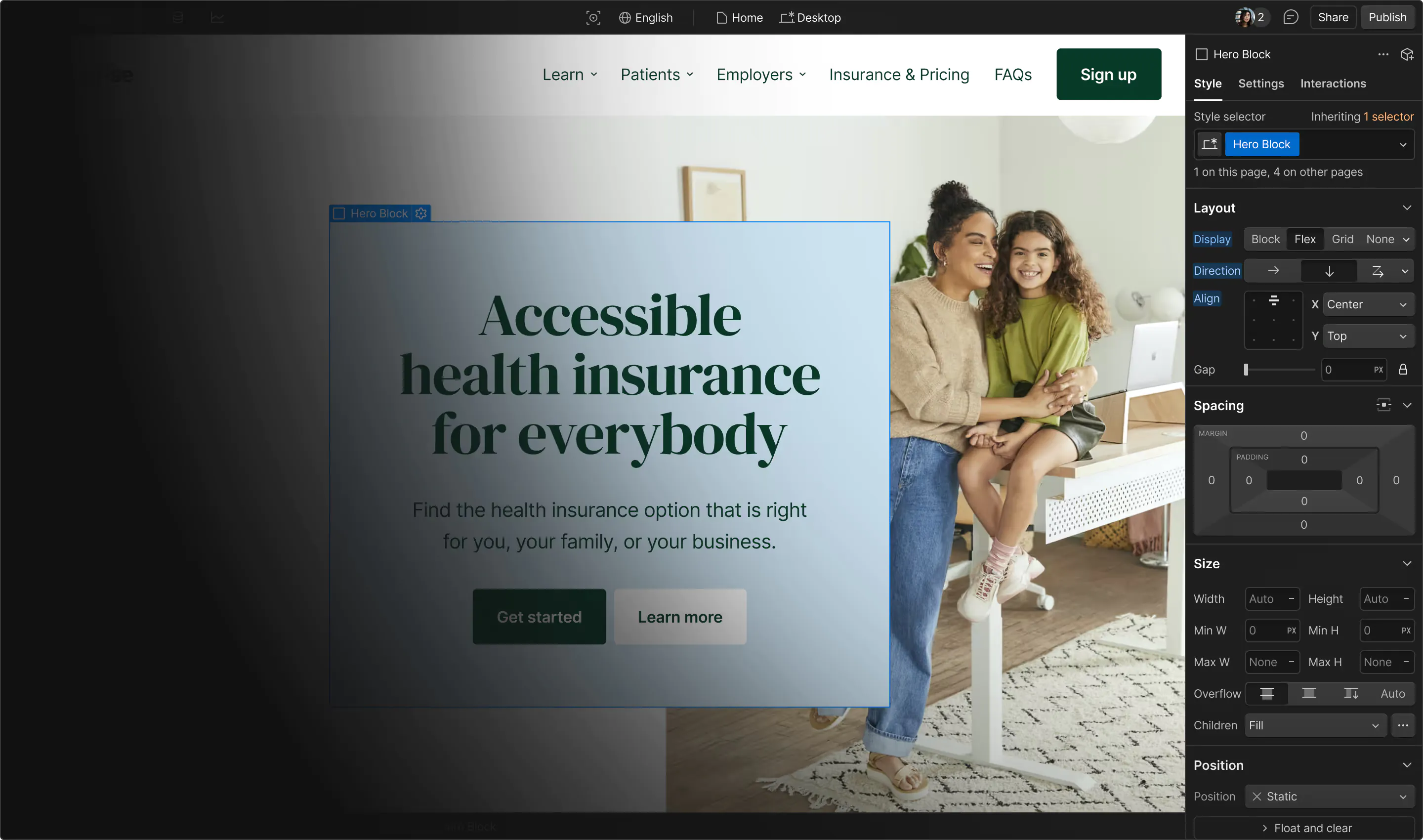

When thousands of builds a month pushed CI into our top three expenses, we saw an opportunity and rebuilt it for cost, scale, and resilience.

Webflow's Continuous Integration (CI) system is critical to our monorepo’s development process. As part of that process, we execute an extensive suite of unit and browser-based tests by dynamically sharding the suite across Buildkite jobs and scaling horizontally to meet compute demand. We run tens of thousands of builds a month, and as Engineering at Webflow has continued to grow, we have outgrown our existing cost-saving measures. For example, we use the Nx build system to optimize test execution in our monorepo, but our complex dependency graph limits its effectiveness. Additionally, our smoke testing suite is in its infancy, so we still run all of our 88,000 (and counting) tests for every pull request test branch in our merge queue, which merges thousands of PRs a month.

As a result, the Delivery Loop team’s CI costs are substantial, ranking third in overall spend within engineering. This cost is driven primarily by the volume of EC2 instances required to execute our test suite while keeping the feedback loop short for engineers. A full monorepo Buildkite pipeline execution (a.k.a. a build), which includes building our code for testing and then running the tests, can take over 30 machine-hours. At peak, we use over 9,000 vCPUs and 36,000 GiB of memory.

How can we significantly reduce our CI costs without sacrificing system reliability or runtime, and without introducing any manual work for engineers? We undertook a targeted optimization effort, focusing on key areas like EC2 instances, AWS Config settings, and EBS volume sizes. According to Cost Explorer, these areas were our account’s top three sources of spend. Our efforts achieved a 33% reduction in amortized monthly CI costs while maintaining performance and reliability.

Leveraging Spot Instances

We significantly reduced our CI costs by transitioning most of our workloads to EC2 spot instances. While we had an active EC2 Instance Savings Plan, it locked us into a specific instance family, which discouraged experimentation with other kinds of instances. Furthermore, the savings plan’s coverage was insufficient for typical weekday compute needs and wasteful on weekends, when we needed little compute. Spot instances offer flexibility and substantial cost savings, up to 60% compared to on-demand instances, which directly addresses our team's number one cost driver: EC2 spend. The majority of our compute workload consists of testing jobs, so we gradually moved them over while leaving critical jobs, such as our target determinator job, untouched. This move required addressing the inherent risk of spot instances being interrupted: we observed a 13% spot interruption rate for the month of April. We handled the risk in two key ways: building resilience into the system and carefully managing the rollout.

Designing for interruptions

To ensure our CI pipeline remained stable despite potential spot instance interruptions, we implemented robust retry mechanisms and test checkpointing. Our approach here mirrors successful strategies used by other companies.

- Use Buildkite's automatic job retries. This feature allows us to specify that jobs interrupted by a spot instance being reclaimed should automatically restart, preventing manual intervention by engineers at Webflow. Since interruptions can occur at any point in time and a job could unluckily be interrupted multiple times, we specified a limit of 5 retries.

- Add test checkpointing. This more complex solution allows us to save progress on test runs. If a spot instance is interrupted, we can resume testing from the last saved point rather than restarting the entire file. We leveraged a Redis instance in our CI cluster’s VPC as a fast, temporary datastore for this checkpointing information. This choice was driven by the large volume of tests, high daily build count, and the temporary nature of the data. One challenge we faced here was having to implement checkpointing four different times for the four test runners we use within our monorepo.
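The retry rule from the first bullet might look like the following when a pipeline is generated dynamically. This is a minimal sketch, not our actual configuration: the step label, command, and queue name are hypothetical, and it assumes a reclaimed spot instance surfaces in Buildkite as a lost agent (exit status -1) or an `agent_stop` signal reason, which is worth verifying against your own job logs.

```python
import json

# The retry limit described above; every other value here is illustrative.
SPOT_RETRY_LIMIT = 5


def with_spot_retries(step: dict, limit: int = SPOT_RETRY_LIMIT) -> dict:
    """Return a copy of a Buildkite command step with automatic retry rules."""
    retry_rules = [
        # Assumption: the agent disappeared because its instance was reclaimed.
        {"exit_status": -1, "limit": limit},
        # Assumption: the agent was asked to stop before the job finished.
        {"signal_reason": "agent_stop", "limit": limit},
    ]
    return {**step, "retry": {"automatic": retry_rules}}


if __name__ == "__main__":
    # Hypothetical step definition for one test shard.
    step = {
        "label": "unit tests (shard 1)",
        "command": "yarn test --shard=1",
        "agents": {"queue": "spot-tests"},
    }
    # Buildkite accepts JSON pipelines, so the output can be piped to
    # `buildkite-agent pipeline upload`.
    print(json.dumps({"steps": [with_spot_retries(step)]}, indent=2))
```

Scoping the rules to interruption-like exits keeps genuine test failures from being retried five times.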

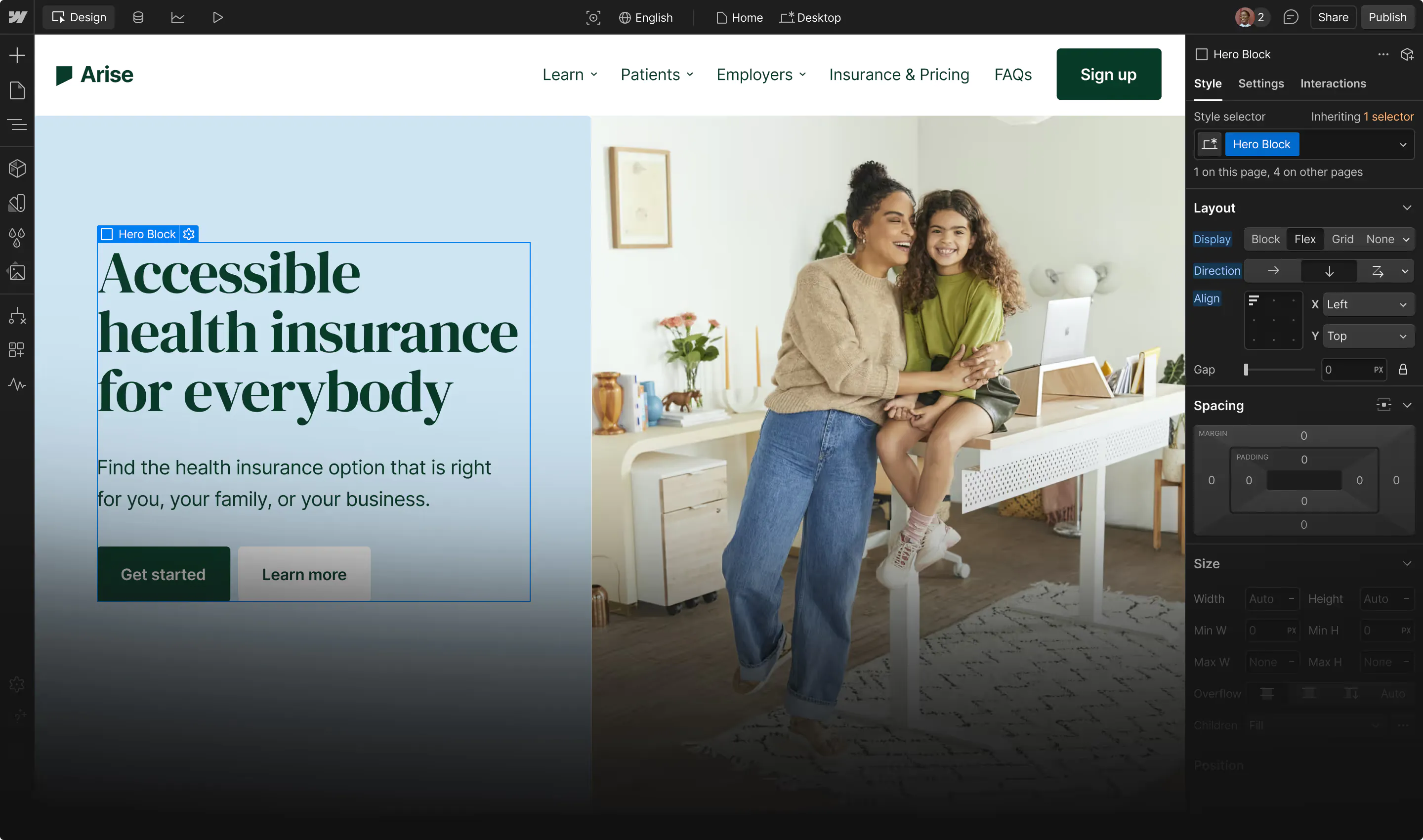
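The checkpointing idea can be sketched in a few lines. This is an illustration under stated assumptions rather than any of our four runner integrations: `InMemoryStore` is a hypothetical stand-in that mirrors the `sadd`, `smembers`, and `expire` methods of a Redis client so the example runs without a server, the key format is made up, and only passing tests are recorded here.

```python
from typing import Callable, Iterable


class InMemoryStore:
    """Hypothetical stand-in for a Redis client (same method names)."""

    def __init__(self) -> None:
        self._sets: dict[str, set[str]] = {}

    def sadd(self, key: str, *values: str) -> int:
        bucket = self._sets.setdefault(key, set())
        before = len(bucket)
        bucket.update(values)
        return len(bucket) - before

    def smembers(self, key: str) -> set[str]:
        return set(self._sets.get(key, set()))

    def expire(self, key: str, seconds: int) -> bool:
        return key in self._sets  # TTL is a no-op in memory


def run_with_checkpoints(
    store,
    checkpoint_key: str,
    tests: Iterable[str],
    run_test: Callable[[str], bool],
    ttl_seconds: int = 24 * 60 * 60,
) -> list[str]:
    """Run tests, skipping any that an earlier attempt already completed.

    checkpoint_key must stay the same across retries of the same shard.
    Returns the test IDs executed in this attempt.
    """
    done = store.smembers(checkpoint_key)
    executed = []
    for test_id in tests:
        if test_id in done:
            continue  # finished before the previous interruption
        passed = run_test(test_id)
        executed.append(test_id)
        if passed:
            # Record progress right away so an interruption loses one test at most.
            store.sadd(checkpoint_key, test_id)
            store.expire(checkpoint_key, ttl_seconds)  # the data is temporary
    return executed


if __name__ == "__main__":
    store = InMemoryStore()
    key = "ci:checkpoint:build-123:shard-4"  # hypothetical key format
    print(run_with_checkpoints(store, key, ["a.test", "b.test"], lambda t: True))
    # A retried job with the same key has nothing left to run.
    print(run_with_checkpoints(store, key, ["a.test", "b.test"], lambda t: True))
```

With a real Redis client, note that set members come back as bytes unless the client is configured to decode responses.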

A gradual, monitored rollout

Finally, to avoid disruptions to our engineers during this transition, we carefully managed the rollout of spot instances.

- We selected the price-capacity-optimized allocation strategy for spot instances. This strategy results in fewer spot interruptions than a purely price-optimized strategy, at a somewhat higher cost. While test checkpointing reduces the time cost of a spot interruption, there is still fixed overhead from our agents needing to clone branches, fetch missing Docker images or node_modules directories from our cache, and start up services. Initially, we did not specify enough instance types for the strategy, so we ran into capacity constraints because EC2 could not provide enough spot instances. We saw CI performance regressions until we manually fell back to on-demand instances. To give the allocation strategy more options to work with, we used the Spot Instance Advisor to add similar instance types with the same vCPU count and a low interruption rate. In some cases that included network-optimized, disk-optimized, or memory-optimized instance types.

- We ran initial experiments in a staging version of our CI environment. We started with interrupting individual instances across multiple job types, then moved on to running aggressive interruption experiments using AWS Fault Injection Service (FIS). These tests allowed us to identify and address issues with our automatic retry configuration and dynamic pipeline generation code without affecting production builds.

- For changes requiring production-level load testing, we implemented a lightweight feature flagging system. This system enabled us to control the use of spot instances without requiring code changes to be merged into PR branches. We needed it because FIS experiments could not accurately reproduce the real-world spot interruption rates we would see once our spot instance queues were allowed to autoscale up to their maximum instance counts. If we saw an unhealthy number of interruptions, we could retreat to our on-demand queues, which were still in place.

- We then followed a gradual rollout strategy, starting with draft pull requests, then expanding to all pull requests, and finally enabling them for the merge queue. This phased approach minimized the risk of disruptions and gave us time to monitor the system via CloudWatch and Buildkite advanced queue metrics. We adjusted the system and built up teamwide knowledge along the way:

  - We wanted to cap the increase in spending that resulted from doubling the number of queues and ASGs. Across each phase of the rollout, we gradually adjusted max instance counts by decreasing the max for our on-demand auto-scaling groups (ASGs) and increasing the max for our spot instance ASGs. In other words, we were temporarily overprovisioning the system, so we moved the overprovisioning as compute needs shifted to the spot instance queues.

  - Prior to and during the spot instance rollout to draft PRs, we created a new dashboard, added automated alarms for spot interruption rates and long Buildkite job queue times, and wrote on-call runbooks to manage the system. We needed whoever was on call to be able to handle spot capacity constraints, which could be addressed either by adjusting the percentage distribution of the ASG between spot and on-demand or by adding base on-demand capacity. We typically chose the latter option and found that it worked well in practice for our situation. We eventually stopped seeing capacity constraints after adding more instance types to the mix as described above.

  - After rolling out to all PRs, and because of the spot interruption rates we observed, we decided to implement test checkpointing, starting at the file level with Buildkite metadata as the checkpointing data store. We deemed the metrics acceptable enough that we did not roll back to draft PRs only. Following that implementation, we saw improvements in our metrics, enabling the smooth rollout of spot instances to the merge queue. We then followed up with suite-level or test-level checkpointing, depending on the runner, and moved to Redis for storing test results to handle the increased volume of data.
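To make the allocation settings above concrete, here is a rough sketch of the `MixedInstancesPolicy` structure that an EC2 Auto Scaling group accepts. The launch template name and the instance types are hypothetical (the types were chosen only because they share a vCPU count), and the `on_demand_base` parameter corresponds to the runbook option of adding base on-demand capacity.

```python
import json


def mixed_instances_policy(
    launch_template_name: str,
    instance_types: list[str],
    on_demand_base: int = 0,
    spot_percentage: int = 100,
) -> dict:
    """Build a MixedInstancesPolicy dict for an Auto Scaling group."""
    if not instance_types:
        raise ValueError("provide at least one instance type")
    if not 0 <= spot_percentage <= 100:
        raise ValueError("spot_percentage must be between 0 and 100")
    unique_types = list(dict.fromkeys(instance_types))  # de-dupe, keep order
    return {
        "LaunchTemplate": {
            "LaunchTemplateSpecification": {
                "LaunchTemplateName": launch_template_name,
                "Version": "$Latest",
            },
            # More overrides give the allocation strategy more spot pools to draw from.
            "Overrides": [{"InstanceType": t} for t in unique_types],
        },
        "InstancesDistribution": {
            # Guaranteed on-demand instances before any spot capacity is used.
            "OnDemandBaseCapacity": on_demand_base,
            # Share of capacity above the base that stays on-demand.
            "OnDemandPercentageAboveBaseCapacity": 100 - spot_percentage,
            "SpotAllocationStrategy": "price-capacity-optimized",
        },
    }


if __name__ == "__main__":
    # Hypothetical 16-vCPU instance types spanning several families.
    types = ["c6i.4xlarge", "c5.4xlarge", "m6i.4xlarge", "r6i.4xlarge", "c6in.4xlarge"]
    policy = mixed_instances_policy("ci-test-agents", types, on_demand_base=2)
    print(json.dumps(policy, indent=2))
```

A dict like this can be passed as the `MixedInstancesPolicy` argument when creating or updating an Auto Scaling group, for example through boto3 or an infrastructure-as-code tool.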

This methodical approach to adopting spot instances and handling spot interruptions allowed us to capture significant cost savings without compromising the stability and reliability of our critical CI pipeline.

Exempting AWS Config resource types

To further reduce costs, we optimized our AWS Config settings, which were generating unnecessary overhead due to our high volume of short-lived EC2 instances. AWS Config tracks changes to AWS resources, which is crucial for security and compliance. However, for our CI pipeline, we create thousands of temporary EC2 instances each day, leading to a massive amount of tracking data and associated costs. With the increase in instance count due to our rollout of spot instances, we also saw increases in Config costs. We needed a way to filter what was being recorded, without creating a security hole. Thankfully, these adjustments were relatively straightforward.

We addressed this issue by selectively exempting certain EC2 resource types from AWS Config recording.

Certain resource types, such as EC2 volumes, were not critical for our security monitoring, so we created fine-grained exceptions to exclude them. We added exceptions, checked Cost Explorer after a day, and made additional tweaks to the settings until we saw significant cost reductions. Because some cost-related compliance rules cannot function without this data being recorded, we have chosen to compensate by periodically checking the Cost Optimization Hub.
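For illustration, an exclusion like this can be expressed as an AWS Config recording group. The sketch below only builds the request payload. The recorder name and role ARN are placeholders, and apart from EC2 volumes, the excluded resource types are assumptions rather than the list we actually settled on.

```python
import json


def recording_group_excluding(resource_types: list[str]) -> dict:
    """Record every supported resource type except the ones listed."""
    return {
        # Both flags are set to false when using the exclusion strategy.
        "allSupported": False,
        "includeGlobalResourceTypes": False,
        "exclusionByResourceTypes": {"resourceTypes": sorted(set(resource_types))},
        "recordingStrategy": {"useOnly": "EXCLUSION_BY_RESOURCE_TYPES"},
    }


def recorder_request(name: str, role_arn: str, excluded: list[str]) -> dict:
    """Build keyword arguments for a put_configuration_recorder call."""
    return {
        "ConfigurationRecorder": {
            "name": name,
            "roleARN": role_arn,
            "recordingGroup": recording_group_excluding(excluded),
        }
    }


if __name__ == "__main__":
    request = recorder_request(
        name="default",  # placeholder recorder name
        role_arn="arn:aws:iam::123456789012:role/config-recorder",  # placeholder
        excluded=[
            "AWS::EC2::Volume",            # mentioned above
            "AWS::EC2::NetworkInterface",  # assumption, for illustration only
        ],
    )
    print(json.dumps(request, indent=2))
    # With boto3 installed and credentials configured, this could be applied as:
    #   boto3.client("config").put_configuration_recorder(**request)
```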

As a result, we eliminated unnecessary costs without compromising our overall security posture.

Shrinking our EBS volumes

We significantly reduced our CI costs by right-sizing our EBS volumes, which were previously over-provisioned. This was a surprisingly large area of potential savings, as many of our EC2 instances had unnecessarily large attached volumes. By analyzing our usage patterns and metrics via post-job logging, we identified opportunities to reduce volume sizes without affecting performance.

For our EC2 instances with local storage, we optimized the volume size to match the size of our base AMI, plus a small buffer.

- We played it safe here and chose the minimum EBS volume size and then added some extra space "just in case."

- We also ran into inode exhaustion issues with the default ext4 filesystem due to the large number of small files that is typical of `node_modules` directories. To resolve this, we switched to the XFS filesystem for our SSDs, as it is better at handling a high number of inodes and generally has better performance for our workloads.
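Both points above reduce to simple checks. The sketch below is a rough approximation under stated assumptions: the buffer size is an arbitrary example rather than the value we chose, and `inode_usage` relies on `os.statvfs`, which is available on Unix-like systems only.

```python
import os

GIB = 1024 ** 3


def volume_size_gib(ami_bytes: int, buffer_gib: int = 2) -> int:
    """Smallest whole-GiB volume that fits the base AMI plus a fixed buffer."""
    if ami_bytes <= 0:
        raise ValueError("ami_bytes must be positive")
    ami_gib = -(-ami_bytes // GIB)  # integer ceiling division
    return ami_gib + buffer_gib


def inode_usage(path: str = "/"):
    """Fraction of inodes in use on the filesystem holding path, or None.

    Some filesystems report zero total inodes; None is returned in that case.
    """
    stats = os.statvfs(path)
    if stats.f_files == 0:
        return None
    return 1 - stats.f_ffree / stats.f_files


if __name__ == "__main__":
    print(volume_size_gib(10 * GIB))  # 10 GiB AMI plus the example 2 GiB buffer
    usage = inode_usage("/")
    if usage is not None and usage > 0.9:
        print("inode usage above 90%; many small files, as in node_modules?")
```

Watching inode usage alongside bytes used matters here because a disk full of small files can run out of inodes long before it runs out of space.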

For our other EC2 instances, we conducted a detailed analysis and discovered that the attached disks were significantly over-provisioned.

- Metrics showed that many volumes could be reduced by roughly half. We took a cautious approach and cut back on all volumes, while making sure to monitor usage afterwards.

- We also optimized our extensive `node_modules` caching system, improving its handling of disk exhaustion. This optimization involved refining our cleanup policies so that we manage space efficiently by iteratively cleaning out old cache entries and removing old Docker volumes, which previously did not need to be cleaned up.
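The iterative cleanup in the last bullet can be sketched as an oldest-first eviction loop. This is a simplified illustration, not our cache implementation: the `CacheEntry` fields and the 80% target are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheEntry:
    path: str
    size_bytes: int
    last_used: float  # Unix timestamp of the most recent cache hit


def entries_to_evict(
    entries: list[CacheEntry],
    used_bytes: int,
    capacity_bytes: int,
    target_fraction: float = 0.8,
) -> list[CacheEntry]:
    """Pick the least recently used entries until usage drops to the target."""
    target = capacity_bytes * target_fraction
    evict = []
    for entry in sorted(entries, key=lambda e: e.last_used):
        if used_bytes <= target:
            break  # enough space reclaimed; keep the newer entries
        evict.append(entry)
        used_bytes -= entry.size_bytes
    return evict


if __name__ == "__main__":
    cache = [
        CacheEntry("node_modules-aaa", 30, last_used=1.0),
        CacheEntry("node_modules-bbb", 30, last_used=2.0),
        CacheEntry("node_modules-ccc", 30, last_used=3.0),
    ]
    for entry in entries_to_evict(cache, used_bytes=95, capacity_bytes=100):
        print("would remove", entry.path)
```

Stopping as soon as usage falls below the target keeps recently used entries warm, which matters when agents pay a fixed cost to refetch a missing `node_modules` directory.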

By addressing these issues, we significantly reduced EBS volume costs while maintaining our overall system performance.

What’s next?

Our CI cost optimization journey doesn't stop here. We've identified several exciting areas for continued improvement and potential for even greater efficiency gains.

Graviton Adoption: We evaluated AWS Graviton instances and observed promising cost-performance benefits. However, we encountered some incompatibility issues with parts of our CI stack and had to wrap up the project before we were able to fix them all. We plan to revisit Graviton instances later in the year. We're confident this will unlock further cost reductions without sacrificing performance.

S3 Lifecycle Management: We have S3 buckets whose stored data has grown nonstop. Simply deleting all old data is not feasible because of how we handle site assets and snapshots: there is no common prefix we can use to apply lifecycle rules. We should be able to enhance our asset and snapshot copying scripts to use S3 Object Lock in governance mode on these objects and then turn on lifecycle rules, which would enable us to save tens of thousands of dollars per year.

By tackling these next steps, we aim to further refine our CI infrastructure, driving down costs and optimizing our resources.

Conclusion

As a direct result of these optimization efforts, we’ve achieved a significant 33% reduction in amortized monthly CI costs when comparing end-of-Q4 spend last year to end-of-Q1 spend this year. To break the reduction down a bit further, we saw a 42.7% reduction in EC2 instance spend, an 89.6% reduction in Config spend, and a 42.4% reduction in EC2 other spend. We’re already seeing these savings reflected in our budgets and expect that reduction to grow even further in Q2 as the full impact of our more recent changes takes hold.

But these numbers represent more than just cost savings. For Webflow’s engineers, this means a faster, more reliable CI pipeline as my team reinvests our savings into enhancements elsewhere. It means less time waiting for builds and tests to complete as we can horizontally scale further than before, and more time spent building features and solving complex problems. With these optimizations in place, we’ve laid a foundation for future growth and innovation without being hindered by excessive CI costs. The positive impact of these changes will ripple through our daily workflows, enabling us to deliver higher-quality software more efficiently. We’re excited about the momentum we’ve gained and the potential it unlocks for our team and our users.

We’re hiring!

We’re looking for product and engineering talent to join us on our mission to bring development superpowers to everyone.