Who are you? What ‘AI Assistant’ are we talking about?

👋 Hello, I’m Tom — I’m the tech lead for Webflow’s Applied AI team. Our team’s mandate is to accelerate the development of AI-based features in the Webflow product, and recently we shipped our biggest AI feature yet: the AI Assistant.

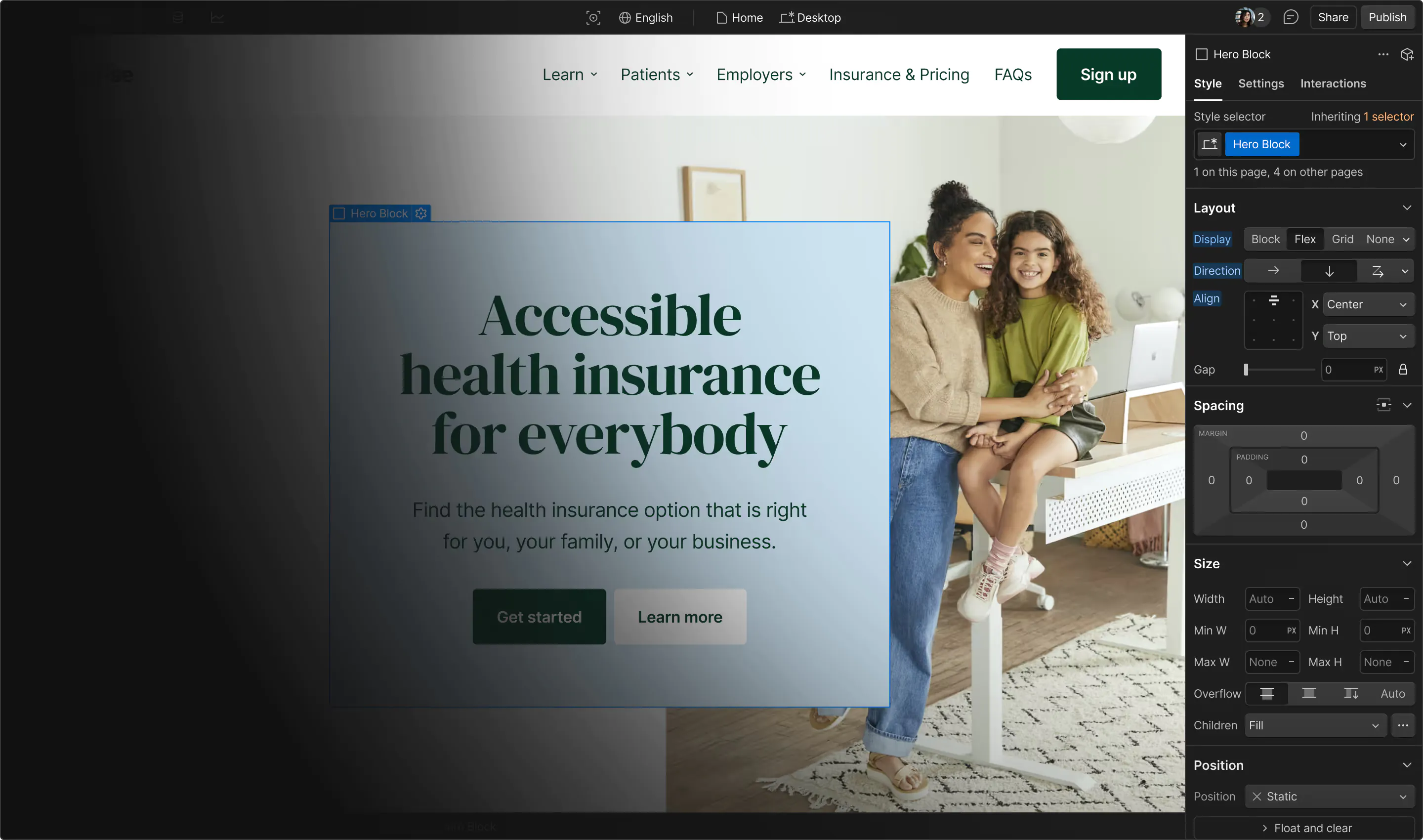

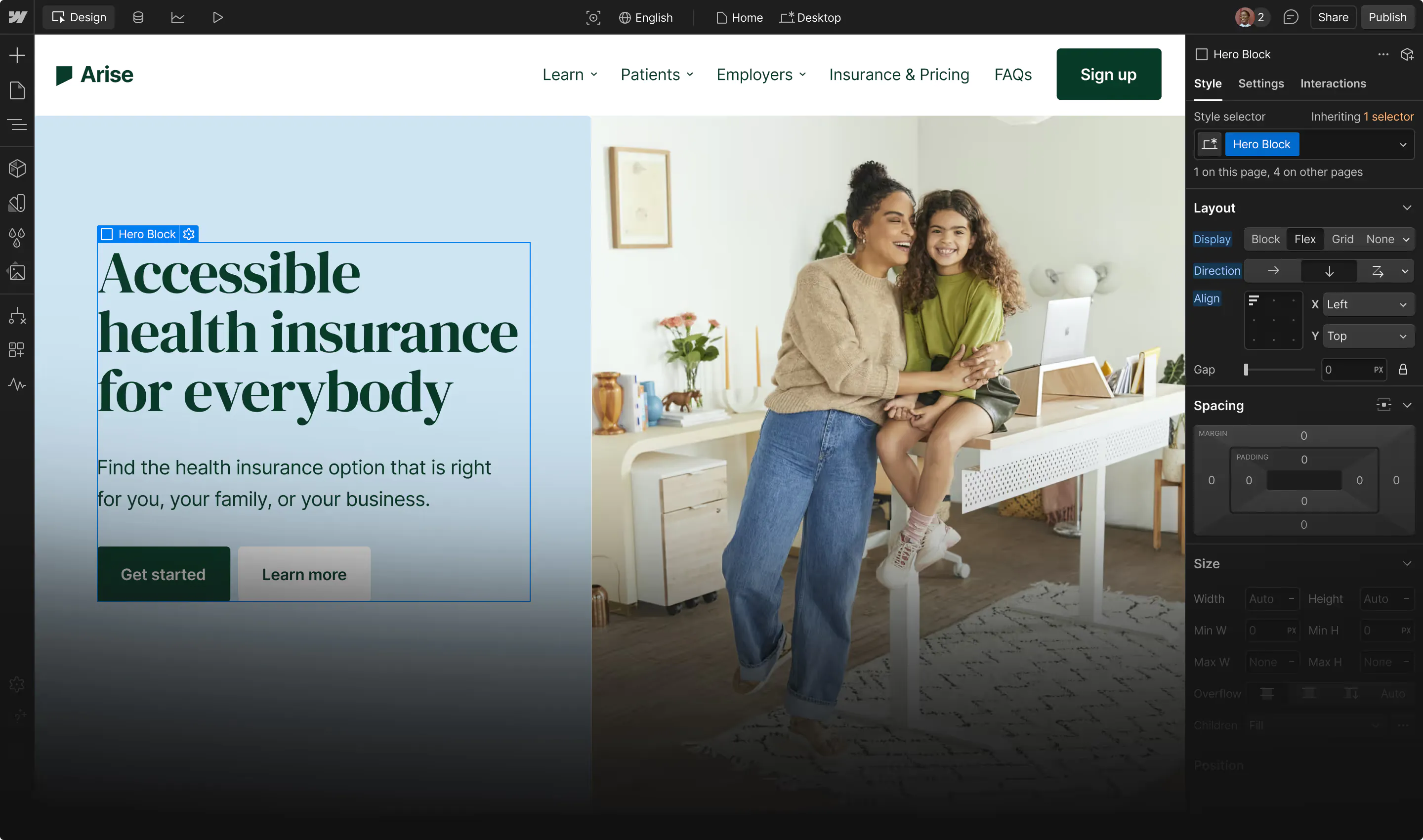

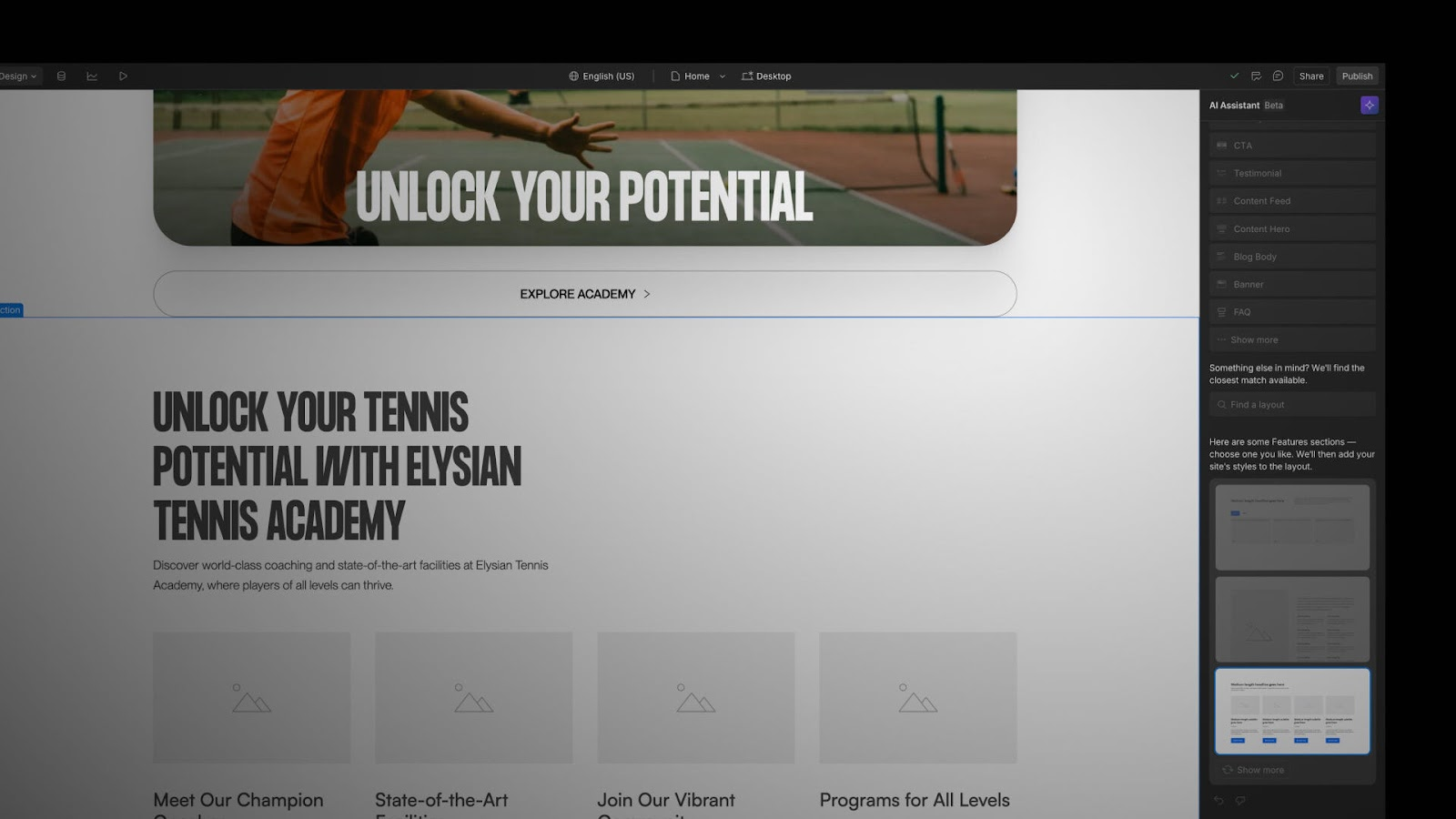

AI Assistant will eventually have all sorts of “skills,” but today its main skill is creating new sections in an existing Webflow site. It lives on the right side of the Designer and looks like this:

I’m writing this post after our public beta launch at Webflow Conf 2024. It felt like a good time to reflect on the ups and downs of developing an AI feature at Webflow.

The AI Assistant launch was great!

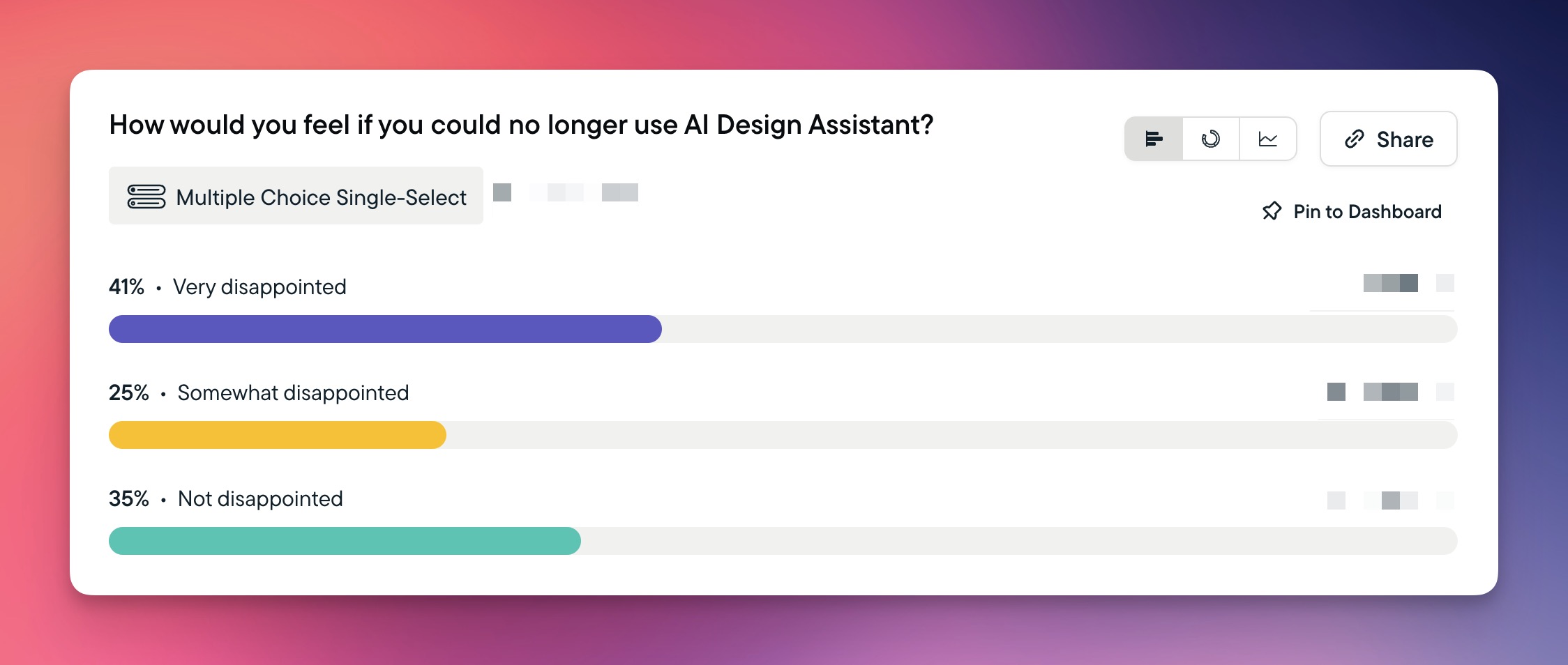

This post spends a lot of time exploring the challenges we faced while developing AI Assistant, but – spoiler alert – I want to say up front that this story has a happy ending. The AI Assistant launch went off without a hitch and our post-launch product-market fit score (41%) surpassed all expectations.

Pulling this off required herculean efforts from the newly-formed Applied AI team. I'm forced to use the unspeakably cringey “flying the plane while we’re building it” metaphor — this team had to figure out so many new things about AI product development while at the same time tackling an extremely ambitious AI product.

As a result of this team’s efforts we’ve launched the AI Assistant and its first “skill,” section generation. In addition, we’ve accumulated the AI-specific infrastructure, processes, and institutional knowledge that will accelerate the addition of many more AI Assistant skills in the near future. A smashing success all around!

But we got sucked into many “AI traps”

Although it had a happy ending, the AI Assistant story also had its dramatic setbacks. And while all software projects have setbacks, I couldn’t shake the feeling that this project had unique setbacks — things that seemed specific to AI projects. The typical advice around software development, especially around agile software development, seemed so much harder to apply to this project.

I kept coming back to four “AI traps” that seemed to constantly nudge this project away from quick, iterative, experimental development toward large, risky, waterfall-style development. And while I think there were other factors that were outside our control (e.g., we simply couldn’t have built today’s AI Assistant using 2023’s state-of-the-art models) — these traps explain a good chunk of our AI Assistant delays.

Trap 1: Overestimating current AI abilities

It’s very tempting to assume that we’re about five minutes away from an AI apocalypse. Every morning I wake up to a deluge of Slacks/emails/Tweets announcing new state-of-the-art models, trailblazing AI tools, and magical demos. Even the AI detractors are usually in the “AI is too powerful” camp.

While the detractors might be right in the long term, right now AI is often very stupid. For example, GPT-4o still thinks that white text is readable on white backgrounds:

“The .paragraph class provides a white color for the paragraph text within the cards, ensuring readability against the white background of the cards.”

— Real GPT-4o response
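To see why that response is wrong, it helps to put a number on "readable." The sketch below uses the relative luminance and contrast ratio formulas from WCAG 2.x; the function names and the `isReadable` helper are made up for this example and are not part of Webflow or the AI Assistant. White text on a white background scores 1:1, the lowest possible ratio, while WCAG AA asks for at least 4.5:1 for normal-size body text.

```typescript
// A minimal sketch of the WCAG 2.x contrast-ratio calculation.
// All names here are illustrative, not part of any Webflow API.
type RGB = [number, number, number]; // sRGB channels, 0-255

// Convert one 0-255 sRGB channel to its linear-light value.
function toLinear(channel: number): number {
  const c = channel / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance: 0 for black, 1 for white.
function relativeLuminance(color: RGB): number {
  const [r, g, b] = color;
  return 0.2126 * toLinear(r) + 0.7152 * toLinear(g) + 0.0722 * toLinear(b);
}

// Contrast ratio ranges from 1 (identical colors) to 21 (black on white).
function contrastRatio(foreground: RGB, background: RGB): number {
  const l1 = relativeLuminance(foreground);
  const l2 = relativeLuminance(background);
  const lighter = Math.max(l1, l2);
  const darker = Math.min(l1, l2);
  return (lighter + 0.05) / (darker + 0.05);
}

// Hypothetical guard: 4.5 is the WCAG AA minimum for normal-size text.
function isReadable(foreground: RGB, background: RGB, minRatio = 4.5): boolean {
  return contrastRatio(foreground, background) >= minRatio;
}

const white: RGB = [255, 255, 255];
const black: RGB = [0, 0, 0];

console.log(isReadable(white, white)); // false: the ratio is 1
console.log(isReadable(black, white)); // true: the ratio is about 21
```

A check like this is cheap to run on generated styles, which makes it a reasonable guard against this particular kind of confident mistake.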

This tendency to overestimate the current technology slips into Webflow’s roadmap planning. At many points during AI Assistant’s development we said stuff like:

- “…the quality scorecard needs to be 70% green before the April private beta launch…”

- “…let’s just train a model on [insert hideously complex domain here]…”

- “…it’ll take us around 5 engineer-weeks to teach the AI Assistant to apply existing styles…”

All of these claims had some supporting evidence, like demos, proofs of concept (PoCs), or maybe just saved ChatGPT conversations. But in every case we assumed that if we caught a whiff of a successful output from a large language model (LLM), we could eventually coax that same LLM to produce perfect outputs for every edge case.

It turned out we were extremely wrong about all of the assumptions above. It was way more challenging than we thought to improve the scorecard. Training models is slow, expensive, and difficult to do for broad use cases. And we’re still trying to improve how the AI Assistant applies site styles.

This tendency to overestimate AI abilities meant that our agile-looking roadmap with bite-sized goals (e.g., “we’ll ship AI-generated gradients next week to see if users like them”) turned into a months-long slog to produce features that we weren’t sure our users even wanted.

Trap 2: Demo enthusiasm

This is the trap I fell into the most often, because I love demos.

And — to be clear — I think demos are a crucial part of developing AI features. But it’s far too easy to see a demo of “working code” and think, “This feature is basically launched! We just need to add some University articles, update some copy, and bing bang boom let’s SHIP IT!”

But here are a few things that make demos not shippable:

- They only demo the “happy path.” I spend more time talking to the AI Assistant than I spend talking to my wife, so I know exactly the right phrases to whisper in its ear to get it to work. This makes my demos look pretty good! But real users are very likely to use language that the AI Assistant doesn’t like, and will therefore have less success.

- They only demo the successes. This is the whole point of Loom’s “restart” button: if the demo gods abandon me in the middle of the demo I can just hit “restart”, and you don’t get to see the 50 failed attempts before the one that worked.

- They only work in special cases. For a while, the AI Assistant only worked on blank sites. Then it worked on a single template. Then it worked for a few more templates. But if you just stumbled upon a random demo you might think, reasonably, that the AI Assistant worked everywhere!

- They rely on a technology we can’t actually use. I did a whole bunch of demos that relied on TailwindCSS to create sections. These demos looked great, because GPT is a world-class expert at using TailwindCSS (since there are loads of great Tailwind examples in its training data). But the AI Assistant can’t just add weird Tailwind utility classes to everyone’s Webflow site, so these demos didn’t really mean much for the actual AI Assistant.

All of these “details” mean that the cool AI demo you just watched is a great starting point for more investigation but not an invitation to begin roadmap planning. All too often we jumped from “cool demo” to “okay the marketing launch is planned for April 4.”
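One way to make the gap between a demo and real-world behavior visible is to log every attempt, not only the one that made it into the recording, and then look at the pass rate per prompt. The sketch below is a hypothetical illustration, not the AI Assistant's actual evaluation tooling: the `Attempt` shape, the function names, and the sample data are all assumptions made up for this example.

```typescript
// Hypothetical record of one generation attempt.
interface Attempt {
  prompt: string;
  passed: boolean; // whether the output met the quality bar, by whatever check is used
}

// Fraction of attempts that passed; 0 when there is nothing to measure.
function passRate(attempts: Attempt[]): number {
  if (attempts.length === 0) return 0;
  const passes = attempts.filter((a) => a.passed).length;
  return passes / attempts.length;
}

// Group by prompt so one well-rehearsed phrase cannot hide failures elsewhere.
function passRateByPrompt(attempts: Attempt[]): Map<string, number> {
  const grouped = new Map<string, Attempt[]>();
  for (const attempt of attempts) {
    const bucket = grouped.get(attempt.prompt) ?? [];
    bucket.push(attempt);
    grouped.set(attempt.prompt, bucket);
  }
  const rates = new Map<string, number>();
  grouped.forEach((bucket, prompt) => rates.set(prompt, passRate(bucket)));
  return rates;
}

// Made-up data: the rehearsed demo prompt versus a phrasing a new user might try.
const sampleAttempts: Attempt[] = [
  { prompt: "rehearsed demo prompt", passed: true },
  { prompt: "rehearsed demo prompt", passed: true },
  { prompt: "first-time user prompt", passed: false },
  { prompt: "first-time user prompt", passed: false },
  { prompt: "first-time user prompt", passed: true },
  { prompt: "first-time user prompt", passed: false },
];

console.log(passRate(sampleAttempts)); // overall rate across all six attempts
passRateByPrompt(sampleAttempts).forEach((rate, prompt) => {
  console.log(`${prompt}: ${rate}`);
});
```

In this made-up sample, a demo recorded with the rehearsed prompt would look flawless even though only half of all attempts passed, which is the kind of evidence a single recording cannot show.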

We’re hiring!

We’re looking for product and engineering talent to join us on our mission to bring development superpowers to everyone.

Trap 3: The marketing moment

Speaking of marketing… what’s that huge gray thing looming in the corner over there?

Elephant in the room:

Shipping an AI product isn’t only about solving customer problems, it’s also about marketing.

In the last couple years there has been enormous pressure on tech companies to ship AI features. No company wants to be “left behind” — still making carriages in a post-Model T world — so they scramble to ship something AI-related.

Amid this pressure, many tech companies quickly launched half-baked AI features that were universally mocked. This is a family blog so I won’t name names, but I’m sure you’re familiar with some of the botched AI launches of the past couple years.

I don’t know if any single person at Webflow ever said this explicitly, but our emergent behavior can be summed up as a reaction to these failed AI launches. It’s as if we said:

“Dang, we really need to launch an AI product to show the world we’re an AI company. But all these recent AI launches are pretty embarrassing, right? We should build an AI feature, but we’d better be sure it’s perfect and awesome before we launch it.”

— Webflow’s collective consciousness

And I think this was one of our biggest mistakes. This sort of thinking was crystallized into these product requirements:

- It has to use AI

- It has to be press-worthy

- It has to be nearly perfect before showing it to anyone

This leads to very waterfall-style development. We can’t cobble together a quick MVP and release it to a bunch of users, because then they’d make fun of us! And we can’t ship something small, because then we can’t have our splashy marketing moment.

And this flawed reasoning dovetails perfectly with the sunk cost fallacy, which says:

“Holy smokes, we’ve spent a long time on this project—it’d better be really awesome when we launch it!”

— Webflow’s collective consciousness, again

All of this led to a long period of pre-launch development on AI Assistant. And although I think that development actually went pretty well, it would’ve been much easier to make product decisions if we had launched something smaller, sooner.

Trap 4: Moat-driven development

Everyone knows that software is all about moats. You can buy an Airbnb clone for under $2k, but Airbnb itself is worth $86 billion — and most of that delta is because of its moat, in the form of its very-difficult-to-replicate two-sided market.

So if we want Webflow to become an industry leader — a la Airbnb — we need to build a moat, right? And an AI-powered moat would be even better!

I think this is reasonable logic — we should always be on the lookout for possible moats we can build to put distance between us and our competitors. But I’m wary of “moat-driven development,” where we start with the moat and then try to back ourselves into a beloved product.

Ideally, we’d build products at the center of a Venn diagram with three overlapping circles, labeled “user” (pink), “AI” (yellow), and “moat” (blue).

But this is hard! It’s pretty much never clear up front where a particular product will fall in this diagram. So the danger of “moat-driven development” is that you start with something you know is in the blue (“moat”) circle, and then you sort of flail around trying to find adjacent parts of the solution space that overlap the pink (“user”) and yellow (“AI”) circles. But if this doesn’t work out, you’re left with a totally useless moat.

Some examples of moat-driven development:

- “Let’s train a model from scratch to do [something really cool] — then we can figure out what product to build on top of it.”

- “Let’s use fine-tuned models instead of off-the-shelf models to do [something really cool] — then we can figure out what product to build on top of it.”

- “Let’s build our own [insert expensive infra here] — then we can figure out what product to build on top of it.”

Obviously nobody says the second part out loud, but it’s implied when the first part is suggested without reference to any particular user problem, or without a discussion of other (cheaper, better) solutions to a user problem.

And the thing about moats is that — pretty much by definition — they’re very expensive to build. If it was easy to build a moat, then everyone would do it, and then… it wouldn’t be a moat! So starting with the moat means investing a lot of time and money into a project that might never turn into a useful feature.

To me, it seems way safer to start by investing in features you know are in the pink (“user”) circle, and then try to see which of those things, if any, you might be able to nudge in the direction of the yellow or blue circles. Because even if you end up investing in something solely in the pink circle, you’ve still built a useful feature.

But we should still be “moat aware.” As we stumble through the solution space of features to build, we should always be looking for features that users want and might have moats.

This sort of “natural” moat-finding has already happened a couple times with the AI Assistant:

- Building natively. As mentioned above, building an AI demo that spits out code in a popular framework (e.g. Tailwind) is relatively easy. These demos, however, are constrained by (1) the limits of that framework and (2) the lack of access to site context (like classes, variables, and components). By building a native AI Assistant within Webflow, we’ve created a tool that’s much more useful than the myriad framework-based UI generators.

- The Webflow Library. LLMs are bad at UI design. That’s why all successful “generate UI with AI” features have relied on pre-built components or UI libraries. But these products are only as good as their libraries, and building proprietary design libraries is expensive and slow. But we’ve already done it! Copying the AI Assistant would require copying our design library, which is protected IP.

In short: chasing moats can lead to long waterfall-style development of infra that we don’t know users want.

Avoiding the traps

Okay enough doom and gloom — let’s talk about the right way to build AI features!

How to avoid AI traps

- Spend more time on product and engineering experimentation.

Engineers want to build cool prototypes using new tech, and product managers want to solve meaty customer problems. But neither has all of the info needed to discover where that Venn diagram overlaps. The solution? We should allocate ample experimentation time for engineers to build quick PoCs that they can show to product managers, who can suggest refinements to those PoCs based on customer needs, etc. And we should spend more time in the experimentation phase making sure that the PoCs can eventually be turned into production-quality features.

- Treat demos as support for more investigation, not roadmap planning.

Demos are invaluable for communicating possible features to all stakeholders. But a demo is not proof that a feature is shippable. We should only exit the “experimentation phase” when we have other evidence that a proposed feature will meet our quality bar.

- Ship MVPs; don’t chase a marketing moment.

AI features tend to have far more degrees of freedom than traditional software features. A user can type anything into that chat box, and you’re kidding yourself if you think you can predict what they’ll type before you launch. That’s why launching early is crucial for AI features: there’s no way to guess what users will do. Luckily, the era of “AI marketing moments” seems to be fading. Good riddance!

- Start with the user problem; the moats will come later.

We should all be “moat aware” (i.e., we should be on the lookout for possible moats adjacent to the current feature), but we should be very wary when someone suggests building a moat without referencing a particular user problem and the other ways of solving that problem.

Easier said than done!

Just to reiterate: I fell for all of these traps, often multiple times. I’m writing them down here not to point any fingers, but to help other product teams avoid making the same mistakes as they build the next wave of AI-based features.

I am incredibly proud of the team that got us here — and the product we ultimately shipped — and I can’t wait to use some of these lessons to accelerate Webflow’s future AI product development!
