A well-crafted robots.txt file helps you guide the behavior of search engine bots.

Your website serves as your most powerful inbound marketing asset, so it’s crucial to strategically manage which pages search engines can access. With robots.txt, you can block non-essential pages from search engine crawlers to ensure your most valuable content receives attention and indexing priority, maintaining a focused, high-quality presence in search results.

A robots.txt file provides directives that give you control over how different web crawlers explore your company’s site. Read on to learn how crawlers work, what they look for in a robots.txt file, and how to write one.

What’s a robots.txt file?

A robots.txt file is a plain text file that sits in a site's root directory and tells search engine crawlers like Googlebot and Bingbot which pages to crawl and which to ignore.

Depending on traffic volume, search engine bots may try to crawl your site daily, which could bog down the web server and slow load times. But with a robots.txt file, you can identify pages and resources crawlers should skip, reducing the number of requests your company’s web server needs to process.

Why is robots.txt essential for SEO?

Web crawlers can take up a lot of processing power, especially if several are simultaneously exploring your company’s site. A robots.txt file gives you some control over how they crawl your content, which can result in the following SEO benefits.

Optimized crawl budget

Search engines weigh a site’s authority, speed, and size to decide how much time and how many resources to spend crawling it. Use your robots.txt file to exclude unnecessary files so crawlers discover your important pages within that limited budget.

Blocked duplicate content

Duplicate or redundant content can lead to canonicalization issues where search engines can’t determine which page is the best source of truth. Without that information, they can’t select the best result to show for a related search query. Excluding redundant copies from crawling keeps them from competing with the version you want to rank, which could otherwise push your pages lower on search results pages.

Conserved processing power

Crawlers are extremely well-optimized to affect your web server as little as possible. But the impact is still measurable, and a robots.txt file gives you some control over how much these crawlers impact your site’s performance.

For example, your robots.txt file can exclude large video files from being crawled so your web server doesn’t need to waste power serving them to a bot. Instead, your processors can focus on loading pages quickly for site visitors, and Google and other search engines take page speed into account when ranking pages.

How does a robots.txt file work?

Robots.txt files establish rules that web crawlers check before exploring a site. You organize these rules into groups, each of which applies to one or more crawlers, referred to in the file as “user agents.” Set rules for specific user agents or use an asterisk (*) to refer to all crawlers (except those that ignore the asterisk, like AdsBot-Google and AdsBot-Google-Mobile).

With robots.txt files, you can define guidelines for anything on your site, such as:

- Web pages — HTML, PDF, and XML files that you don’t want bots to crawl

- Directories and subdirectories — Whole sections of your site you want crawlers to avoid

- Media files — Unused or unimportant images, videos, or audio files

- Resource files — Scripts or style files that don’t offer valuable information to search engines

Robots.txt syntax

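Each group starts with one or more User-agent lines, followed by all the rules you want those crawlers to follow. Paths are case-sensitive, so /Example-File.html and /example-file.html count as different URLs. Directive names and user agent names are not case-sensitive for major crawlers like Googlebot, but it’s still worth double-checking your writing and keeping the capitalization consistent.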

The following group would tell Google’s robot, Googlebot, not to crawl a page called “example-file.html”:

User-agent: Googlebot

Disallow: /example-file.html
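
One way to sanity-check a group like this is to run it through a parser before you publish it. Here’s a minimal sketch that uses Python’s standard-library urllib.robotparser module. The www.example.com URLs are placeholders, and this parser’s matching is simpler than Googlebot’s, so treat the results as an approximation and not as a guarantee of how any search engine behaves.

```python
from urllib.robotparser import RobotFileParser

# The group from the example above, written the way it would appear in robots.txt.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /example-file.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Placeholder URLs on a hypothetical site.
blocked_url = "https://www.example.com/example-file.html"
other_url = "https://www.example.com/another-page.html"

# Googlebot is named in the group, so the Disallow rule applies to it.
googlebot_blocked_page = parser.can_fetch("Googlebot", blocked_url)
googlebot_other_page = parser.can_fetch("Googlebot", other_url)

# Bingbot has no group in this file, so nothing is disallowed for it.
bingbot_blocked_page = parser.can_fetch("Bingbot", blocked_url)

print(googlebot_blocked_page, googlebot_other_page, bingbot_blocked_page)
# prints: False True True
```

The rule only affects the crawler named in the group, which is why Bingbot can still fetch the same page.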

Here are the directives you can use in a robots.txt file. All of them belong to a group except Sitemap, which stands on its own and applies to the whole file:

- User-agent — The crawler(s) you want the group to target

- Disallow — Anything you don’t want the user agent to crawl

- Allow — Anything you want the user agent to crawl, even within a disallowed directory

- Sitemap — A link to a file that lists all of a website’s URLs

- Crawl-delay — How many seconds the user agent should wait between crawling files (although most search engines, including Google and Yandex, ignore this directive)

Here’s a robots.txt file example that creates rules for Googlebot and Bingbot specifically:

User-agent: Googlebot

User-agent: Bingbot

Disallow: /no-crawl/

Allow: /no-crawl/except-this-one.html

Crawl-delay: 3

Sitemap: https://www.example.com/sitemap.xml

This robots.txt file tells Googlebot and Bingbot not to crawl the /no-crawl/ subfolder, except for one file in that directory, except-this-one.html. The Crawl-delay directive asks for a 3-second pause between requests to prevent server overload, though only crawlers that support it will honor it (Bingbot does, Googlebot doesn’t). The Sitemap directive provides the location of the sitemap, which helps search engines understand the site structure more efficiently.
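
To see why the Allow rule wins for that one file, it helps to sketch the matching logic. Google’s documentation and RFC 9309 describe a “most specific rule wins” approach, where the longest matching path takes precedence and Allow wins a tie. The is_allowed helper below is a hypothetical, simplified illustration of that idea (no wildcard support), not any crawler’s real implementation. Python’s built-in urllib.robotparser applies rules in file order instead, so this sketch only uses it to read the Crawl-delay and Sitemap values. The site_maps() call assumes Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
User-agent: Bingbot
Disallow: /no-crawl/
Allow: /no-crawl/except-this-one.html
Crawl-delay: 3
Sitemap: https://www.example.com/sitemap.xml
"""

# The path rules from the group above, as (directive, path prefix) pairs.
GROUP_RULES = [
    ("disallow", "/no-crawl/"),
    ("allow", "/no-crawl/except-this-one.html"),
]


def is_allowed(rules, path):
    """Hypothetical helper: the longest matching prefix decides, Allow wins ties."""
    verdict, best_prefix = "allow", ""  # no matching rule means crawling is allowed
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        longer = len(prefix) > len(best_prefix)
        tie_goes_to_allow = len(prefix) == len(best_prefix) and directive == "allow"
        if longer or tie_goes_to_allow:
            verdict, best_prefix = directive, prefix
    return verdict == "allow"


print(is_allowed(GROUP_RULES, "/no-crawl/private.html"))          # prints: False
print(is_allowed(GROUP_RULES, "/no-crawl/except-this-one.html"))  # prints: True
print(is_allowed(GROUP_RULES, "/blog/"))                          # prints: True

# The standard-library parser can report the non-path directives.
parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
bing_delay = parser.crawl_delay("Bingbot")
sitemaps = parser.site_maps()

print(bing_delay)  # prints: 3
print(sitemaps)    # prints: ['https://www.example.com/sitemap.xml']
```

The parser reports the same delay for Googlebot because it only reads what the file says. Whether a crawler honors that value is up to the crawler.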

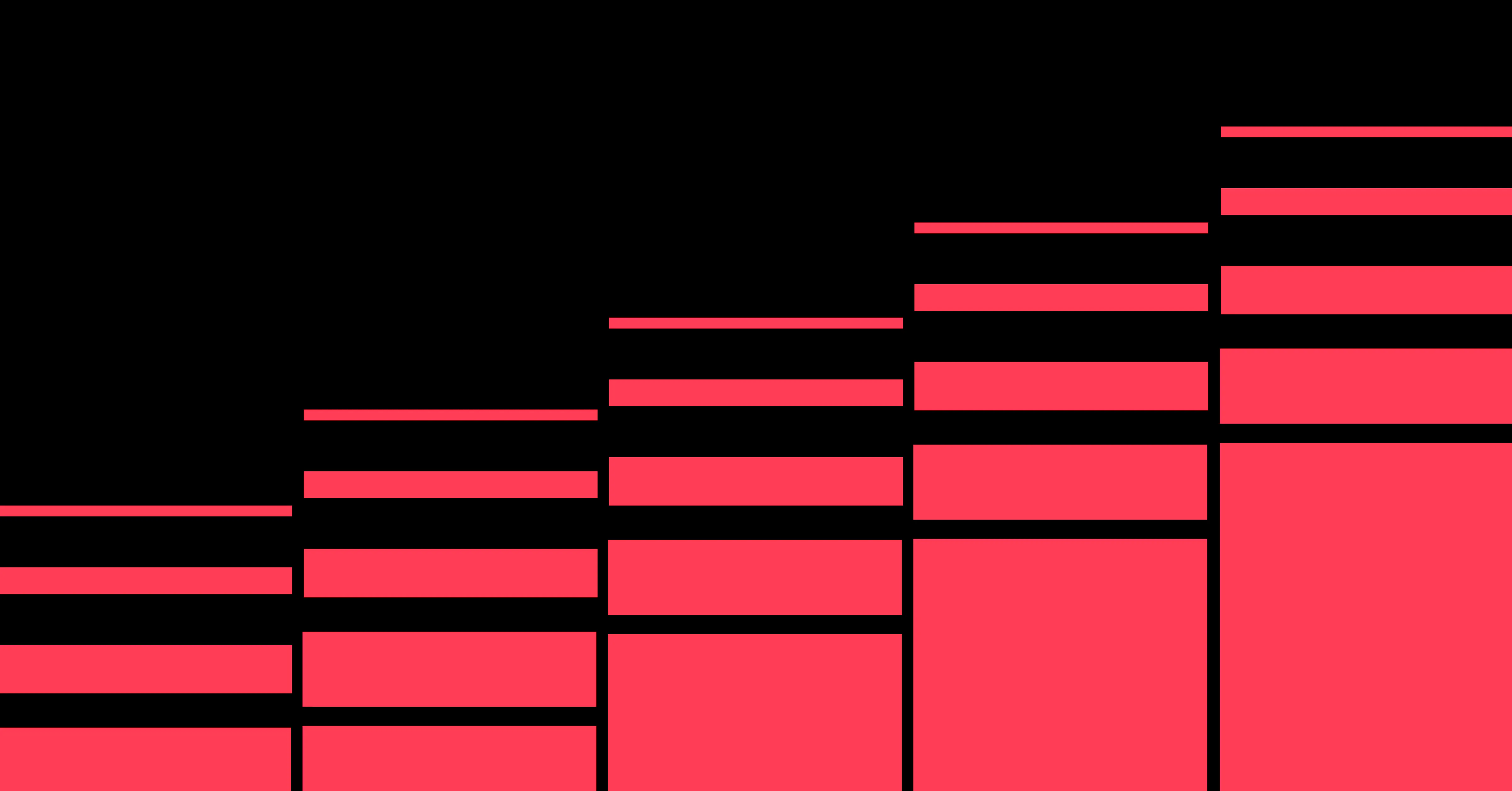

How to create a robots.txt file in 4 steps

Here’s a straightforward process for creating a robots.txt file and uploading it to your site.

1. Create a new TXT file

Open a basic text editor like Notepad or TextEdit. Don’t use a word processor like Microsoft Word because it’ll use characters like curly quotes that crawlers don’t parse correctly.

Create a new file, name it “robots,” and save it as a TXT file so the full filename is robots.txt. Remember that the name is case-sensitive, and it must be all lowercase. If given the option, select UTF-8 encoding.
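
If you’d rather script this step, here’s a minimal sketch in Python. The write_robots_txt function is a hypothetical helper, and the temporary folder stands in for wherever you keep your site files. The starter rule is the Googlebot example from the syntax section.

```python
import tempfile
from pathlib import Path


def write_robots_txt(directory, rules):
    """Save rules as a lowercase, UTF-8 encoded robots.txt file in directory."""
    path = Path(directory) / "robots.txt"  # the filename must be all lowercase
    path.write_text(rules, encoding="utf-8")
    return path


# Starter rules taken from the syntax example earlier in this article.
STARTER_RULES = "User-agent: Googlebot\nDisallow: /example-file.html\n"

# A temporary folder is used here as a stand-in for your project folder.
output_dir = tempfile.mkdtemp()
saved_path = write_robots_txt(output_dir, STARTER_RULES)

print(saved_path.name)  # prints: robots.txt
```

Writing the file from code sidesteps the curly-quote problem because the script only emits the plain characters you typed.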

2. Write rules

Use the syntax guide above to write rules for the user agents you want to affect. Here’s a list of the most common user agents:

- Google:

  - Googlebot

  - AdsBot-Google*

  - AdsBot-Google-Mobile*

  - GoogleOther

- Bing:

  - Bingbot

  - AdIdxBot

- Applebot

- YandexBot

- DuckDuckBot

- Baiduspider

- Facebot

- Slurp

* These two web crawlers ignore the asterisk (*) user-agent value. Your TXT file must explicitly address these agents.
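
Because those two crawlers skip the asterisk group, any rule you want them to follow has to appear in a group that names them. Here’s a minimal sketch that generates both groups from one list of paths so they stay in sync. The render_group helper and the /no-crawl/ path are assumptions for illustration.

```python
def render_group(user_agents, disallow_paths):
    """Build one robots.txt group: User-agent lines first, then Disallow rules."""
    lines = [f"User-agent: {agent}" for agent in user_agents]
    lines += [f"Disallow: {path}" for path in disallow_paths]
    return "\n".join(lines)


# Crawlers that ignore the * group and must be named explicitly.
ADSBOT_AGENTS = ["AdsBot-Google", "AdsBot-Google-Mobile"]

# Hypothetical path to keep every crawler out of.
blocked_paths = ["/no-crawl/"]

robots_txt = "\n\n".join([
    render_group(["*"], blocked_paths),
    render_group(ADSBOT_AGENTS, blocked_paths),
]) + "\n"

print(robots_txt)
# User-agent: *
# Disallow: /no-crawl/
#
# User-agent: AdsBot-Google
# User-agent: AdsBot-Google-Mobile
# Disallow: /no-crawl/
```

Generating both groups from the same list means a path added later can’t be forgotten in one of them.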

3. Upload the file

Upload your robots.txt file into your website's root directory. This process depends on your web development platform.

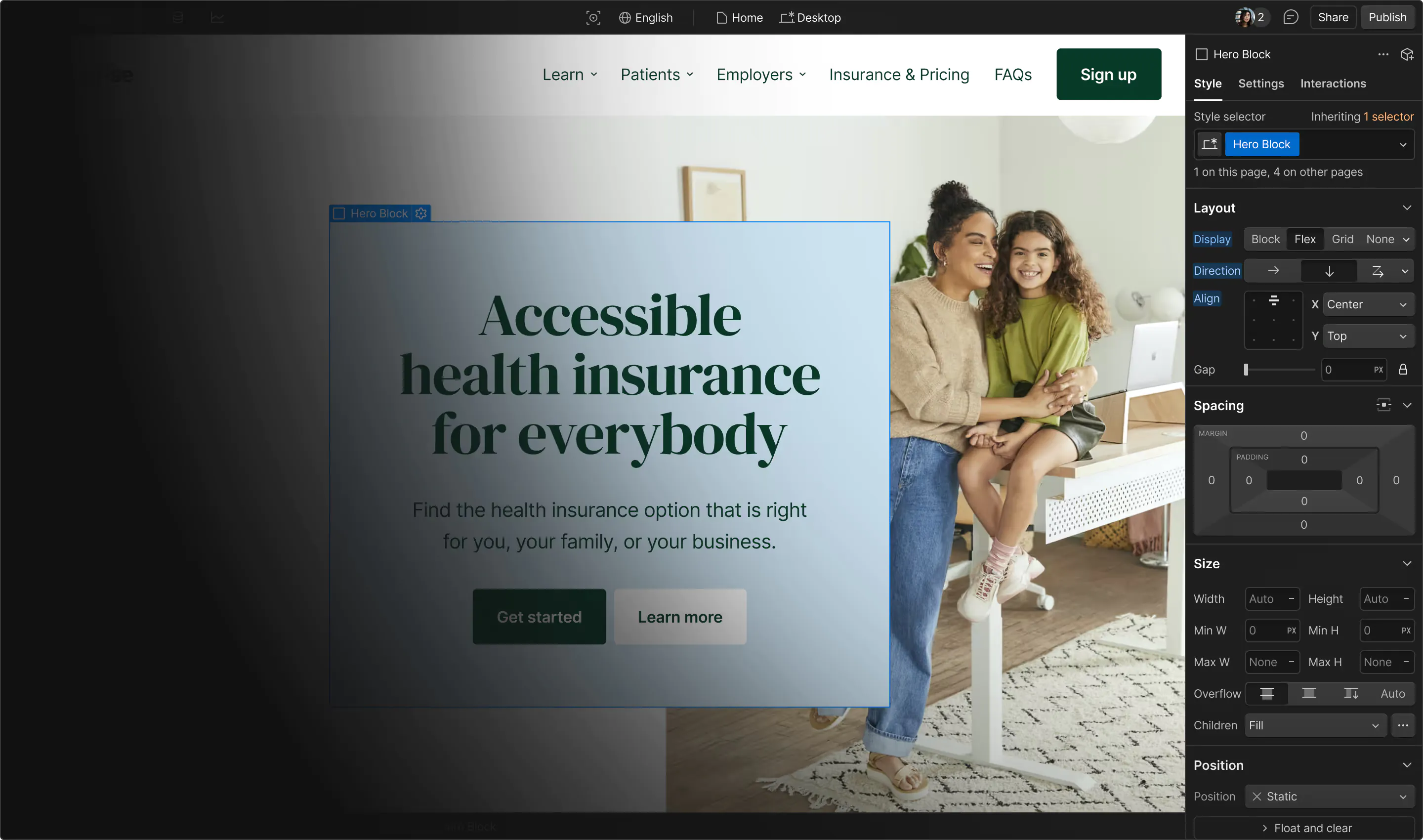

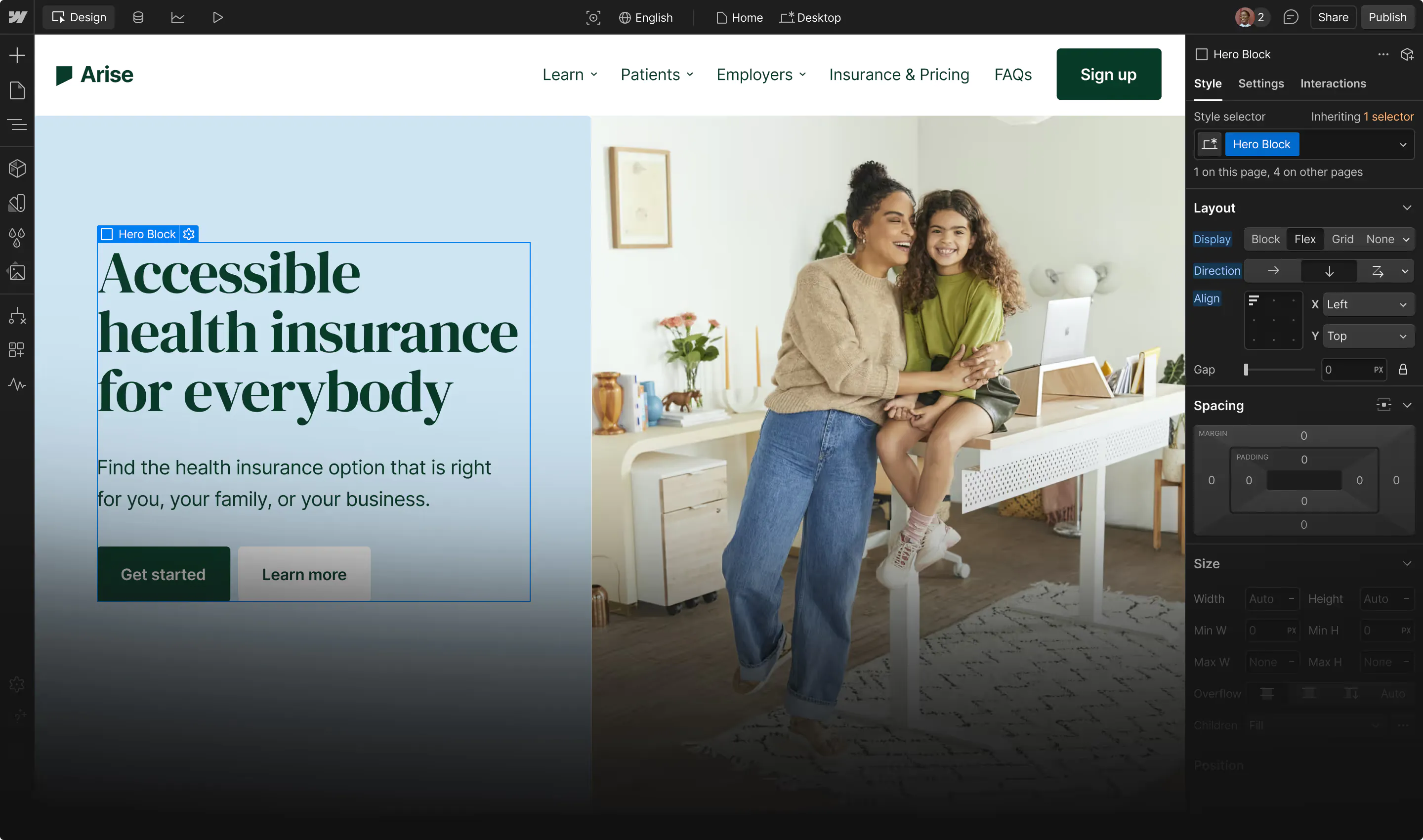

When you create a site in Webflow, it automatically generates a robots.txt file for that site. You can then add your robots.txt rules and sitemap to the file on the SEO tab under Site settings.

4. Test the file

To check whether your file is live, navigate to its URL in a browser window.
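
The same check can be scripted. This is a rough sketch using only Python’s standard library, with www.example.com as a placeholder domain. The robots_url and fetch_robots_txt names are hypothetical helpers, and the fetch is left as a commented-out call because it needs network access to your real site.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_url(site_url):
    """Return the root-level robots.txt URL for any page on the site."""
    return urljoin(site_url, "/robots.txt")


def fetch_robots_txt(site_url, timeout=10):
    """Download robots.txt and return (HTTP status, file contents).

    urlopen raises urllib.error.HTTPError if the file is missing (for example, a 404).
    """
    with urlopen(robots_url(site_url), timeout=timeout) as response:
        return response.status, response.read().decode("utf-8", errors="replace")


# The file always lives at the root, no matter which page you start from.
print(robots_url("https://www.example.com/blog/post"))
# prints: https://www.example.com/robots.txt

# Example call (requires network access, so it is not run here):
# status, contents = fetch_robots_txt("https://www.example.com")
```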

When you’re ready to test whether the file works, use search engine tools like Google Search Console, which report how crawlers handled your site. Review those reports to confirm the bots skipped the pages you excluded.

Limitations of a robots.txt file

Your robots.txt file will give you some control over how bots crawl your company’s site, but it does suffer from a few limitations. Here are the ones you’ll need to remember while writing rules for your file.

Not all crawlers are good

Bad actors create bots that ignore the robots.txt file so they can scrape your site for data or search for security vulnerabilities. Since these “bad bots” use the same processes as the good ones, robots.txt alone can’t stop them, and it’s hard to block them by other means without also hindering the good crawlers.

Specific bot rules override general rules

If a bot finds a group that names it specifically, it follows only that group and ignores the general (*) group. That means you’ll need to repeat any general rules that should also apply to that bot.

For example, with the following groups in your robots.txt file, Bingbot won’t crawl an HTML file called “no-bing.html,” but it will crawl the /no-crawl/ directory.

User-agent: *

Disallow: /no-crawl/

User-agent: Bingbot

Disallow: /no-bing.html

To fix that, add a new “Disallow” rule to the Bingbot group like so:

User-agent: Bingbot

Disallow: /no-crawl/

Disallow: /no-bing.html
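
You can reproduce this behavior with Python’s standard-library urllib.robotparser, which also picks the group that names a crawler over the asterisk group. This is a minimal sketch with placeholder www.example.com URLs, and build_parser is a hypothetical convenience function.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# The first version: Bingbot's own group lacks the /no-crawl/ rule.
ORIGINAL = """\
User-agent: *
Disallow: /no-crawl/

User-agent: Bingbot
Disallow: /no-bing.html
"""

# The fixed version: the general rule is repeated in Bingbot's group.
FIXED = """\
User-agent: *
Disallow: /no-crawl/

User-agent: Bingbot
Disallow: /no-crawl/
Disallow: /no-bing.html
"""

page = "https://www.example.com/no-crawl/page.html"  # placeholder URL

original = build_parser(ORIGINAL)
fixed = build_parser(FIXED)

print(original.can_fetch("Googlebot", page))  # prints: False (falls back to the * group)
print(original.can_fetch("Bingbot", page))    # prints: True (only its own group applies)
print(fixed.can_fetch("Bingbot", page))       # prints: False (rule repeated in its group)
```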

Robots.txt controls crawling, not indexing

Crawling is the process of discovering and understanding web pages, while indexing involves storing and organizing that content to make it searchable.

A robots.txt file prevents search engine crawlers from crawling certain pages, but it doesn’t affect whether they index them. Thankfully, there’s an easy fix: To keep a search engine from indexing your page, use a noindex robots tag.

For example, even if you block a page in your robots.txt file, search engines could still show that page in results if other pages link to it. But these platforms won’t have a description, image, or content to show in the result. They typically show just the URL or a title inferred from it. If you use noindex instead, the crawler reads the tag when it fetches the page, and the search engine leaves the page out of its search results.

Worth noting: Google will only be able to see the noindex tag if you remove the robots.txt rule so it can crawl the page.
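
The noindex robots tag is a meta element in the page’s head, written as `<meta name="robots" content="noindex">`. To confirm a page actually carries it, you could scan the HTML with a short script. The sketch below uses Python’s standard-library html.parser, and the RobotsMetaFinder class, has_noindex function, and sample pages are all hypothetical names made up for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Records whether the document contains a robots meta tag with noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        # The content attribute can hold several comma-separated directives.
        directives = [item.strip().lower() for item in attributes.get("content", "").split(",")]
        if "noindex" in directives:
            self.noindex = True


def has_noindex(html):
    """Return True if the HTML includes <meta name="robots" content="...noindex...">."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return finder.noindex


# Two made-up sample pages.
HIDDEN_PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
PUBLIC_PAGE = '<html><head><meta name="description" content="Hello"></head></html>'

print(has_noindex(HIDDEN_PAGE))  # prints: True
print(has_noindex(PUBLIC_PAGE))  # prints: False
```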

Disallowed files can still be indexed if referenced externally

A disallow rule stops compliant crawlers from fetching a page, but it doesn’t stop them from learning that the page exists. If a bot crawls another website that links to a page on your site, the search engine can still index that URL without crawling it, even if you set a disallow rule for it. As mentioned above, you'll have to place a noindex robots tag in the page's metadata, and let crawlers fetch the page so they can read the tag, to avoid this.

Optimizing robots.txt files with Webflow

A fine-tuned robots.txt file gives you more control over how search engine bots crawl your site, which helps you optimize your site’s performance and SEO. While the file itself is fairly lightweight, a lot of planning goes into its creation to ensure you’re excluding the correct files, creating consistent rules, and identifying the proper user agents.

With Webflow, you can create a robots.txt file for your site and use our built-in SEO tools to manage your website’s visibility in search engines. Use these features to establish a consistent, far-reaching web presence that drives traffic.

Get started with Webflow today to start taking advantage of these benefits.
