Discover how web crawler optimization can lead to increased site traffic.

Search engines like Google and Bing use web crawlers — or spiders — to discover and index web pages on the internet. Ensuring your content is accessible and crawlable helps improve your rankings in search engine results pages (SERPs).

The higher your site ranks, the more visible it becomes, leading to increased traffic and more conversions. Read on to discover how to strategically optimize your content for crawlers.

What’s website crawling?

A web crawler is an automated program that browses, or crawls, the web, recording all the URLs it finds and downloading site content — including images, text, and videos. This process involves loading web pages, finding links on these pages, and following them. It continues this process until it can’t find any new links.
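The loop described above (load a page, find its links, follow the new ones, stop when nothing new turns up) can be sketched in a few lines of Python. This is a minimal illustration, not how any particular search engine builds its crawler: the `SITE` dictionary is a hypothetical in-memory stand-in for fetching pages over the network, and `crawl` is an illustrative name.

```python
from collections import deque

# Hypothetical site: each URL maps to the links found on that page.
SITE = {
    "/": ["/blog", "/pricing"],
    "/blog": ["/blog/post-1", "/"],
    "/blog/post-1": ["/pricing"],
    "/pricing": [],
}


def crawl(start, get_links):
    """Visit pages breadth-first, following links until none are new."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)              # stands in for downloading the page
        for link in get_links(url):    # find the links on this page
            if link not in seen:       # only queue URLs not seen before
                seen.add(link)
                queue.append(link)
    return order


visited = crawl("/", lambda url: SITE.get(url, []))
print(visited)
```

The `seen` set is what lets the crawl terminate: pages that link back to each other are only queued once.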

Search engines like Google use these crawlers to retrieve information. After retrieval comes indexing: crawlers place the content in the search engine’s content database, known as an index, which categorizes each page by attributes such as language, content topics, and format.

When a user performs a search, the search engine returns the most relevant pages from its index to match the query. It uses complex algorithms to rank these pages based on factors like content quality and relevancy.
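To make the idea of an index concrete, here is a toy sketch of indexing and lookup. It assumes a tiny set of hypothetical pages and ranks results only by how many query words each page contains. Real search engines use far more complex, proprietary ranking signals, so treat this as an illustration of the concept rather than of any actual algorithm.

```python
from collections import defaultdict

# Hypothetical crawled pages: URL -> page text.
PAGES = {
    "/crawling": "web crawlers discover pages and follow links",
    "/scraping": "web scrapers extract specific fields from pages",
    "/sitemaps": "a sitemap lists pages for crawlers",
}


def build_index(pages):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def search(index, query):
    """Return URLs sorted by how many query words they contain."""
    scores = defaultdict(int)
    for word in query.lower().split():
        for url in index.get(word, ()):
            scores[url] += 1
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda url: (-scores[url], url))


index = build_index(PAGES)
results = search(index, "crawlers follow links")
print(results)
```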

There are two types of crawls:

- Site crawls occur when a crawling bot searches your site for URLs. This type typically occurs when you send a sitemap to a search engine provider to request a crawl.

- Page crawls occur when a crawler is directed to open just one web page. This crawl updates the index for that page.

Be it a site or page crawl, the entire process follows these steps:

- Crawl request — Typically, crawlers crawl a site for a routine check-up or because the site’s owner submitted or updated their sitemap.

- Discovery — The crawler gets to work, opening pages, discovering links, and adding them to the queue.

- Add to index — As the crawler works through each link in its queue, it determines which pages it should add to the search engine's index. Then, it downloads those pages and adds them.

- Indexing — Search engines scan each page and note essential details, such as keywords, synonyms, and important phrases.

- Ranking — Search engines then use a proprietary algorithm to determine each page’s quality and authority. When a searcher enters a query, the engine delivers the most relevant results for that query.

Web crawling vs. web scraping

Web crawlers move through your website to discover new URLs and download content, while web scrapers comb through a list of URLs to extract specific information.

The main differentiator between the two processes is intention. Web crawlers don't know what they'll find when they visit your site — they simply crawl through it and send information to indexing. But web scrapers extract data from your site based on predetermined fields such as "Publish-date," "Product-name," or "Price."

Typically, if someone scrapes a website, it's to pull information they already know exists. For example, you might scrape your site for mentions of a specific feature. If the feature changes, you can use this information to determine which pages to update.
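A scraper's reliance on predetermined fields can be sketched with Python's built-in `html.parser` module. The page markup, the class names, and the `FieldScraper` class below are all assumptions made for illustration. A real scraper would target whatever markup the site actually uses.

```python
from html.parser import HTMLParser

# Hypothetical product page; the class names are assumptions.
HTML = """
<div class="product">
  <h1 class="product-name">Trail Backpack</h1>
  <span class="price">$89.00</span>
  <time class="publish-date">2024-03-01</time>
</div>
"""

# The predetermined fields the scraper looks for.
FIELDS = {"product-name", "price", "publish-date"}


class FieldScraper(HTMLParser):
    """Collect the text of elements whose class is a wanted field."""

    def __init__(self):
        super().__init__()
        self.active_field = None   # field currently being read, if any
        self.record = {}

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if css_class in FIELDS:
            self.active_field = css_class

    def handle_data(self, text):
        # Store the first non-empty text that follows a wanted element.
        if self.active_field and text.strip():
            self.record[self.active_field] = text.strip()
            self.active_field = None


scraper = FieldScraper()
scraper.feed(HTML)
print(scraper.record)
```

Unlike the crawler, this code ignores everything on the page except the three fields it was told to find.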

Why should you optimize your content for web crawlers?

Accurate crawling and indexing provide search engine users with a better browsing experience. Effective crawling also offers your team the following benefits.

Better visibility

According to 2019 BrightEdge research, search engines drive 53.3% of all website traffic. Web crawlers give search engines the information they need to drive a significant segment of your audience to your site. Offering clear sitemaps and including high-quality, relevant content helps you persuade crawlers and index algorithms to rank your content higher in SERPs.

A reward for freshness

Web crawlers detect how often you update your site to determine when to return to scan again. As you add fresh content, try different content types and formats to see which rank better. You can monitor this ranking progress with Google Search Console.

How to improve crawling and indexing: 5 tips

Here are five tips for effectively optimizing your site to improve crawling and indexing.

1. Optimize your page loading speed

Since bots rapidly churn through all the content on your site, they require more of your web server's processing power while active. Optimizing loading speed decreases the time it takes to crawl through your site, reducing server load.

Streamlining your code, limiting embedded content, and reducing third-party scripts and image sizes are all great ways to improve loading times.

2. Use a sitemap

A sitemap showcases all pages and media on your site, making it easier for bots to discover everything present. This file lives in your site's root directory, can be written in XML, RSS, or plain text, and must follow the universal sitemap protocol. Web developers and designers can create this themselves or use a platform like Webflow, which autogenerates sitemaps.
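If you create the file yourself, a minimal XML sitemap can be generated with Python's standard library. The sketch below assumes placeholder `example.com` URLs and includes only the required `<loc>` element for each page. `build_sitemap` is an illustrative helper, not part of any platform's API.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# The example.com URLs are placeholders.
sitemap_xml = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/blog",
])
print(sitemap_xml)
```

The resulting string would typically be saved as `sitemap.xml`, usually with an XML declaration added at the top.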

3. Optimize your internal link structure

Organize your site into a logical structure that cascades outward from your home page. That means your home page should link to primary topics that link to individual pages. Those pages should also link to each other when appropriate. These links make your site easier for users and bots to navigate, and ease of navigation for both is a critical factor in determining your search ranking.

Also check that none of your pages include dead or broken links, as 404 errors dock your ranking. To prevent these errors, perform comprehensive tests before you launch your site and throughout its lifecycle.
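One simple way to test for dead internal links is to compare every link on your site against the list of pages that actually exist. The sketch below assumes a hypothetical in-memory map of pages to their internal links. A real check would instead request each URL and look for a 404 response.

```python
# Hypothetical site map: each URL maps to the internal links on that page.
SITE_LINKS = {
    "/": ["/features", "/blog"],
    "/features": ["/pricing"],          # "/pricing" does not exist
    "/blog": ["/", "/blog/old-post"],   # "/blog/old-post" was deleted
}


def find_broken_links(site_links):
    """Return (source page, missing target) pairs for dead internal links."""
    broken = []
    for page, links in site_links.items():
        for target in links:
            if target not in site_links:
                broken.append((page, target))
    return broken


broken_links = find_broken_links(SITE_LINKS)
for page, target in broken_links:
    print(f"{page} links to missing page {target}")
```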

4. Use correct canonical tags

Canonicalization is the process search engines use to determine which page is the primary (or "canonical") authority. For example, you may have different versions of a page to target various geo-locations — each with a separate URL. Bots need to know which page is the central source for the content so they don't duplicate search results. To accomplish this, they analyze each page version to determine the duplicates.

To help search engines determine which page is canonical, place only the canonical page in your sitemap, and add a <link> element with a rel="canonical" attribute to each duplicate page, pointing to the canonical page.
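To verify that a duplicate page declares its canonical URL, you can parse the page and read the tag back. The sketch below uses Python's built-in `html.parser`. The page markup, the `example.com` URL, and the `CanonicalFinder` class are assumptions for illustration.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower()
        if tag == "link" and rel == "canonical" and self.canonical is None:
            self.canonical = attributes.get("href")


# Hypothetical duplicate page pointing at its canonical version.
DUPLICATE_PAGE = """
<html><head>
  <title>Pricing (UK)</title>
  <link rel="canonical" href="https://www.example.com/pricing">
</head><body>...</body></html>
"""

finder = CanonicalFinder()
finder.feed(DUPLICATE_PAGE)
print(finder.canonical)
```

If `finder.canonical` comes back as `None`, the page has no canonical tag and search engines are left to pick the primary version on their own.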

5. Ensure all pages are crawlable

You can use Google Search Console to determine how much of your site crawlers have indexed — and how accurately. You have room to grow if they indexed anything less than 85% of your site.
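The 85% guideline above is simple arithmetic once you have two lists: the URLs you submitted and the URLs reported as indexed. The sketch below assumes you have exported both lists by hand. The data is hypothetical, and `index_coverage` is an illustrative helper, not a Search Console API.

```python
def index_coverage(sitemap_urls, indexed_urls):
    """Return the share of sitemap URLs that appear in the indexed set."""
    sitemap = set(sitemap_urls)
    if not sitemap:
        return 0.0
    return len(sitemap & set(indexed_urls)) / len(sitemap)


# Hypothetical data: URLs you submitted vs. URLs reported as indexed.
submitted = ["/", "/blog", "/pricing", "/about", "/contact"]
indexed = ["/", "/blog", "/pricing", "/about"]

coverage = index_coverage(submitted, indexed)
missing = sorted(set(submitted) - set(indexed))
print(f"{coverage:.0%} indexed; missing: {missing}")
if coverage < 0.85:  # the threshold suggested in the text above
    print("Room to grow: investigate the missing pages.")
```

Listing the missing URLs alongside the percentage shows you which pages to investigate first.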

There are some impressive SEO tools on the market that can audit your site for you, like Screaming Frog and Semrush. They’ll also offer tips for improving your sitemap, content, and SEO.

How to manage your crawl budget

A crawl budget is the amount of time and resources search engines commit to crawling your site. Crawling millions of websites costs search engines processing capacity and storage, and the crawl budget determines how much of these resources they dedicate to a given website.

You want search engines to crawl your site often to pick up new pages as you publish them, but you don’t want them to slow down your site. To balance these conflicting goals, optimize your site to earn a high crawl demand while keeping your crawl rate limit feasible:

- Crawl demand — This demand determines how often search engines should crawl your site. If your content is popular and you regularly update it, you’ll earn a high crawl demand.

- Crawl rate limit — Crawlers measure how long it takes to load and download pages so search engines can calculate the cost of each crawl. The faster your site responds, the more generous your rate limit is.

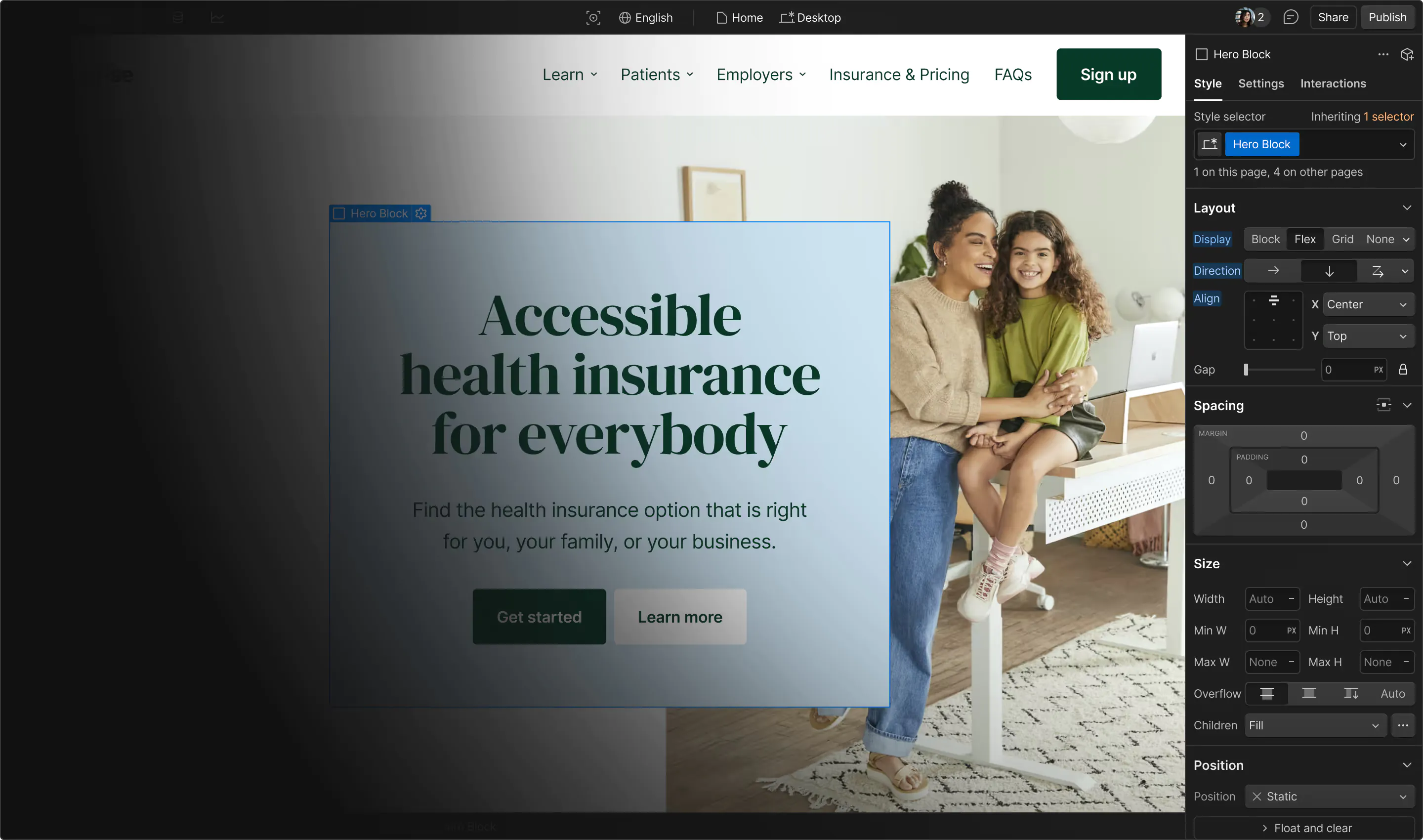

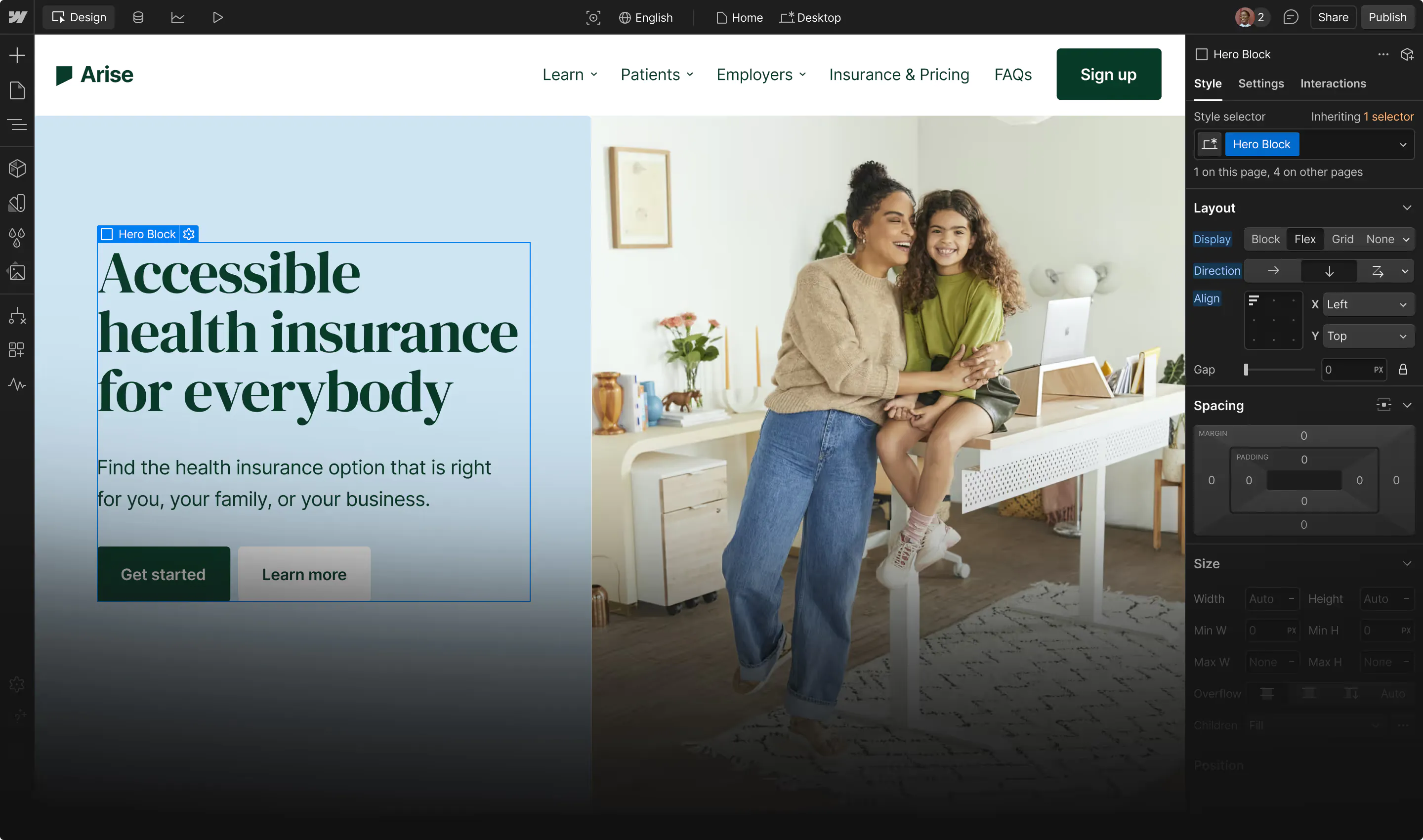

Enjoy crawler-friendly features from Webflow

Web crawler optimization is key to ensuring your site reaches its full organic traffic potential, and that takes clean, semantic code and a clear site and link structure. Webflow’s visual development platform takes care of that, while giving you the tools to fine-tune your SEO and create content that ranks.

Try Webflow’s visual-first development environment today.
