The best way to validate your brilliant ideas is with a controlled experiment.

If you’re a designer or marketer looking to increase engagement, testing your ideas is essential. A/B testing gives you the data-driven insights you need to confidently optimize your app, website, or ad campaigns. By comparing variations, you can validate which changes drive real results, avoiding costly missteps.

In this guide, we’ll walk you through performing an A/B test and share best practices to ensure your experiments lead to meaningful improvements.

What’s A/B testing?

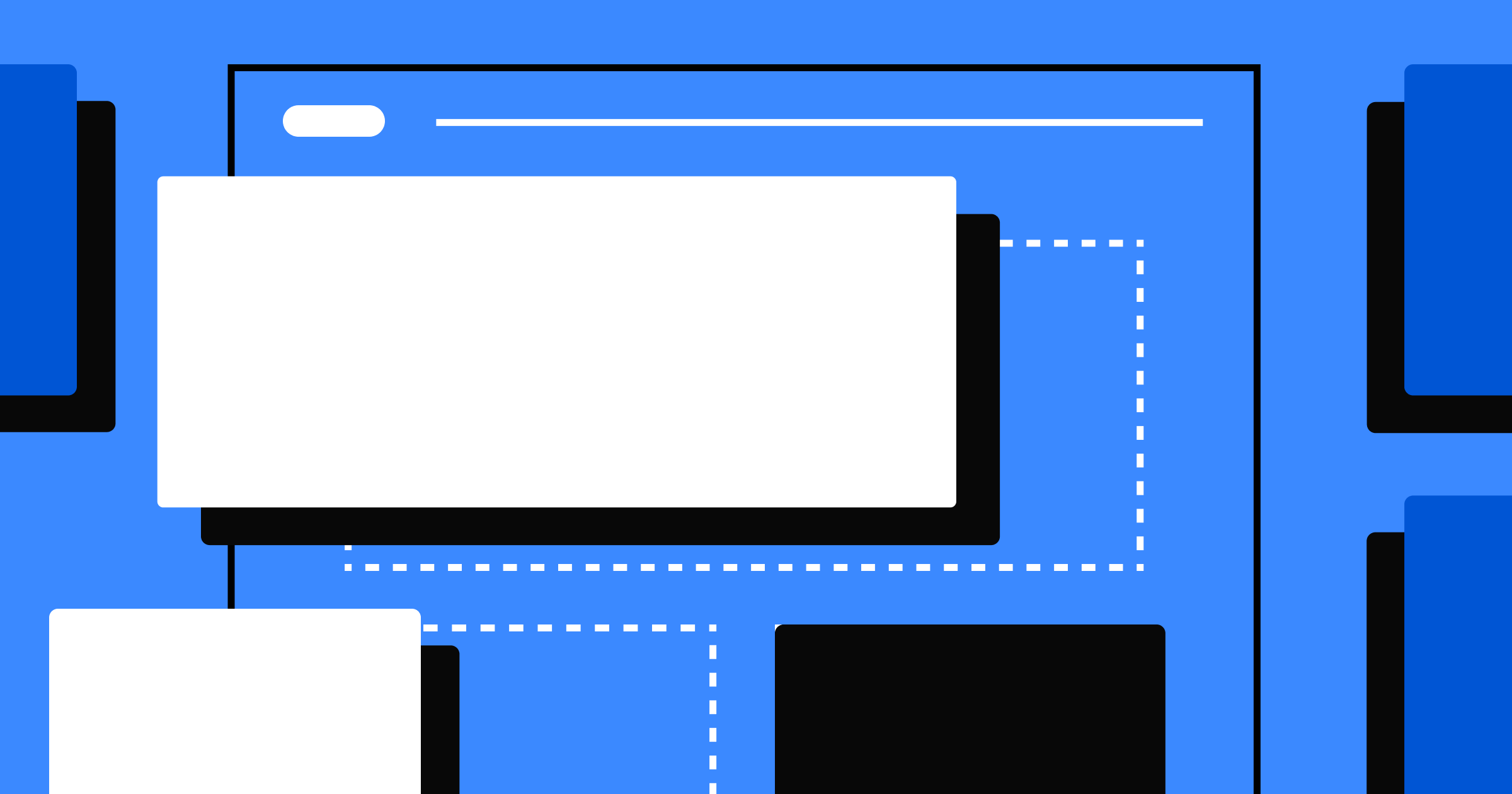

A/B testing, or split testing, involves randomly dividing your audience into two groups and showing each group a different version of a product to see which performs better. It’s a controlled experiment most often used for web design, but it also applies to email campaigns, advertisements, and mobile apps. Whichever medium you’re evaluating, the goal is usually the same: to find the version with the higher conversion rate.

During an A/B test, each user interacts with one version of the product while you observe and gather data on their experience. Metrics could be anything from how long they stayed on a web page to which calls to action (CTAs) they clicked.

These data points feed the key performance indicators (KPIs) that show how engaging each version is. Designers and marketers then use that information to determine which design is likely to lead to more conversions.
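To make the two-group split concrete, here’s a minimal Python sketch of one common way to divide traffic: hashing a stable user ID so each visitor is assigned to A or B effectively at random, but always to the same version on repeat visits. The experiment name, user IDs, and 50/50 split are illustrative assumptions, not a description of how any particular testing tool works internally.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split_b: float = 0.5) -> str:
    """Return "A" or "B" for this user in this experiment.

    Hashing the experiment name plus the user ID gives a stable, roughly
    uniform number, so the same user always sees the same version.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 6 bytes (48 bits) of the hash to a float in [0, 1).
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "B" if bucket < split_b else "A"


if __name__ == "__main__":
    # Hypothetical user IDs and experiment name, for illustration only.
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "homepage-cta")] += 1
    print(counts)  # both counts should land close to 5,000
```

Including the experiment name in the hash means the same user can land in different groups across different experiments, which keeps one test’s split from biasing another.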

When should you run A/B tests?

An A/B test is useful whenever you need to determine whether a design change would make a product or website more effective. A/B tests apply to many mediums, such as:

- Website pages — Even subtle changes like making a button more prominent or adding a new feature highlight can improve a page’s engagement and conversion rates.

- Mobile apps — Changing an app’s layout, branding, and functions can improve or degrade usability. A/B tests help designers measure those changes with real-world users.

- Advertisements — Split testing your advertisements can help you compare results to find the features, designs, and taglines that are most interesting to potential customers.

- Emails — Email campaigns often fail or succeed based on minute details. A/B tests can reveal and compare important distinctions, such as the most effective subject lines and CTAs.

Multivariate testing vs. A/B testing

The primary difference between A/B and multivariate testing is the number of variables you test. In a traditional A/B test, you change one variable at a time to discover if the new version works better than the original. That variable might be a subject line, a button’s color, or an advertisement’s wording.

In a multivariate test, you change multiple variables at once and compare combinations of those changes to see how they affect results together. For example, marketers may want to test whether pivoting a web ad toward a new audience is a good idea. To do that, they can test different images and taglines in combination while keeping the rest of the material the same.
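The practical cost of a multivariate test is that every combination becomes its own variant, so your traffic is split more thinly. This Python sketch uses `itertools.product` to enumerate a full-factorial test; the images and taglines are made-up placeholders.

```python
from itertools import product

# Hypothetical options for each variable being tested.
variables = {
    "image": ["current photo", "new-audience photo"],
    "tagline": ["current tagline", "new tagline A", "new tagline B"],
}

# In a full-factorial test, every combination of options is a separate variant.
combinations = [dict(zip(variables, combo)) for combo in product(*variables.values())]

for i, combo in enumerate(combinations, start=1):
    print(i, combo)

print(f"{len(combinations)} variants to split traffic across")  # 2 x 3 = 6
```

With the same total traffic, each of six variants receives about a sixth of your visitors instead of half, which is why multivariate tests generally need more traffic than A/B tests to reach reliable results.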

How to perform an A/B test in 7 steps

How you perform an A/B test depends on the medium you’re testing. However, there are a few essential steps you must include in every test to form hypotheses, design tests, and measure results. Explore these seven fundamentals of A/B testing to get started.

1. Define objectives

First, decide what you want to accomplish with your test and identify the KPIs you’ll use to measure success. For example, if your goal is to increase an email campaign’s conversion rate, track that rate and also set goals for micro-conversions like open rate and click-through rate (CTR). These intermediate metrics show where readers drop out of the user journey.
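For an email campaign, these micro-conversions form a funnel, and comparing each step’s rate shows where readers drop out. This Python sketch uses invented counts purely for illustration. It assumes each rate is measured against the previous step, so “clicked” here means clicks per open; many email tools instead report CTR as clicks per delivered email.

```python
# Hypothetical counts for one email send; replace them with your own numbers.
funnel = [
    ("delivered", 10_000),
    ("opened", 2_500),
    ("clicked", 300),
    ("converted", 45),
]


def step_rates(steps):
    """Return (step name, rate relative to the previous step) pairs."""
    rates = []
    for (_, prev_count), (name, count) in zip(steps, steps[1:]):
        rates.append((name, count / prev_count if prev_count else 0.0))
    return rates


for name, rate in step_rates(funnel):
    print(f"{name:>10}: {rate:.1%} of the previous step")

# The overall conversion rate is measured against every delivered email.
overall = funnel[-1][1] / funnel[0][1]
print(f"overall conversion rate: {overall:.2%}")
```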

2. Gather data

Assemble relevant data points about how well your current content is achieving objectives. Include conversion rates, open rates, CTRs, and anything relevant to your objectives. This data creates a benchmark for comparison. If you don’t already have user data, leverage analytics tools like Google Analytics or Webflow Analyze.
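A benchmark is itself an estimate, so it helps to know how precise it is before you compare variants against it. This Python sketch computes a baseline conversion rate with a 95% Wilson score interval, which is one standard choice among several interval methods. The visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes: int, trials: int, confidence: float = 0.95):
    """Wilson score interval for a proportion such as a conversion rate."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half, center + half


# Hypothetical: last month's traffic to the page you plan to test.
visitors, conversions = 8_000, 240
low, high = wilson_interval(conversions, visitors)
print(f"baseline conversion rate: {conversions / visitors:.2%} "
      f"(95% CI {low:.2%} to {high:.2%})")
```

A narrow interval means your benchmark is stable; a wide one suggests collecting more historical data before setting goals against it.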

3. Formulate testing hypotheses

Now that you have established objectives and metrics for achieving them, form a hypothesis that clearly describes the change you intend to make and what you believe the result will be.

Here’s a basic template you can use:

“If we change ____ to ____, our ____ rate will increase by __%.”

Here are a few example A/B test hypotheses:

- If we change the CTA button color to orange, our CTR will increase by 3%.

- If we change the Home screen to include suggestions, our average session time will increase by 10%.

- If we change the subject line to be more concise, our open rate will increase by 5%.
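The “__%” in the template can be read two ways: as a relative lift (3% more than the current rate) or as an absolute lift in percentage points. It’s worth pinning down which one your hypothesis means. This small Python sketch, using a hypothetical 4% baseline CTR, shows how different the two targets are.

```python
def relative_target(baseline: float, lift_pct: float) -> float:
    """Target rate if lift_pct is a relative increase (e.g. 3% of the baseline)."""
    return baseline * (1 + lift_pct / 100)


def absolute_target(baseline: float, lift_points: float) -> float:
    """Target rate if the lift is measured in percentage points."""
    return baseline + lift_points / 100


baseline_ctr = 0.04  # hypothetical current CTR of 4%

print(f"relative: {relative_target(baseline_ctr, 3):.2%}")  # 4.12%
print(f"absolute: {absolute_target(baseline_ctr, 3):.2%}")  # 7.00%
```

A 3% relative lift on a 4% CTR is a much smaller change than three percentage points, and smaller changes need far more traffic to detect.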

4. Develop variations

Create the variations you intend to test. Then, run them by peers or colleagues in a mock A/B test to get input. Invite people to point out ways these variations could better suit the hypothesis. It’s always best to get another perspective on your work and refine it before spending time and effort conducting a true test.

5. Conduct the experiment

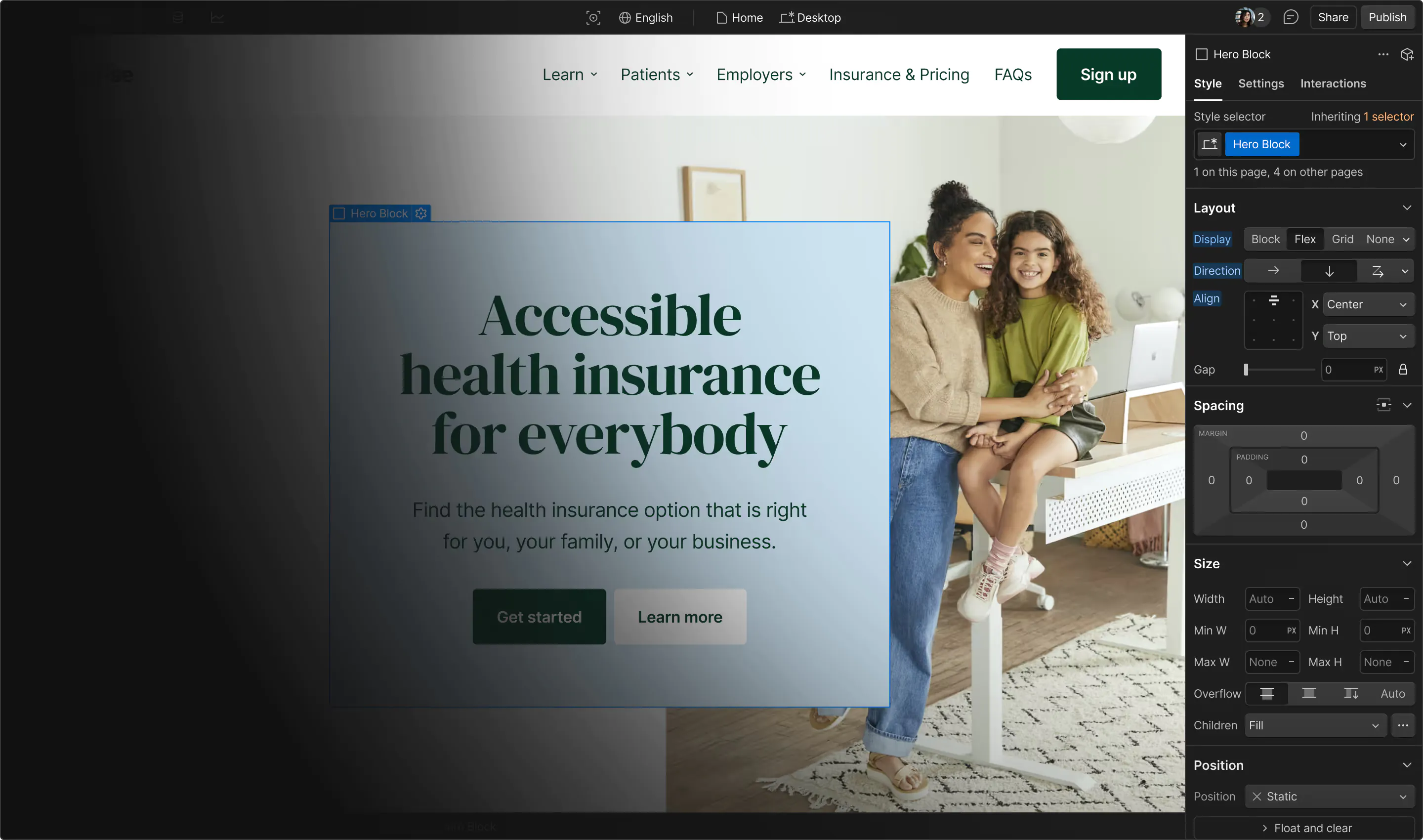

For websites and mobile apps, pick an A/B testing tool like Webflow Optimize to run your experiments. These tools help you decide how to segment users, and some offer additional analytics like heatmaps and surveys to help you surface insights about each version. You can use this extra data to supplement your existing analytics.

When you’re shopping around for A/B testing software, check how each tool handles the following SEO best practices:

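- Cloaking — Cloaking means showing search engines different content than human visitors see, which search engines treat as a violation of their guidelines. A tool’s audience segmentation should treat crawlers the same way it treats users rather than serving them a special version.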

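- 302 redirects — If a test redirects visitors from the original URL to a variant URL, it should use a 302 (temporary) redirect rather than a 301 (permanent) one. A 302 tells search engines the redirect is temporary, so they keep the original URL in their index.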

- Canonical tags — Each variant page should include a canonical link tag (rel="canonical") pointing to the original URL, so search engines know which version is the source of truth.
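To show what these three practices look like in an actual server response, here’s a minimal sketch using Python’s standard-library WSGI interface. It assumes a hypothetical original page at `/pricing`, a variant at `/pricing-b`, the domain `https://example.com`, and a hypothetical `vid` cookie holding a visitor ID. Testing tools handle this for you, and their implementations will differ.

```python
import hashlib
from http.cookies import SimpleCookie

# Hypothetical URLs for the original page and its variant.
ORIGINAL_URL = "https://example.com/pricing"
VARIANT_PATH = "/pricing-b"


def in_variant_b(visitor_id: str) -> bool:
    # Stable 50/50 split on a visitor ID. The User-Agent is never consulted,
    # so search engine crawlers are bucketed exactly like people (no cloaking).
    return hashlib.sha256(visitor_id.encode("utf-8")).digest()[0] < 128


def app(environ, start_response):
    path = environ.get("PATH_INFO", "/")
    cookie = SimpleCookie(environ.get("HTTP_COOKIE", ""))
    # "vid" is a hypothetical cookie name holding a visitor ID.
    visitor_id = cookie["vid"].value if "vid" in cookie else "anonymous"

    if path == "/pricing" and in_variant_b(visitor_id):
        # 302 (temporary), not 301, so search engines keep the original URL indexed.
        start_response("302 Found", [("Location", VARIANT_PATH)])
        return [b""]

    if path in ("/pricing", VARIANT_PATH):
        # Both versions point search engines at the original page as canonical.
        html = (
            "<html><head>"
            f'<link rel="canonical" href="{ORIGINAL_URL}">'
            "</head><body>Pricing</body></html>"
        )
        start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
        return [html.encode("utf-8")]

    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found"]
```

To try it locally, you could serve `app` with the standard library’s `wsgiref.simple_server.make_server("localhost", 8000, app).serve_forever()`.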

If you’re A/B testing a digital marketing or email campaign, your platform or email service likely supports A/B testing already. Mailchimp, for example, offers robust A/B testing tools that let you try different email versions, and Meta provides built-in A/B testing to evaluate social media advertisements.

6. Gather results

Allow your A/B experiment to run long enough to gather sufficient data for statistically significant results. One rule of thumb is to let a test reach at least 20% of your monthly traffic before wrapping it up, but treat that as a rough starting point: the sample you actually need depends on your baseline conversion rate and the smallest improvement you want to detect. Decide on a sample size before you start, and avoid stopping early just because one version pulls ahead.
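How much traffic is “enough” can be estimated up front. As a sketch, here’s the standard normal-approximation sample-size formula for comparing two conversion rates, written in Python. The 5% significance level, 80% power, and example rates are assumptions you should adjust to your own situation.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(p_a: float, p_b: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH group to detect a change from p_a to p_b
    with a two-sided two-proportion z-test (normal approximation)."""
    if p_a == p_b:
        raise ValueError("p_a and p_b must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_a + p_b) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p_a * (1 - p_a) + p_b * (1 - p_b))) ** 2
    return ceil(numerator / (p_a - p_b) ** 2)


# Hypothetical: 10% baseline conversion rate, hoping to detect a lift to 12%.
n = sample_size_per_variant(0.10, 0.12)
print(f"about {n:,} visitors per variant, {2 * n:,} in total")
```

Dividing the total by your typical daily traffic gives a rough minimum test duration. Halving the detectable lift roughly quadruples the required sample.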

7. Evaluate findings

Refer to the objectives and hypothesis you formulated at the beginning and check your results to see if you hit your goals. Don’t worry if it turns out that your hypothesis was incorrect — even a negative result is valuable information because it helps eliminate potential changes that wouldn’t ultimately be effective.

According to Optimizely’s analysis of 127,000 experiments, only about 12% of design changes make a positive difference. That low hit rate is exactly why iterative testing is worthwhile: it’s how you find the few changes that actually move your metrics.
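To check whether a difference between variants is likely real rather than noise, a classic approach is the two-proportion z-test. Here’s a minimal Python version with invented visitor and conversion counts. Commercial platforms may use other methods, such as sequential or Bayesian statistics, so treat this as an illustration of the idea rather than how any specific tool computes significance.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (absolute lift, z statistic, two-sided p-value) for B vs. A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("need at least one conversion and one non-conversion")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, z, p_value


# Hypothetical results after the test has reached its planned sample size.
lift, z, p = two_proportion_z_test(conv_a=400, n_a=4_000, conv_b=470, n_b=4_000)
print(f"lift: {lift:+.2%}, z = {z:.2f}, p = {p:.4f}")
print("significant at the 5% level" if p < 0.05 else "not significant at the 5% level")
```

Run a check like this once, at the sample size you planned. Checking repeatedly and stopping at the first significant result inflates the chance of a false positive.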

How to interpret A/B test results

When measuring the efficacy of variations, keep the following metrics in mind:

- Sales — For ecommerce sites, sales are usually the most important metric to increase because they’re the main source of revenue. If a change causes your sales to grow, you’re on to something good.

- Subscriptions — Services like streaming sites and members-only blogs must drive more subscriptions to grow and maintain a foothold in their market, making subscriptions a key conversion metric.

- Clicks — A “click” can be anything from a CTA interaction to engagement with an interactive element. It’s a micro-conversion metric that measures how well your designs encourage users to interact with your content.

- Time spent — Whether you’re designing a mobile app or website, how long people use your product indicates how engaging it is. An increase usually suggests users see value in spending time on your product, though it’s worth confirming they aren’t simply struggling to find what they need.

- Number of visitors — Traffic is a crucial metric because it captures the number of impressions you’re making. As traffic increases, more people see your designs. The corresponding changes in other metrics, like clicks and sales, show whether those new visitors are satisfied.

- Open rates — An email campaign’s open rate is the percentage of recipients who opened your email. Because people decide whether to open mostly from the subject line (along with the sender name and preview text), it’s one of the few metrics with a fairly clear cause for ups and downs.

- Click-through rates — The CTR of an email, website, or ad measures the percentage of users who clicked a CTA to move further into your sales funnel. It’s another critical micro-conversion to examine when determining where you might be losing potential customers.

- Signups — People who sign up for your newsletter or register an account become new leads. If you’re generating more leads, that means you’re growing your audience.
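If your analytics tool lets you export raw events, you can compute several of these metrics per variant yourself. This Python sketch assumes a hypothetical event format of (visitor ID, variant, event type, seconds on page) and a tiny made-up dataset; real exports will look different and be far larger.

```python
from collections import defaultdict

# Hypothetical exported events: (visitor_id, variant, event, seconds_on_page)
events = [
    ("v1", "A", "view", 42), ("v1", "A", "click", 0),
    ("v2", "A", "view", 15),
    ("v3", "B", "view", 65), ("v3", "B", "click", 0), ("v3", "B", "signup", 0),
    ("v4", "B", "view", 30), ("v4", "B", "click", 0),
]


def summarize(events):
    """Per-variant visitors, click-through rate, signup rate, and avg. time spent."""
    visitors = defaultdict(set)
    clickers = defaultdict(set)
    signups = defaultdict(set)
    seconds = defaultdict(list)
    for visitor, variant, event, secs in events:
        visitors[variant].add(visitor)
        if event == "click":
            clickers[variant].add(visitor)  # count each visitor once
        elif event == "signup":
            signups[variant].add(visitor)
        elif event == "view":
            seconds[variant].append(secs)
    summary = {}
    for variant in sorted(visitors):
        n = len(visitors[variant])
        summary[variant] = {
            "visitors": n,
            "ctr": len(clickers[variant]) / n,
            "signup_rate": len(signups[variant]) / n,
            "avg_seconds": sum(seconds[variant]) / max(len(seconds[variant]), 1),
        }
    return summary


for variant, metrics in summarize(events).items():
    print(variant, metrics)
```

Counting unique visitors rather than raw click events keeps one enthusiastic user from inflating a variant’s CTR.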

Boost your website’s performance with Webflow

A/B testing is an incredible tool to optimize your website and convert more visitors into paying customers. This experimentation surfaces crucial insights about how design modifications can increase sales, subscribers, and traffic.

With Webflow, you can build and launch personalized experiences with native design and optimization features — all from one platform. Webflow Optimize, our AI-powered personalization and A/B testing solution, ensures that every visitor receives a tailored experience. Create and run multiple tests and personalization experiences simultaneously, all with a few clicks.

Try Webflow today and start building better user experiences.