Tracking real-time analytics helps you proactively respond to user interactions.

Market trends, design innovations, and cultural shifts constantly shape user behavior. To stay ahead of the competition and enhance the customer experience, you need to adapt quickly to these changes.

One of the most effective ways to iterate on your website as trends change is to use real-time data analytics tools. Unlike traditional analytics, which report on data after the fact, live data streams let you adjust strategies and designs to match current user behaviors and preferences. Combining business intelligence, real-time insights, and adaptive strategies helps you respond to these changes with precision and keeps your digital experiences impactful for customers.

What’s real-time data streaming, and why is it important?

Real-time data streaming is a continuous flow of data that is processed and analyzed as it becomes available. Unlike batch processing, where you collect data over time and process it as a group, data streaming tools transmit and process each new record with minimal delay.
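The difference between the two approaches is easiest to see side by side. The Python sketch below is a minimal illustration, not how any particular tool works internally: it assumes a hypothetical list of `(timestamp, page)` click events, and `batch_count` and `stream_count` are illustrative names. The batch version returns one result after all the data is collected, while the streaming version yields an updated result after every event.

```python
from typing import Iterable, Iterator

# Hypothetical click events for illustration: (timestamp_in_seconds, page)
EVENTS = [(0, "/home"), (1, "/pricing"), (2, "/home"), (3, "/docs")]


def batch_count(events: list[tuple[int, str]]) -> dict[str, int]:
    """Batch processing: wait until every event is collected, then count them as a group."""
    counts: dict[str, int] = {}
    for _, page in events:
        counts[page] = counts.get(page, 0) + 1
    return counts


def stream_count(events: Iterable[tuple[int, str]]) -> Iterator[dict[str, int]]:
    """Stream processing: update the counts as each event arrives and emit them right away."""
    counts: dict[str, int] = {}
    for _, page in events:
        counts[page] = counts.get(page, 0) + 1
        yield dict(counts)  # a fresh snapshot is available after every single event
```

Both functions end up with the same totals; the streaming version simply makes intermediate results available while data is still arriving, which is what lets teams react before the full dataset exists.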

You can use real-time streaming to monitor user behavior, system performance, and other data that requires urgent attention. This agile approach supports quick decision-making, allowing your development and design teams to adapt strategies based on the most recent information.

Here are a few benefits of real-time data streaming:

- Immediate trend insights — Batch processing delays can lead to outdated data and missed opportunities. Real-time analytics lets you react to trends quickly, which is critical in fast-changing sectors like news, finance, and marketing.

- Faster response times — With instant data processing, your teams can quickly address issues before they escalate.

- Predictive analytics — Live data reveals patterns in customer behavior, helping you anticipate demands, allocate resources effectively, and make well-informed product development decisions.

The makeup of real-time data architecture

Well-designed data architecture is crucial for extracting insights from rapidly changing data. A real-time architecture typically includes the following components to keep information flowing efficiently.

Compiling data from multiple sources

Gathering data from diverse sources, such as social media feeds, transaction logs, and app activity, forms the foundation of real-time data architecture. Combining different sources creates a complete dataset for better analysis.

For example, a SaaS website might compile data from website traffic, browsing activity, and purchase histories to build a holistic view of customer behavior.
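A minimal sketch of this step, assuming three hypothetical sources that each emit time-ordered `(timestamp, user_id, source, detail)` tuples: Python's standard `heapq.merge` interleaves them into one stream ordered by timestamp, and a hypothetical `build_profiles` helper groups that stream by user. Production pipelines would typically rely on connectors or a streaming platform for this, so treat the code as an illustration of the idea only.

```python
import heapq
from collections import defaultdict

# Hypothetical, already time-ordered sources: (timestamp, user_id, source, detail)
traffic = [(1, "u1", "traffic", "/home"), (4, "u2", "traffic", "/pricing")]
browsing = [(2, "u1", "browsing", "scrolled 80%"), (5, "u2", "browsing", "clicked demo")]
purchases = [(3, "u1", "purchase", "pro plan")]


def unify(*sources):
    """Interleave several time-ordered sources into a single stream ordered by timestamp."""
    return heapq.merge(*sources, key=lambda event: event[0])


def build_profiles(stream):
    """Group the unified stream into a per-user view of activity across all sources."""
    profiles = defaultdict(list)
    for _, user_id, source, detail in stream:
        profiles[user_id].append((source, detail))
    return dict(profiles)
```

Calling `build_profiles(unify(traffic, browsing, purchases))` returns one entry per user, with `u1`'s page view, scroll activity, and purchase listed in the order they happened. Because `heapq.merge` consumes its inputs lazily, the same approach also works when the sources are generators rather than lists.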

Setting up a stream processor

This architectural component processes and analyzes data as it arrives. It transforms incoming records and merges internal and external data, making the information immediately relevant and actionable.

You can use a stream processor to detect fraudulent activity, such as unauthorized access or a cyberattack, by analyzing current transaction data and flagging anything suspicious. This capability helps keep systems up to date and secure in a fast-paced or vulnerable data environment.
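As a rough illustration of how a stream processor can flag suspicious activity, here's a minimal rule-based sketch in Python. The thresholds, the `FraudFlagger` class, and its two rules are hypothetical; real fraud detection usually combines many more signals and often uses trained models. Each transaction is checked the moment it arrives against a sliding 60-second window of that account's recent activity.

```python
from collections import defaultdict, deque

# Hypothetical thresholds for illustration; real systems tune these or use learned models.
MAX_TXNS_PER_WINDOW = 3
WINDOW_SECONDS = 60
MAX_AMOUNT = 5_000


class FraudFlagger:
    """Flags suspicious transactions as they arrive, using simple illustrative rules."""

    def __init__(self):
        # Recent transaction timestamps per account, oldest first.
        self.recent = defaultdict(deque)

    def process(self, account, timestamp, amount):
        """Return the reasons this transaction looks suspicious (an empty list if none)."""
        window = self.recent[account]
        window.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while window and window[0] <= timestamp - WINDOW_SECONDS:
            window.popleft()

        reasons = []
        if len(window) > MAX_TXNS_PER_WINDOW:
            reasons.append("too many transactions in window")
        if amount > MAX_AMOUNT:
            reasons.append("unusually large amount")
        return reasons
```

For example, a fourth transaction on the same account within 60 seconds returns `["too many transactions in window"]`, and any transaction above the amount threshold is flagged regardless of timing. Keeping only a small per-account window in memory is what lets each check run immediately instead of waiting for a batch job.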

Running real-time queries

Querying involves processing data to extract meaningful, usable information. Real-time querying runs continuously against live data, so results update as new events arrive.

These queries can pinpoint trending topics or track key engagement metrics like ongoing user sessions, click counts, and scroll depth. The immediate data access speeds up decision-making and lets you dynamically respond to user interests and behaviors.
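A real-time "trending topics" query can be sketched as a sliding-window count that updates with every event. This is a minimal, single-process Python sketch that assumes a hypothetical `TrendingTopics` class and timestamps in seconds; streaming engines provide their own windowing features for doing this at scale.

```python
from collections import Counter, deque


class TrendingTopics:
    """Continuously answers 'which topics are trending?' over a sliding time window."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.events = deque()    # (timestamp, topic), oldest first
        self.counts = Counter()  # topic -> mentions inside the current window

    def add(self, timestamp, topic):
        """Record a new mention and expire anything that has left the window."""
        self.events.append((timestamp, topic))
        self.counts[topic] += 1
        self._evict(timestamp)

    def _evict(self, now):
        # Remove events older than the window so counts reflect only recent activity.
        while self.events and self.events[0][0] <= now - self.window_seconds:
            _, old_topic = self.events.popleft()
            self.counts[old_topic] -= 1
            if self.counts[old_topic] == 0:
                del self.counts[old_topic]

    def top(self, k, now):
        """Return the k most-mentioned topics in the window ending at `now`."""
        self._evict(now)
        return self.counts.most_common(k)
```

With a 300-second window, two "pricing" mentions and one "ai" mention in the first 20 seconds make "pricing" the top topic; once those mentions age out, only newer activity counts. The same pattern works for engagement metrics such as clicks per page.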

Supporting specific use cases

The final step is tailoring the processed data to specific applications, such as dashboards or key performance indicators (KPIs). Then, you can trigger automated actions based on set criteria and conditions.

For example, if a user repeatedly visits the support page, your site could automatically suggest they watch a tutorial video.
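The support-page example can be expressed as a simple event-driven rule. In this minimal sketch, the `/support` path, the three-visit threshold, the `SupportNudge` class, and the `suggest_tutorial_video` action name are all hypothetical; in practice, the rule would run inside whatever system receives your page-view events.

```python
from collections import defaultdict

# Hypothetical values chosen for illustration.
SUPPORT_PATH = "/support"
VISIT_THRESHOLD = 3


class SupportNudge:
    """Triggers a one-time tutorial suggestion after repeated support-page visits."""

    def __init__(self, threshold=VISIT_THRESHOLD):
        self.threshold = threshold
        self.visits = defaultdict(int)  # user_id -> number of support-page visits
        self.nudged = set()             # users who have already seen the suggestion

    def on_page_view(self, user_id, path):
        """Return an action to take for this page view, or None if no action applies."""
        if path != SUPPORT_PATH:
            return None
        self.visits[user_id] += 1
        if self.visits[user_id] >= self.threshold and user_id not in self.nudged:
            self.nudged.add(user_id)  # avoid repeating the same suggestion
            return "suggest_tutorial_video"
        return None
```

Firing the suggestion only once per user keeps the automation helpful rather than repetitive.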

Considerations when implementing real-time data tools

Effectively using a real-time data tool requires technical know-how and a strategic approach. Key considerations include:

- Maintaining fast response times — Delays in data processing or reporting can affect the usefulness of real-time insights, so minimizing latency is key in real-time data systems.

- Managing growth potential — As data volume and complexity increase, scalability becomes critical for handling higher loads. To plan for future growth, stay on top of system architecture updates and make sure your tool can handle expanding datasets and increased processing demands.

- Controlling costs — Legacy systems often rely on outdated technology and software that can't support the demands of real-time data processing. To futureproof your investment, choose an analytics tool that can analyze continuous data flows, or migrate your website to a platform that supports real-time analytics, weighing the upfront expenses against the long-term benefits of enhanced data capabilities.


9 real-time data streaming tools for insightful analytics

Despite these challenges, real-time analytics tools can offer scalable solutions with a strong return on investment. Here are nine platforms with features that suit various industries and workflows.

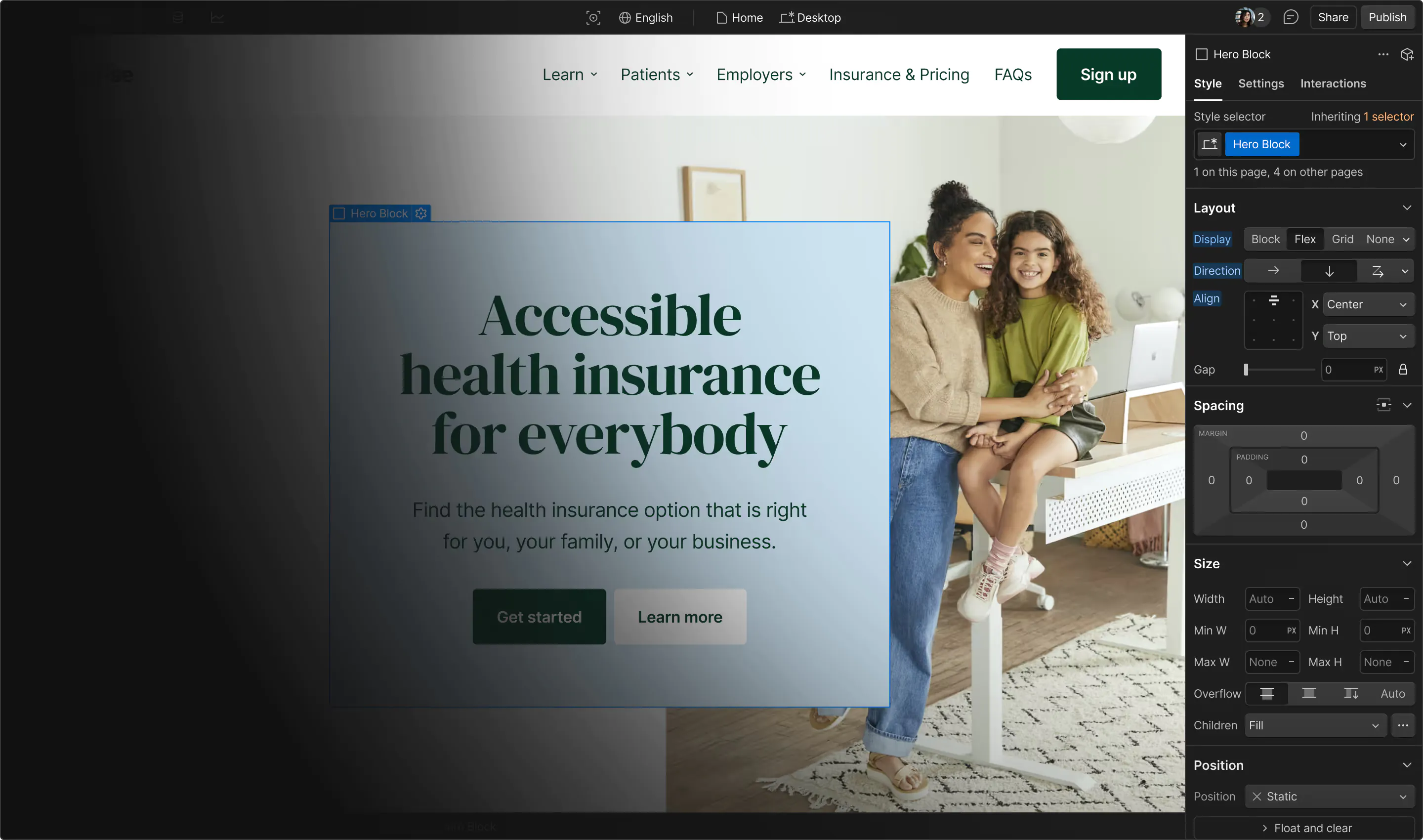

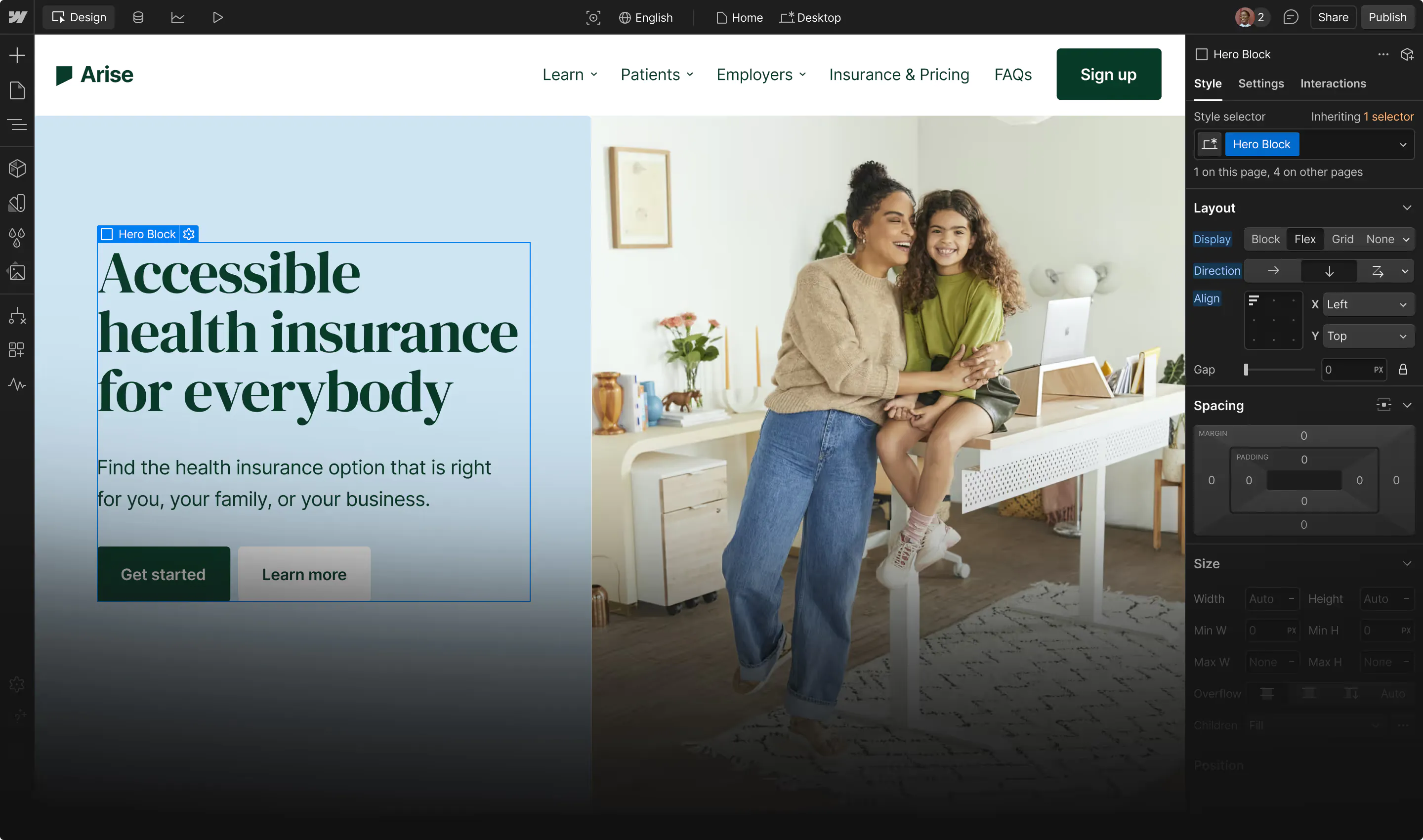

1. Webflow Analyze

Webflow Analyze is our native analytics feature that allows you to access in-depth metrics without setting up third-party tools. This solution removes the need for complex external configurations, making data analysis more approachable for Webflow users.

With a one-click setup and automatic event tracking, Webflow Analyze lets you monitor real-time data — with or without relying on developers — by processing and visualizing metrics with minimal lag. Your teams can instantly view page views, clicks, and user paths for data-driven decision-making.

Analyze provides personalized insights for various roles, from web designers to content marketers to general contributors. For example, designers can see which pages and elements perform best and make design changes based on actual user interactions. Marketers can gain insights into content effectiveness, allowing them to develop better advertising campaigns and strategies.

Webflow also offers built-in security features designed to support compliance with data protection regulations and reduce the risk of unauthorized access to sensitive information. Because analytics lives inside the same platform, you may not need separate third-party analytics apps, which can reduce your overall costs.

Best suited for: Individuals and teams that want an all-in-one platform that combines website building with up-to-date analytics.

2. Google Cloud Dataflow

Google Cloud Dataflow simplifies data streaming and processing pipelines, removing the need to manage infrastructure manually. It processes high-velocity data in real time, ensuring fast and reliable performance with minimal intervention.

As a cloud-based solution, it automatically scales to match growing data demands, making it suitable for businesses with fluctuating workloads. Its pay-as-you-go pricing model means you pay only for the resources you use.

Best suited for: Small to large enterprises requiring a straightforward data processing tool without the complexity of infrastructure management.

Pricing: Custom, pay-as-you-go quotes

3. Amazon Kinesis

Amazon Kinesis, a cloud-based data streaming analytics platform within the Amazon Web Services (AWS) ecosystem, delivers real-time insights with minimal latency. Kinesis seamlessly integrates with AWS services, supporting scalable growth without performance bottlenecks. While it offers flexible pay-per-use pricing, costs can rise with heavy data usage.

Best suited for: Organizations using AWS solutions looking for an integrated tool for data processing and cross-functional workflows.

Pricing: Custom, pay-as-you-go quotes

4. Apache Kafka

Apache Kafka is a distributed event streaming platform known for its scalability and fault tolerance, meaning its ability to keep operating despite interruptions or component failures. Its distributed architecture lets you build data pipelines that transmit and process data in real time with low latency.

As open-source software, Apache Kafka is free to use and can be customized to fit specific organizational requirements. For instance, teams can integrate it with existing data systems, support large-scale data processing needs, and tailor data handling to unique operational workflows and industry-specific demands. And because it's open source, Kafka has an active community of developers who regularly contribute updates and add-ons, helping the platform grow alongside your needs.

Best suited for: Enterprises that value high fault tolerance and an open-source solution supported by an active community.

Pricing: Free

5. Apache Storm

Apache Storm’s low-latency response times make it suitable for real-time analytics and fraud detection. Its high-speed data processing supports rapid decision-making, especially in high-stakes industries like finance and cybersecurity. The platform scales horizontally, accommodating increasing workloads as your business grows.

Because it's open source, Storm is free and works with multiple programming languages, including Java and Python. However, setting up and managing the infrastructure requires additional resources, such as dedicated developers.

Best suited for: Companies requiring low-latency data processing, especially in the finance, ecommerce, and cybersecurity industries.

Pricing: Free

6. Apache Flink

Apache Flink supports real-time (stream) and historical (batch) data processing, allowing companies to analyze current data alongside previous trends.

Its architecture enables low-latency, high-throughput processing, meaning it can quickly handle large volumes of data and scale efficiently. This capability is helpful for scenarios where prompt responses are critical, such as fraud detection or live recommendation systems.

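Flink itself is open source and free to use, but you'll pay for the infrastructure you run it on, such as compute and disk space, or for a managed Flink service if you'd rather not operate it yourself.

Best suited for: Enterprises with high-volume data streams that require both real-time and batch processing for their databases.

Pricing: Free to self-host; managed services typically quote custom pricing based on the plan and resources used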

7. Azure Stream Analytics

Microsoft's Azure Stream Analytics integrates with other Azure products and services to offer up-to-date insights and efficient scalability. Its SQL-based query language and no-code editor also let users with less technical experience build complex queries, broadening access across teams.

This tool processes and visualizes data instantly, encouraging quick decision-making. While its pay-as-you-go pricing is flexible, costs can rise with high data volumes.

Best suited for: Businesses using Microsoft Azure Cloud services, especially those looking for a visual interface for real-time data processing and analytics.

Pricing: Custom, pay-as-you-go quotes

8. IBM Cloud Pak for Data

Like other analytics tools on this list, IBM Cloud Pak for Data offers end-to-end data streaming. It also provides advanced artificial intelligence (AI) and machine learning capabilities, and it integrates with other IBM services.

This tool offers enterprise-grade security features for companies with strict data security and compliance requirements. Those in industries that rely on robust data protection — like government agencies and financial institutions — will find this platform useful, especially when scaling data operations.

Best suited for: Enterprises prioritizing advanced analytics, integration with other IBM services, and security measures that meet compliance standards.

Pricing:

- One-month contract, Standard Option starts at $19,824 per month

- One-month contract, Enterprise Option starts at $59,400 per month

- 12-month contract, Standard Option starts at $237,888 per year

- 12-month contract, Enterprise Option starts at $712,800 per year

9. Splunk

Splunk has data analysis and visualization capabilities, turning complex information into business intelligence graphs. This feature is useful for teams without extensive technical knowledge who want to interpret data quickly for faster response times.

The platform also specializes in log monitoring, using AI to detect suspicious activity such as repeated failed logins. Other features include tracking system health, proactively identifying cyber threats, and supporting compliance with security standards.

Best suited for: Companies with strong data security needs, especially those who prefer visual graphs for log management and other security-related activities.

Pricing: Custom quote depending on the plan

Leverage real-time data with Webflow

Instead of just analyzing past trends or reports, real-time data gives you up-to-the-minute insights. This capability allows you to quickly adjust your strategies, optimize website performance, and respond to customer behavior as it happens.

Webflow Analyze improves this process — your designers and marketing teams can access insights directly within the platform to monitor numbers and optimize content.

From tracking visitor behavior to identifying top-performing pages, Webflow Analyze gives you the data you need to improve your website's performance. Learn how Webflow can empower your teams to iterate and build faster.
