Sharding large production datasets is rarely a single event; it’s a careful, incremental process. At Webflow, we migrated hundreds of millions of documents from a vertically scaled database to a sharded architecture using adapter layers, queues, and idempotent jobs. Here’s how we did it without downtime or data inconsistency.

Like many growing platforms, Webflow’s data architecture has evolved over time as scale and reliability requirements increased. Early on, a single database handled core workloads with vertical scaling (adding CPU, memory, or storage) providing ample headroom and growth with relatively low operational overhead. This approach served the platform well with its simplicity and fast iteration, even as redundancy and additional database clusters were introduced to support expanding product and infrastructure needs.

Over time, sustained growth in data volume and throughput exposed the practical limits of vertical scaling. Hardware costs rose, performance improvements began to plateau, and operational risk increased as clusters grew. In Webflow’s case, the replica set behind one of our document clusters had a 4TB maximum size set by the vendor, placing a hard ceiling on how far the cluster could scale vertically. That cluster served as the system of record for a large portion of the platform’s unstructured data, including site DOM, components, styles, and interactions, and its collections continued to grow steadily. While sharding was a known future requirement, and progress had already been made toward migrating site data, other scalability and reliability investments took priority as part of a broader, multi-year evolution of the platform.

As the cluster approached its upper scaling bounds, those theoretical limits became tangible operational risks. Recovery from failures grew slower and more complex, with increasing data volumes expanding the blast radius and extending recovery timelines. What had once been a forward-looking scalability consideration became an immediate reliability concern. In response, we launched a focused migration effort to extract the four largest collections from the document database, reducing its overall size by more than half, and laying the foundation for the sharded architecture described in the rest of this article.

Migration strategy overview

We evaluated several strategies for executing the migration, including big-bang, on-demand, and trickle approaches. Ultimately, we chose a trickle migration for a few key reasons:

- We needed to maintain product stability and achieve zero downtime

- We wanted to validate the migration incrementally, with clear safeguards and rollback paths (spoiler: we didn’t need them)

- An on-demand approach was not viable, since many sites are inactive and would never naturally trigger a migration, preventing full cleanup of non-sharded collections

To support a trickle migration, we introduced an adapter layer: an abstraction that allowed the application to remain agnostic about whether data lived in the sharded or non-sharded database. This meant that sites did not all have to move to the new database at the same time. The interface itself also evolved, since querying the sharded database now required a shard key.

For example, fetching DOM nodes in the legacy abstraction looked like the following:

import * as fullDom from 'models/fullDom'; // our DOM model module

// Fetch the DOM data from the page ID

// Assumes all page IDs are unique

const node = await fullDom.getPage(pageId);

With the new approach, it became:

import {DomAdapter} from 'dataAccess/dom/domAdapter';

import {DomGetPage} from 'dataAccess/dom/domDbAction';

// Asynchronously generate our adapter layer based on which DB it should call

const domAdapter = await DomAdapter.create(

siteId // our shard key

);

// Use the same parameters as before in a type-safe query API with a shard key adapter layer

const dom = await domAdapter.getPage(new DomGetPage(pageId));

Under the hood, the DomAdapter layer was responsible for:

- Transparently determining whether to query the sharded or non-sharded database, based on a site’s migration metadata

- Issuing sharded queries using the resource’s parent site identifier (site ID) as the shard key, which aligned naturally with access patterns since most queries are site-scoped
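
The routing responsibility described above can be illustrated with a minimal sketch. This is not Webflow's implementation: the `PageStore` interface, the `MigrationState` values, the `lookupState` callback, and the in-memory stores are all hypothetical stand-ins, and the real adapter takes typed action objects such as `DomGetPage` rather than a bare page ID.

```typescript
// Hypothetical sketch: route a site's reads to the sharded or legacy store
// based on its migration metadata. All names here are illustrative.

type MigrationState = 'not_migrated' | 'migrated';

interface PageDoc {
  pageId: string;
  siteId: string;
  nodes: string[];
}

// Common interface that both backing stores implement.
interface PageStore {
  getPage(siteId: string, pageId: string): Promise<PageDoc | undefined>;
}

// In-memory stand-in for a database, keyed by page ID.
class InMemoryPageStore implements PageStore {
  private readonly pages: Map<string, PageDoc>;

  constructor(pages: Map<string, PageDoc>) {
    this.pages = pages;
  }

  async getPage(siteId: string, pageId: string): Promise<PageDoc | undefined> {
    const page = this.pages.get(pageId);
    // A sharded store would route on siteId; here we only check ownership.
    return page !== undefined && page.siteId === siteId ? page : undefined;
  }
}

class DomAdapterSketch {
  private readonly siteId: string;
  private readonly store: PageStore;

  private constructor(siteId: string, store: PageStore) {
    this.siteId = siteId;
    this.store = store;
  }

  // Resolve the backing store once, when the adapter is created.
  static async create(
    siteId: string,
    lookupState: (siteId: string) => Promise<MigrationState>,
    legacy: PageStore,
    sharded: PageStore
  ): Promise<DomAdapterSketch> {
    const state = await lookupState(siteId);
    return new DomAdapterSketch(siteId, state === 'migrated' ? sharded : legacy);
  }

  // Callers never learn which database answered the query.
  getPage(pageId: string): Promise<PageDoc | undefined> {
    return this.store.getPage(this.siteId, pageId);
  }
}
```

Resolving the store inside an asynchronous `create` factory mirrors the shape of the `DomAdapter.create(siteId)` call shown earlier: the metadata lookup happens once per adapter, and every subsequent query carries the shard key implicitly.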

Once adapter layers were in place for each collection, every callsite had to be updated to use the new interface. Some migrations were straightforward; others required dozens of changes and the addition of thorough test coverage to ensure correctness.

Migration system

At the time this project was scoped, Webflow did not have a comprehensive system for large-scale data migrations. The standard approach was to spin up a single ephemeral Kubernetes pod and run migration scripts for as long as needed. Given the scale of Webflow’s datasets, often involving hundreds of millions of documents and terabytes of data, this frequently resulted in migrations that ran for weeks, required manual progress tracking, and demanded sustained operational oversight.

As part of this initiative, we wanted to build something that could operate at a significantly higher scale, with better observability, fault tolerance, and automation.

A new migration system, right on queue

Webflow already uses Amazon SQS extensively for asynchronous workloads such as site publishing and AI site generation. Over time, we’ve built abstractions and infrastructure that make it easy to define queues and batch workers for new use cases. Leveraging this existing system was a natural fit for large-scale data migration.

The primary challenge was enqueueing tens of millions of jobs efficiently, given that SendMessageBatch is limited to 10 messages per request. To work around this, we implemented a fanout pattern using an additional SQS queue:

- An initial async request (initFanoutRequest) enqueues a range of site IDs to be migrated

- These identifiers are grouped into batches and submitted as fanoutBatchOfSites jobs to a sharded fanout queue

- Each batch is then split into individual site-level jobs and enqueued into a sharded migration queue

- Migration workers process these jobs, applying ETL logic to migrate data into the sharded database
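
The two-stage fanout above can be sketched roughly as follows. Only the 10-message SendMessageBatch limit and the `fanoutBatchOfSites` job name come from the description; the `migrateSite` job shape, the `sitesPerFanoutJob` parameter, and the in-memory queue (used here instead of a real SQS client) are assumptions for illustration.

```typescript
// Hypothetical sketch of the two-stage fanout. Job shapes, batch sizes, and
// the in-memory queue are assumptions made for illustration.

const SQS_BATCH_LIMIT = 10; // SendMessageBatch accepts at most 10 messages

interface FanoutBatchJob {
  kind: 'fanoutBatchOfSites';
  siteIds: string[];
}

interface MigrateSiteJob {
  kind: 'migrateSite';
  siteId: string;
}

// Split a list into consecutive chunks of at most `size` items.
function chunk<T>(items: readonly T[], size: number): T[][] {
  if (size < 1) throw new Error('size must be at least 1');
  const out: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    out.push(items.slice(i, i + size));
  }
  return out;
}

// In-memory stand-in for a queue that enforces the per-request limit.
class InMemoryQueue<T> {
  readonly messages: T[] = [];
  requestCount = 0;

  sendBatch(batch: readonly T[]): void {
    if (batch.length > SQS_BATCH_LIMIT) {
      throw new Error('batch exceeds the per-request limit');
    }
    this.requestCount += 1;
    this.messages.push(...batch);
  }
}

// Stage 1: group site IDs into coarse fanout jobs, so that one message
// stands in for many sites.
function initFanout(
  siteIds: readonly string[],
  sitesPerFanoutJob: number,
  fanoutQueue: InMemoryQueue<FanoutBatchJob>
): void {
  const jobs = chunk(siteIds, sitesPerFanoutJob).map(
    (ids): FanoutBatchJob => ({ kind: 'fanoutBatchOfSites', siteIds: ids })
  );
  for (const group of chunk(jobs, SQS_BATCH_LIMIT)) {
    fanoutQueue.sendBatch(group);
  }
}

// Stage 2: a fanout worker expands one job into individual site-level jobs.
function handleFanoutBatch(
  job: FanoutBatchJob,
  migrationQueue: InMemoryQueue<MigrateSiteJob>
): void {
  const siteJobs = job.siteIds.map(
    (siteId): MigrateSiteJob => ({ kind: 'migrateSite', siteId })
  );
  for (const group of chunk(siteJobs, SQS_BATCH_LIMIT)) {
    migrationQueue.sendBatch(group);
  }
}
```

The point of the intermediate queue is that the initial request only has to enqueue a small number of coarse messages; the per-site enqueueing work is then spread across many fanout workers running in parallel.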

Migration job design

Each migration job was implemented as an idempotent, single-responsibility task that migrated an individual site’s collection resources and updated the migration metadata used by the adapter layer to resolve the correct source of truth. The migration primarily performed a direct copy of non-sharded data, while selectively transforming certain identifier fields from string values to ObjectId types to improve storage efficiency and query performance, and appending the site ID shard key to better align with site-scoped access patterns. By isolating each migration job to a single site, migrations could be safely retried or resumed after interruptions such as restarts, timeouts, or deploys, without leaving behind partial or inconsistent state.
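
To make the idempotency property concrete, here is a minimal sketch of a per-site job. It assumes in-memory maps in place of real collections and a hypothetical `MigrationContext` shape; the ObjectId conversion is omitted, and only the shard key append is shown.

```typescript
// Hypothetical sketch of an idempotent per-site migration job. The stores are
// in-memory maps; a real job would use database upserts instead.

interface LegacyDoc {
  id: string;
  pageId: string;
  body: string;
}

interface ShardedDoc extends LegacyDoc {
  siteId: string; // shard key appended during migration
}

type SiteMigrationState = 'not_migrated' | 'migrated';

interface MigrationContext {
  legacyDocsBySite: Map<string, LegacyDoc[]>;
  shardedDocs: Map<string, ShardedDoc>; // keyed by document id
  migrationState: Map<string, SiteMigrationState>;
}

// Returns the number of documents copied by this invocation.
function migrateSite(siteId: string, ctx: MigrationContext): number {
  // Already migrated: re-copying could overwrite newer sharded writes.
  if (ctx.migrationState.get(siteId) === 'migrated') {
    return 0;
  }
  const source = ctx.legacyDocsBySite.get(siteId) ?? [];
  for (const doc of source) {
    // Upsert by id: a retry overwrites the same key rather than duplicating.
    ctx.shardedDocs.set(doc.id, { ...doc, siteId });
  }
  // Flip the source of truth only after every document has been copied.
  ctx.migrationState.set(siteId, 'migrated');
  return source.length;
}
```

Because the metadata flips only at the end, a job interrupted mid-copy leaves the site pointing at the non-sharded data, and a retry simply rewrites the same keys before completing.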

Even after a successful migration, we retained a rollback mechanism as a safety net. This allowed a site to be reverted to the non-sharded collection through the same fanout pipeline, restoring the original documents as the source of truth if post-migration validation or operational concerns arose. Fortunately, the rollback was never needed.

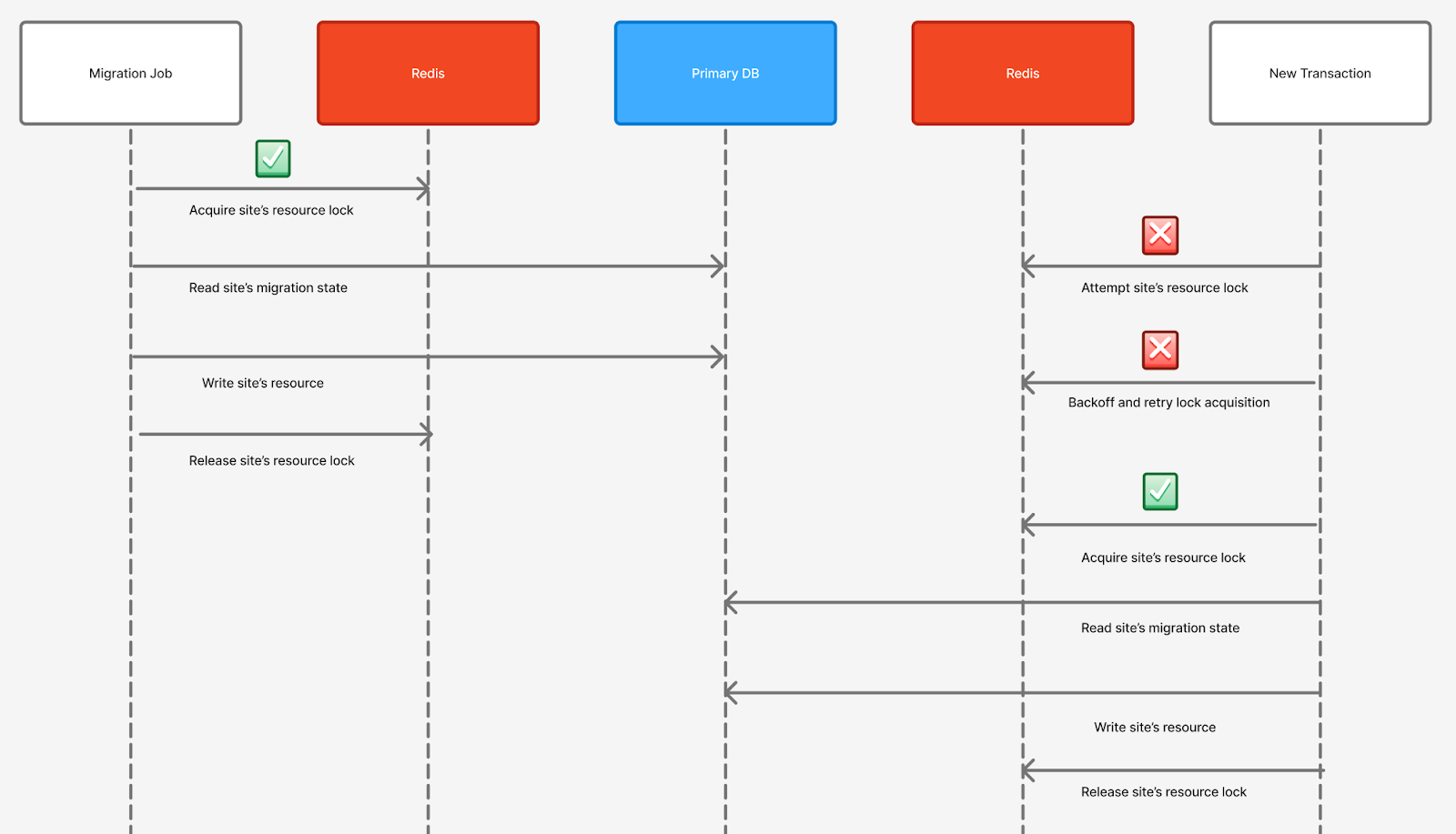

Concurrency and data consistency

While migrations were in flight, there was a risk of race conditions if another client attempted to write to the same non-sharded documents. Without proper coordination, this could result in data corruption or inconsistent state between competing transactions. To address this, we implemented a Redis-backed locking mechanism to guarantee data consistency. The first write operation acquires the lock, and subsequent writes block until the lock is released. Lock scope was intentionally narrow, covering only the operations required to read migration state metadata and write the corresponding resource data.
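
As a rough illustration of a narrowly scoped lock, the sketch below models a store with the semantics of the Redis `SET key value NX PX ttl` command, which is a common basis for Redis locks. Whether Webflow's lock works this way is an assumption; the `LockStore` interface, the key format, and the in-memory store are hypothetical. This version only tries once and reports failure, whereas the lock described above makes later writers wait, which a real caller would approximate by retrying.

```typescript
// Hypothetical sketch of a narrowly scoped lock. `LockStore` mimics the
// semantics of Redis `SET key value NX PX ttl`; the in-memory version is a
// stand-in for illustration only.

interface LockStore {
  // Set the key only if it is absent or expired; return whether it was set.
  setIfAbsent(key: string, owner: string, ttlMs: number, nowMs: number): boolean;
  // Delete the key only if `owner` still holds it.
  releaseIfOwner(key: string, owner: string): boolean;
}

class InMemoryLockStore implements LockStore {
  private readonly entries = new Map<string, { owner: string; expiresAt: number }>();

  setIfAbsent(key: string, owner: string, ttlMs: number, nowMs: number): boolean {
    const current = this.entries.get(key);
    if (current !== undefined && current.expiresAt > nowMs) {
      return false; // someone else holds an unexpired lock
    }
    this.entries.set(key, { owner, expiresAt: nowMs + ttlMs });
    return true;
  }

  releaseIfOwner(key: string, owner: string): boolean {
    const current = this.entries.get(key);
    if (current === undefined || current.owner !== owner) {
      return false;
    }
    this.entries.delete(key);
    return true;
  }
}

type LockResult<T> = { acquired: true; result: T } | { acquired: false };

// Run `critical` while holding the site's lock, releasing it afterwards even
// if `critical` throws. Keep `critical` small: read state, write data.
function tryWithSiteLock<T>(
  store: LockStore,
  siteId: string,
  owner: string,
  ttlMs: number,
  critical: () => T,
  now: () => number = Date.now
): LockResult<T> {
  const key = `migration-lock:${siteId}`;
  if (!store.setIfAbsent(key, owner, ttlMs, now())) {
    return { acquired: false };
  }
  try {
    return { acquired: true, result: critical() };
  } finally {
    store.releaseIfOwner(key, owner);
  }
}
```

The expiry time guards against a crashed holder blocking a site indefinitely, and the owner check on release prevents one writer from deleting a lock that has since passed to another.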

Locking introduces its own risks, including deadlocks and lock contention, which can degrade performance or cause hard failures. To minimize risk exposure, locks were applied only under narrowly defined, dynamically controlled conditions: a site ID had to fall within a predefined ObjectId range, and the write path had to target the collection actively undergoing the migration. This, in effect, created a sliding window of site IDs eligible for locking at any given time. Because the batch fanout design dispatched sites in ascending ID order and could resume from any checkpoint, we were able to target specific site ID ranges and limit locking to a small, controlled subset of sites. The approach ensured that strong consistency guarantees were preserved during active migrations while keeping the performance and reliability impact on the broader platform tightly contained.
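
The gating conditions described above could be expressed as a small predicate. This is a sketch under assumptions: the `LockWindow` shape and function names are hypothetical, and site IDs are treated as 24-character hex ObjectId strings, which sort in the same order as their underlying bytes once lowercased.

```typescript
// Hypothetical sketch of the "sliding window" gate: lock only when the write
// targets the collection being migrated and the site ID is inside the
// currently active ObjectId range.

interface LockWindow {
  collection: string; // collection currently being migrated
  startSiteId: string; // inclusive lower bound (24-char hex ObjectId)
  endSiteId: string; // inclusive upper bound (24-char hex ObjectId)
}

const OBJECT_ID_HEX = /^[0-9a-f]{24}$/;

// Lowercase and validate so that plain string comparison is order-preserving.
function normalizeObjectId(id: string): string {
  const lower = id.toLowerCase();
  if (!OBJECT_ID_HEX.test(lower)) {
    throw new Error(`not a 24-character hex ObjectId: ${id}`);
  }
  return lower;
}

function shouldLock(
  siteId: string,
  collection: string,
  lockWindow: LockWindow | null
): boolean {
  // No active window means no migration is in flight, so never lock.
  if (lockWindow === null) return false;
  // Writes to other collections are unaffected by this migration.
  if (collection !== lockWindow.collection) return false;
  const id = normalizeObjectId(siteId);
  return (
    id >= normalizeObjectId(lockWindow.startSiteId) &&
    id <= normalizeObjectId(lockWindow.endSiteId)
  );
}
```

Advancing `startSiteId` and `endSiteId` as the fanout progresses is what makes the window slide: sites outside the range skip the lock entirely and pay no coordination cost.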

Outcomes and impact

The most immediate outcome of this project was a substantial reduction in the size of Webflow’s primary database. By extracting the four largest collections, we reduced the overall data footprint by more than 50%, removing more than 2TB of data and hundreds of millions of documents from the main cluster. This materially lowered read and write throughput on the primary database, reduced operational risk by shrinking blast radius and recovery timelines, and improved steady-state performance across the platform. Some collections saw latency improvements ranging from 10% to 45% across core database operations. These gains were largely driven by a smaller working set and reduced index pressure on the primary cluster, decreasing contention on shared resources like CPU, disk I/O, and replica set coordination, and lowering tail latency for common read and write paths.

Beyond these short-term wins, the migration validated the long-term benefits of horizontal sharding as Webflow continues to scale. We established internal sharding guidelines, removed constraints imposed by a single database instance, and restored headroom for future growth. Just as importantly, the adapter layer created a repeatable blueprint for sharding additional collections, and the migration pipeline evolved into a migration-agnostic, battle-tested framework now reused for other large-scale data migrations without disrupting production workloads.

